Vatsal Sharan: Sample Amplification: Increasing Dataset Size even when Learning is Impossible (USC)

- Published: Oct 4, 2024

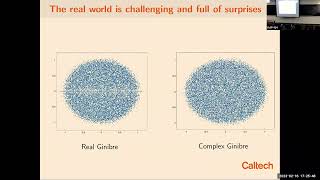

Is learning a distribution always necessary for generating new samples from the distribution? To study this, we introduce the problem of “sample amplification”: given n independent draws from an unknown distribution D, to what extent is it possible to output a set of m datapoints that are indistinguishable from m i.i.d. draws from D (where m is larger than n)? Curiously, we show that nontrivial amplification is often possible in the regime where the number of datapoints n is too small to learn D to any nontrivial accuracy. We prove upper bounds and matching lower bounds on sample amplification for a number of distribution families including discrete distributions, Gaussians, and any continuous exponential family.

This is based on joint work with Brian Axelrod, Yanjun Han, Shivam Garg and Greg Valiant.
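
To make the problem statement in the abstract concrete, here is a minimal sketch of what an amplifier's interface might look like for a discrete distribution. It is an illustration, not the procedure from the talk: the function name `amplify_by_resampling` and the resampling strategy (keep the n original draws, add m - n draws from their empirical distribution, then shuffle) are assumptions chosen for simplicity. Whether the output is actually indistinguishable from m i.i.d. draws from D depends on n, m, and the distribution family, which is what the upper and lower bounds in the talk quantify.

```python
import random
from collections import Counter


def amplify_by_resampling(samples, m, rng=None):
    """Hypothetical amplifier: turn n samples into m >= n samples.

    Assumption (not from the talk): keep all n original draws, add
    m - n draws from the empirical distribution of the input, and
    shuffle so the added points are not identifiable by position.
    """
    rng = rng or random.Random()
    n = len(samples)
    if n == 0:
        raise ValueError("need at least one input sample")
    if m < n:
        raise ValueError("m must be at least the number of input samples")

    # Extra points are drawn uniformly from the observed samples,
    # i.e. from the empirical distribution of the input.
    extra = [rng.choice(samples) for _ in range(m - n)]

    amplified = list(samples) + extra
    rng.shuffle(amplified)
    return amplified


if __name__ == "__main__":
    # Toy setup: D is uniform over k symbols, with n much smaller than k,
    # so the n draws are far too few to estimate D accurately.
    k, n, m = 10_000, 200, 210
    source = random.Random(0)
    draws = [source.randrange(k) for _ in range(n)]

    out = amplify_by_resampling(draws, m, random.Random(1))
    print(len(out), "points returned from", n, "input draws")
    print("distinct values in output:", len(Counter(out)))
```

The sketch only fixes the input/output contract: n draws in, m > n points out. A distinguisher who knows D would look for telltale signs such as too many repeated values, so the number of extra points that can be added safely is limited.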