RAG Evaluation with Milvus, Ragas, LangChain, Ollama, Llama3

- Published: Sep 10, 2024

- About the session

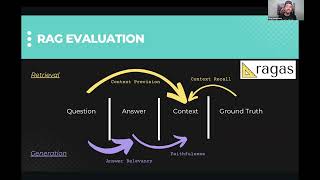

Retrieval Augmented Generation (RAG) enhances chatbots by incorporating custom data into the prompt. Using large language models (LLMs) as judges has gained prominence in modern RAG systems. This talk demos Ragas, an open-source automation tool for RAG evaluations. Christy will discuss and demo how to evaluate a RAG pipeline built on Milvus, using RAG metrics such as context F1-score and answer correctness.
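To make the context F1-score concrete, here is a minimal sketch of the arithmetic behind it: the harmonic mean of context precision and context recall. This is not Ragas's implementation. Ragas scores context with LLM judgments, while this simplified version assumes you already have human-labeled relevant chunk IDs and compares them by plain set overlap. The function name `context_f1` and the chunk IDs are hypothetical, chosen only for illustration.

```python
def context_f1(retrieved_ids, relevant_ids):
    """F1 of retrieved chunk IDs against a labeled set of relevant chunk IDs.

    Simplified, assumption-based stand-in for an LLM-judged context metric:
    relevance is decided by exact ID match, not by a judge model.
    """
    retrieved, relevant = set(retrieved_ids), set(relevant_ids)
    hits = len(retrieved & relevant)
    if hits == 0:
        # Covers empty inputs and the no-overlap case, avoiding division by zero.
        return 0.0
    precision = hits / len(retrieved)  # share of retrieved chunks that are relevant
    recall = hits / len(relevant)      # share of relevant chunks that were retrieved
    return 2 * precision * recall / (precision + recall)


# Hypothetical example: the retriever returned 4 chunks,
# and 2 of the 3 labeled-relevant chunks are among them.
score = context_f1(["c1", "c2", "c7", "c9"], ["c1", "c2", "c3"])
print(round(score, 3))  # 0.571 (precision 0.5, recall 0.667)
```

Running the same function over the results of two different retrieval methods gives a rough side-by-side comparison, which is the kind of component-level evaluation the talk covers.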

Topics Covered

- Foundation Model Evaluation vs RAG Evaluation

- Do you need human-labeled ground truths?

- Human Evaluation vs LLM-as-a-judge Evaluations

- Overall RAG vs RAG component Evaluations

- Example of different Retrieval methods with Evaluation

- Example of different Generation methods with Evaluation

▼ ▽ LINKS & RESOURCES

Notebook: github.com/mil...

Blog - RAG Evaluation using Ragas: / using-evaluations-to-o...

Blog - Exploring RAG: Chunking, LLMs, and Evaluations: zilliz.com/blo...

Ragas GitHub: github.com/exp...

Milvus GitHub: github.com/mil...

▼ ▽ JOIN THE COMMUNITY - MILVUS Discord channel

Join this active community of Milvus users to get help, learn tips and tricks on how to use Milvus, or just get to be part of a vibrant community of smart developers! / discord

▶ CONNECT WITH US

X: / zilliz_universe

LINKEDIN: / zilliz

WEBSITE: zilliz.com/

Thank you for the informative seminar. I will also try to run your 'Demo Notebook'.

Great seminar, will run your notebook to learn more