The Softmax Activation Function | Deep Learning Basics


- Published: Sep 8, 2024

- Related videos - • All-in-one Activation ...

Deep Learning Playlist - tinyurl.com/4a...

In this video, we'll explore:

Why Sigmoid is not ideal for the output layer in multiclass classification ❌.

Using the Softmax function in real-world examples:

Stock market decisions 📈: Buy, Sell, or Hold.

Handwritten digit recognition ✏️: Classifying digits from 0 to 9.

Why Sigmoid Falls Short in Multiclass Classification 🚫

The sigmoid function is great for binary classification but not so much for multiclass problems. Here's why:

Sigmoid squashes each output independently into the range 0 to 1, so nothing ensures that the outputs across classes add up to 1.

This makes the results hard to interpret when deciding between multiple classes, because several classes can all score close to 1 at the same time.
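To make the limitation concrete, here is a minimal sketch in plain Python. The three raw scores for Buy, Sell, and Hold are made-up values chosen only for illustration; they do not come from the video.

```python
import math

def sigmoid(z):
    """Logistic sigmoid of a single raw score."""
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical raw scores (logits) for Buy, Sell, Hold; illustrative only.
logits = [2.0, 1.0, 0.5]

# Sigmoid is applied to each score on its own, ignoring the other classes.
sigmoid_outputs = [sigmoid(z) for z in logits]
total = sum(sigmoid_outputs)

print([round(p, 3) for p in sigmoid_outputs])  # each value is between 0 and 1
print(round(total, 3))                         # about 2.234, not 1
```

Each value looks like a probability by itself, but together they sum to roughly 2.23, so they cannot be read as one distribution over the three actions.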

Enter Softmax 🌈✨

The Softmax function is perfect for multiclass classification! Here's why:

Outputs Probabilities: Every output lies between 0 and 1 and all outputs add up to 1, making it easy to interpret them as probabilities.

Example: For our stock market scenario, Softmax will help decide the probability of buying, selling, or holding a stock. Similarly, for digit recognition, it provides a probability distribution over all 10 digits.
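The stock market scenario can be sketched in a few lines of plain Python. The logits and the label order below are assumptions made for illustration, not values from the video.

```python
import math

def softmax(logits):
    """Exponentiate each score, then divide by the sum of the exponentials."""
    exps = [math.exp(z) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical labels and raw scores; illustrative only.
labels = ["Buy", "Sell", "Hold"]
logits = [2.0, 1.0, 0.5]

probs = softmax(logits)
decision = labels[probs.index(max(probs))]

for label, p in zip(labels, probs):
    print(f"{label}: {p:.3f}")
print("Sum:", round(sum(probs), 6))
print("Decision:", decision)
```

The same function works unchanged for digit recognition: pass it 10 logits and it returns a distribution over the digits 0 to 9.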

What We Expect from an Output Activation Function 🧐

Probability-like Values: Outputs should look like probabilities.

Sum to 1: The sum of the outputs should be 1, forming a valid probability distribution.
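For reference, the standard definition of Softmax satisfies both requirements by construction. The symbols are notation introduced here: $z_i$ is the raw score (logit) for class $i$ and $K$ is the number of classes.

$$
\operatorname{softmax}(z)_i = \frac{e^{z_i}}{\sum_{j=1}^{K} e^{z_j}}, \qquad 0 < \operatorname{softmax}(z)_i < 1, \qquad \sum_{i=1}^{K} \operatorname{softmax}(z)_i = 1
$$

Each exponential is positive, so every output is positive, and dividing by the sum of all the exponentials forces the outputs to add up to 1.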

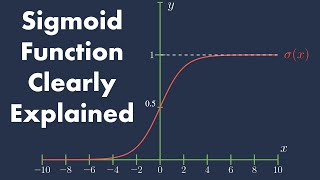

Visualizing Sigmoid and Softmax 📉📈

We'll show you a graphical representation of the sigmoid function for a 3-class classification problem and explain its limitations.

Deep Dive into Softmax 💡

We'll closely examine Softmax and its properties:

Non-linearity: Softmax is non-linear ✅.

Differentiability: Softmax is differentiable ✅.

Zero-centeredness: Softmax is not zero-centered ❌.

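Computational Efficiency: Softmax needs an exponential for every class plus a normalizing sum over all classes, so it costs more than simple element-wise activations such as ReLU ❌.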

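Saturation: Softmax can saturate when one score is much larger than the rest. The outputs are pushed toward 0 and 1 and the gradients shrink, which isn't ideal, but it's manageable ❌.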
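The differentiability and saturation properties can be checked with a small sketch. It uses the common trick of subtracting the largest score before exponentiating, which may not be the approach shown in the video, and the helper names and input values are hypothetical.

```python
import math

def stable_softmax(logits):
    """Softmax with the maximum subtracted first to avoid overflow."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def softmax_jacobian(probs):
    """Derivative of output i w.r.t. score j: p_i * (1 - p_j) if i == j, else -p_i * p_j."""
    n = len(probs)
    return [
        [probs[i] * ((1.0 if i == j else 0.0) - probs[j]) for j in range(n)]
        for i in range(n)
    ]

# Moderate scores: gradients are clearly non-zero.
moderate = stable_softmax([2.0, 1.0, 0.5])
moderate_grad = softmax_jacobian(moderate)

# Extreme scores: a naive math.exp(1000.0) would raise OverflowError,
# but the shifted version runs and shows the saturated output.
saturated = stable_softmax([1000.0, 0.0, -1000.0])
saturated_grad = softmax_jacobian(saturated)
largest_saturated_grad = max(abs(g) for row in saturated_grad for g in row)

print([round(p, 3) for p in moderate])
print(saturated)
print(largest_saturated_grad)
```

With the extreme scores the output collapses to a one-hot vector and every entry of the Jacobian is zero in floating point, which is the saturation behavior described above.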

Join us for this detailed yet simple tutorial on Softmax and understand why it is the go-to activation function for the output layer in multiclass classification tasks. 📚

Don't forget to like 👍, share ↗️, and subscribe 🔔 for more insightful videos!

Thanks for this ❤❤❤❤❤❤❤❤

Glad you liked it 👍