Trevor Hastie - Gradient Boosting Machine Learning

- Published: 26 Aug 2024

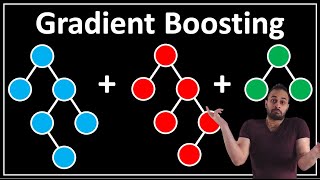

- Professor Hastie takes us through Ensemble Learners like decision trees and random forests for classification problems. Don’t just consume, contribute your code and join the movement: github.com/h2oai

User conference slides on open source machine learning software from H2O.ai at: www.slideshare....

Why did the video editor put the inset video right in the middle, over the last line of the slides? It should have been placed where it would not block the slides, such as in the corner.

Big fan of Prof. Hastie! Genius statistician indeed!

Great lecture, got to revise the basics

Thanks prof 🙌

It's funny how boosting applies to real life, like cleaning my basement of a 20-year accumulation of stuff with emotional parameters attached. It's basically a divide-and-conquer method called boosting: you process the painful emotions and pull predictors out of each diminished remainder pile of stuff. At the end, you'll say, "Phew. That was not as Herculean as cleaning the Augean stables."

I didn't know he had a South African accent! Greetings from Africa.

I know that Professor Trevor Hastie is good, but if you are here to learn about Gradient Boosting, this is not the video for you. The lecture is only 30 minutes long, and the remaining 15 minutes are Q&A. It gives only a very brief overview of Gradient Boosting and also covers other algorithms, so the title is misleading.

Thanks

Are there any resources or YouTube videos where I can learn GBM and XGBoost?

This guy is awesome: he knows his stuff and explains it really well!

He is the only one who knows his stuff.

Excellent advice in Q & A. Thank you.

Incredible talk.

great presentation

Amazing lecture. Thank you!

oh yeah

At 9:40, why is bias slightly increased for bagging just because the trees are shallower? If it were just a single tree, then yes, bias would be higher with a shallow tree than with a deep tree. However, if bagging is defined as averaging the results of N shallow trees, shouldn't the definition of bias also take into account that the model uses N trees?

Shallow trees tend to miss the overall shape of the pattern, hence the slight increase in bias. Averaging many trees mainly reduces variance; each shallow tree carries the same systematic error, so the shallowness still adds bias to the ensemble. It's a tradeoff.
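
The exchange above can be checked with a small simulation. The following is only a rough sketch under assumptions that are not from the talk: a one-dimensional target `sin(x)`, Gaussian noise with standard deviation 0.3, depth-1 trees (stumps) as the shallow base learner, and hypothetical helper names (`fit_stump`, `fit_bagged`, `bias2_and_variance`). It estimates squared bias and variance for a single stump and for an average of 25 bootstrapped stumps.

```python
import math
import random


def fit_stump(xs, ys):
    """Fit a depth-1 regression tree (a single split) by least squares."""
    pairs = sorted(zip(xs, ys))
    n = len(pairs)
    total = sum(y for _, y in pairs)
    # (score, threshold, left_mean, right_mean); lower score is better
    best = (float("inf"), None, total / n, total / n)
    left_sum = 0.0
    for i in range(1, n):
        left_sum += pairs[i - 1][1]
        if pairs[i][0] == pairs[i - 1][0]:
            continue  # cannot split between identical x values
        left_mean = left_sum / i
        right_mean = (total - left_sum) / (n - i)
        # Minimising squared error is equivalent to minimising this score
        score = -(i * left_mean ** 2 + (n - i) * right_mean ** 2)
        if score < best[0]:
            threshold = (pairs[i - 1][0] + pairs[i][0]) / 2
            best = (score, threshold, left_mean, right_mean)
    _, threshold, left_mean, right_mean = best
    if threshold is None:
        return lambda x: left_mean  # degenerate sample: predict the mean
    return lambda x: left_mean if x <= threshold else right_mean


def fit_bagged(xs, ys, n_trees, rng):
    """Average n_trees stumps, each fit on a bootstrap resample."""
    n = len(xs)
    stumps = []
    for _ in range(n_trees):
        idx = [rng.randrange(n) for _ in range(n)]
        stumps.append(fit_stump([xs[i] for i in idx], [ys[i] for i in idx]))
    return lambda x: sum(s(x) for s in stumps) / n_trees


def bias2_and_variance(fit, truth, n_reps, n_train, test_xs, rng):
    """Estimate squared bias and variance over repeated training sets."""
    preds = []
    for _ in range(n_reps):
        xs = [rng.uniform(0.0, 2.0 * math.pi) for _ in range(n_train)]
        ys = [truth(x) + rng.gauss(0.0, 0.3) for x in xs]
        model = fit(xs, ys)
        preds.append([model(x) for x in test_xs])
    bias2 = 0.0
    variance = 0.0
    for j, x in enumerate(test_xs):
        column = [p[j] for p in preds]
        mean = sum(column) / n_reps
        bias2 += (mean - truth(x)) ** 2
        variance += sum((c - mean) ** 2 for c in column) / n_reps
    return bias2 / len(test_xs), variance / len(test_xs)


rng = random.Random(0)
test_xs = [i * 2.0 * math.pi / 20 for i in range(1, 20)]

single = bias2_and_variance(fit_stump, math.sin, 200, 50, test_xs, rng)
bagged = bias2_and_variance(
    lambda xs, ys: fit_bagged(xs, ys, 25, rng), math.sin, 200, 50, test_xs, rng
)

print("single stump   bias^2 = %.3f  variance = %.3f" % single)
print("bagged stumps  bias^2 = %.3f  variance = %.3f" % bagged)
```

In this toy setup the averaged stumps should show clearly lower variance than a single stump, while the squared bias stays well above zero in both cases: every resampled stump is limited to one split, and averaging many of them cannot recover the curvature that a deeper tree could follow. The exact numbers depend on the assumed target, noise level, and sample sizes.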

Bagging is poor man's Bayes .. haha ;)

Very good talk! Congrats!

Brilliant!! :-)

Legend 🙏

Huh?