Transformer models and BERT model: Overview


- Published: Jun 4, 2023

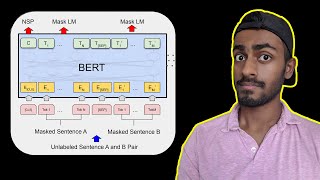

- Watch this video to learn about the Transformer architecture and the Bidirectional Encoder Representations from Transformers (BERT) model. You learn about the main components of the Transformer architecture, and the different tasks that BERT can be used for, such as text classification, question answering, and natural language inference.

Enroll on Google Cloud Skills Boost to view the lab walkthrough and participate in a hands-on lab!

Enroll on Google Cloud Skills Boost → goo.gle/3Wk3jnC

View the Generative AI Learning path playlist → goo.gle/LearnGenAI

Subscribe to Google Cloud Tech → goo.gle/GoogleCloudTech


Important info. I'd love to see a similar video but with real-life examples to illustrate the points.

Need a deeper dive on how BERT works. Can you point to more references?

Well explained, but I'd love to see real-life examples to illustrate the points.

I would love to see a real-time example to illustrate the step.

Is that a practical exercise in which we have to predict the missing word? (@2:43)

“The encoding component is a stack of encoders of the same number” of what? I assume she meant “of the same structure (entirely identical layers)”? It was kind of confirmed later in the video…

Is there someone reviewing these videos before they go out? The previous video on the attention mechanism was absolutely confusing, partly because the notation used did not match the presenter's words!

Since I don't have any background on transformers, I get completely lost at the point where you're explaining the query, key and value vectors and how the weights for these are determined at training time. I had to resort to questioning Bard about this in more detail, but am still lost, although questioning Bard helped me get some understanding of what these 3 vectors are.

Can you more clearly explain how the adjustment to the weights of the query, key and value matrices differs during backpropagation?
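For readers stuck on the same point, the query, key and value projections can be sketched in a few lines. This is a minimal illustration of scaled dot-product self-attention in plain Python, not code from the video: the helper names (`matmul`, `softmax`, `self_attention`) and the toy identity weights are assumptions made for the example. The three weight matrices are ordinary learned parameters; during training, backpropagation computes gradients for all three from the same loss, and they differ only in where each matrix sits in the computation.

```python
import math

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def softmax(row):
    """Numerically stable softmax over one list of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(x, w_q, w_k, w_v):
    """Scaled dot-product self-attention for one sequence.

    x holds one embedding per token; w_q, w_k, w_v are the learned
    projection matrices (hypothetical toy values in the demo below).
    """
    q = matmul(x, w_q)  # queries: what each token is looking for
    k = matmul(x, w_k)  # keys: what each token offers for matching
    v = matmul(x, w_v)  # values: the content that gets mixed together
    d_k = len(k[0])
    k_t = [list(col) for col in zip(*k)]  # transpose of k
    scores = matmul(q, k_t)               # token-to-token similarity
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v), weights

# Toy input: two tokens with 2-dimensional embeddings (assumed values).
identity = [[1.0, 0.0], [0.0, 1.0]]
x = [[1.0, 0.0], [0.0, 1.0]]
output, weights = self_attention(x, identity, identity, identity)
```

Each row of `weights` sums to 1 and says how much one token attends to every token in the sequence; `output` is the corresponding weighted mix of value vectors.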

Explained it very well, and the use of relevant examples is awesome. Thank you so much. Your work is very much appreciated.

Fantastic video. Explaining this much detail in such a short time is incredible. Thank you.

Thank you for the kind words! 🤗 We're glad you found this video helpful!

8:15 What does it mean? There are 12 and 24 layers of transformers in BERT, and then there is the Transformer with 6... 6 layers of encoder/decoder, right? Not the same layers as BERT. The layers in BERT are transformers, but the Transformer itself has 6 encoders/decoders.

Since they're referencing the original transformer architecture, the 6 layers refer to the encoder part of the transformer (encoder has six layers in the original paper: 2x self-attention, 2x feed-forward and 2x normalization layer after attention and feed-forward). BERT is an encoder-only transformer model

At 2:45 she says the original research paper on the Transformer had 6 encoders stacked on top of each other... At 8:12, by saying `6 layers in the original transformer`, I think she means 6 encoders on top of each other. They should not have used "layers" there because they already used that word differently to describe the two layers within each encoder (which are the self-attention and feedforward layers).

Also, "transformer" doesn't denote a single encoder or a single encoder-decoder pair. Rather, it represents the whole model with all its encoders and decoders... So BERT is an advanced type of transformer model which has more encoder layers than the original transformer. So each layer within BERT can't be called a transformer.

[UPDATE] the layers within each encoder such as self-attention and feedforward are actually called sublayers... So it makes sense `layers` in a transformer refers to the stack of encoders and each layer within an encoder is called a `sublayer`.
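The terminology in this thread can be laid out as a small sketch. It assumes the layer counts mentioned above (6 encoder layers in the original Transformer, 12 and 24 in the two BERT sizes) and models only the naming, not the actual computation; the class names `EncoderLayer` and `EncoderStack` are hypothetical and chosen just for illustration.

```python
from dataclasses import dataclass, field

@dataclass
class EncoderLayer:
    # Each encoder layer contains two sublayers, as described in the thread.
    sublayers: tuple = ("self-attention", "feed-forward")

@dataclass
class EncoderStack:
    name: str
    num_layers: int
    layers: list = field(init=False)

    def __post_init__(self):
        # A "layer" of the model is one whole encoder block in the stack.
        self.layers = [EncoderLayer() for _ in range(self.num_layers)]

    def total_sublayers(self):
        return sum(len(layer.sublayers) for layer in self.layers)

# Layer counts as quoted in the comments above.
original = EncoderStack("Transformer encoder (original paper)", 6)
bert_base = EncoderStack("BERT Base", 12)
bert_large = EncoderStack("BERT Large", 24)

for stack in (original, bert_base, bert_large):
    print(f"{stack.name}: {stack.num_layers} layers, "
          f"{stack.total_sublayers()} sublayers")
```

Read this way, "12 layers" for BERT means 12 stacked encoder blocks, each with its own self-attention and feed-forward sublayers.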

How is the "Next Sentence Prediction" task abbreviated as NPS? Can you elaborate? 9:29

Thanks for the video explaining Transformer models and BERT. Good summary and high-level description. Small nitpick: @9:03, the abbreviation for "next sentence prediction" should be NSP, but the slide has NPS.

Well explained ❤

1:08 Wasn't LSTM proposed in 1997?

A Lifesaver...

joss

Confusing

6:44

Did a generative AI model make this video?

All the information is probably correct, but:

1. It is very much NOT clear how they connect to each other

2. There is great emphasis on irrelevant, highly technical flows, while leaving out any description of the idea behind this structure, its motivation and its advantages. Explain HOW it solves the problems.

This video is providing quite a lot of useless information.

For those who are familiar with the subject, it is way too basic; and to those who are not, it ‘gives’ nothing. No understanding whatsoever.

If you at Google use AI to generate your videos, at least review them before publishing.

Moreover, her voice is like a voice of a typing machine.

And the stress in some words is off: "percentage", "component", "develop", and some others are said with the wrong stress.

Why are all videos explaining transformers so frocking boring and uneducative!!!

It's such a bad explanation... No examples, just reading the script. I get totally lost listening to it.