MVGrasp: Real-Time Multi-View 3D Object Grasping in Highly Cluttered Environments


- Published: 10 Sep 2024

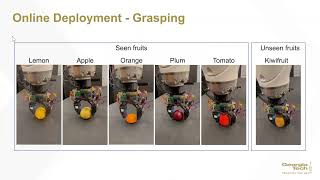

- In this paper, we propose a multi-view deep learning approach for robust object grasping in human-centric domains. Our approach takes a point cloud of an arbitrary object as input and generates orthographic views of that object. These views are then used to produce pixel-wise grasp predictions for each object. We train the model end-to-end on a synthetic object-grasping dataset and test it on both simulated and real-world data without any further fine-tuning. To evaluate the proposed approach, we performed extensive experiments in four everyday scenarios: isolated objects, packed items, piles of objects, and highly cluttered scenes. Experimental results show that our approach performs well in all simulated and real-robot scenarios: it outperforms previous state-of-the-art approaches and achieves a success rate above 90% in every simulated and real scenario except the pile of objects, where it reaches 82%. Additionally, our method demonstrated reliable closed-loop grasping of novel objects across a variety of scene configurations.
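The first step of the pipeline, turning an object's point cloud into orthographic views, can be illustrated with a minimal sketch. The abstract does not specify the number of views, image resolution, or pixel encoding, so everything below is an assumption: three axis-aligned views (hypothetically named `top`, `front`, `side`), a uniform rescaling of the cloud into the unit cube with a small margin, and a per-pixel z-buffer that stores proximity to the camera (0.0 marks empty pixels). The helper names `normalize` and `orthographic_views` are hypothetical and are not taken from MVGrasp's code.

```python
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float, float]
Image = List[List[float]]

# Hypothetical view set (an assumption, not MVGrasp's configuration):
# (u axis, v axis, depth axis, is the camera on the + side of the depth axis?)
VIEWS = {
    "top": (0, 1, 2, True),     # camera above the object, looking along -z
    "front": (0, 2, 1, False),  # camera at -y, looking along +y
    "side": (1, 2, 0, True),    # camera at +x, looking along -x
}


def normalize(points: Sequence[Point], padding: float = 0.1) -> List[Point]:
    """Center the cloud and scale it uniformly into the unit cube with a margin."""
    lo = [min(p[i] for p in points) for i in range(3)]
    hi = [max(p[i] for p in points) for i in range(3)]
    center = [(a + b) / 2 for a, b in zip(lo, hi)]
    extent = max(b - a for a, b in zip(lo, hi)) or 1.0  # avoid division by zero
    scale = extent * (1.0 + padding)  # margin keeps coordinates strictly inside (0, 1)
    return [tuple((p[i] - center[i]) / scale + 0.5 for i in range(3)) for p in points]


def orthographic_views(points: Sequence[Point], resolution: int = 32) -> Dict[str, Image]:
    """Render one depth-style image per view; 0.0 marks empty pixels.

    Each occupied pixel stores proximity to the camera (larger = nearer),
    and only the nearest point per pixel is kept (a simple z-buffer).
    The row index grows with the view's v axis (no vertical flip).
    """
    cloud = normalize(points)
    images: Dict[str, Image] = {}
    for name, (u, v, w, camera_on_positive_side) in VIEWS.items():
        img = [[0.0] * resolution for _ in range(resolution)]
        for p in cloud:
            col = min(int(p[u] * resolution), resolution - 1)
            row = min(int(p[v] * resolution), resolution - 1)
            proximity = p[w] if camera_on_positive_side else 1.0 - p[w]
            img[row][col] = max(img[row][col], proximity)  # nearest point wins
        images[name] = img
    return images


if __name__ == "__main__":
    # Two points stacked along z: in the top view they fall on the same pixel,
    # and the z-buffer keeps the upper one.
    views = orthographic_views([(0.0, 0.0, 0.0), (0.0, 0.0, 1.0)], resolution=4)
    for name, img in views.items():
        print(name, img)
```

In a learned pipeline, images like these would be passed to a network that outputs pixel-wise grasp maps; that network is not sketched here. The uniform (rather than per-axis) scaling preserves the object's aspect ratio across views, which is one reasonable choice, not necessarily the one the authors made.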
