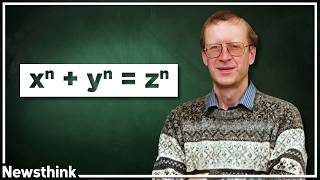

Spinors for Beginners 6: Pauli Vectors and Pauli Matrices


- Published: 9 Jul 2024

- Full spinors playlist: • Spinors for Beginners

Leave me a tip: ko-fi.com/eigenchris

Powerpoint slide files + Exercise answers: github.com/eigenchris/MathNot...

Sources:

Spinors in 3D from Wikipedia: en.wikipedia.org/wiki/Spinors...

An Introduction to Spinors: arxiv.org/pdf/1312.3824.pdf

Spinors for Everyone PDF: hal-cea.archives-ouvertes.fr/...

A Child's Guide to Spinors PDF: www.weylmann.com/spinor.pdf

0:00 Introduction

1:02 Pauli Matrix Properties

5:48 Pauli Vector Reflections

11:11 Pauli Vector Rotations

17:24 Proof of double-sided SU(2) formula

23:28 Form of SU(2) matrices, parameter counting

25:32 Summary

I like how you right off the bat show that Pauli matrices are like unit vectors endowed with the special property that you can actually multiply them (and that therefore squaring a Pauli vector amounts to finding the magnitude squared). Maybe it's just me but I think it's very important that the Pauli matrices are introduced as initially abstract objects, that can be cast in a more familiar representation of matrices, lest I start to look way too deep into what it means that 2×2 matrices are now our vectors.

I'll get to the more abstract approach when I get to Clifford Algebra. For now I'll be treating them as matrices.
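The claim that squaring a Pauli vector gives its squared magnitude (times the identity) can be checked numerically. The following is a minimal sketch in pure Python, assuming the usual matrix forms of σx, σy, σz; the helper names `mul` and `pauli_vector` are made up for this illustration and are not from the video.

```python
# Pauli matrices as nested tuples (pure Python, no NumPy).
SX = ((0, 1), (1, 0))
SY = ((0, -1j), (1j, 0))
SZ = ((1, 0), (0, -1))

def mul(a, b):
    """Product of two 2x2 matrices."""
    return tuple(
        tuple(sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2))
        for i in range(2)
    )

def pauli_vector(x, y, z):
    """Hypothetical helper: build x*SX + y*SY + z*SZ."""
    return tuple(
        tuple(x * SX[i][j] + y * SY[i][j] + z * SZ[i][j] for j in range(2))
        for i in range(2)
    )

v = (1.0, 2.0, 2.0)                      # arbitrary example vector
V = pauli_vector(*v)
V_squared = mul(V, V)                    # should be |v|^2 times the identity
length_squared = sum(c * c for c in v)   # ordinary squared length

print(V_squared)
print(length_squared)
```

The off-diagonal entries of `V_squared` cancel because the different sigma matrices anti-commute, which is the property the comment is pointing at.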

11:53 this blew my mind beyond repair; not that rotation can be viewed as two reflections, but the angle doubling relationship here that connects back to the behaviour of spinors
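The angle-doubling relationship can be tried out directly: reflecting along two unit vectors that are θ/2 apart should rotate a vector by θ. This is a rough sketch, assuming the reflection rule V → -U V U from the video; the helper names and the sample numbers are illustrative only.

```python
import math

SX = ((0, 1), (1, 0))
SY = ((0, -1j), (1j, 0))
SZ = ((1, 0), (0, -1))

def mul(a, b):
    """Product of two 2x2 matrices."""
    return tuple(
        tuple(sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2))
        for i in range(2)
    )

def pauli_vector(x, y, z):
    """Hypothetical helper: build x*SX + y*SY + z*SZ."""
    return tuple(
        tuple(x * SX[i][j] + y * SY[i][j] + z * SZ[i][j] for j in range(2))
        for i in range(2)
    )

def components(m):
    """Read (x, y, z) back out of [[z, x - iy], [x + iy, -z]]."""
    lower_left = complex(m[1][0])
    return (lower_left.real, lower_left.imag, complex(m[0][0]).real)

def reflect(u, v):
    """Reflect v along the unit vector u: v -> -u v u."""
    return tuple(tuple(-c for c in row) for row in mul(mul(u, v), u))

theta = math.radians(60)                 # desired rotation angle
U1 = pauli_vector(1, 0, 0)               # first reflection: along the x-axis
U2 = pauli_vector(math.cos(theta / 2), math.sin(theta / 2), 0)  # theta/2 away

x, y, z = 1.0, 2.0, 3.0
rotated = components(reflect(U2, reflect(U1, pauli_vector(x, y, z))))
expected = (
    x * math.cos(theta) - y * math.sin(theta),   # ordinary xy-plane rotation
    x * math.sin(theta) + y * math.cos(theta),
    z,
)
print(rotated, expected)
```

The mirrors are only 30° apart here, yet the vector turns by the full 60°, which is the half-angle that later shows up in the spinor behaviour.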

I've struggled for so long to understand it all, but thanks to you it's all falling into place now. Thank you so much for the videos.

saved my day! thank you! Straight direct to the point. actually explaining something. and fast, my brain stays focused and gets happy 😁😊

I'm loving how comprehensive this series is

Babe wake up a new eigenchris video just dropped

Very clear interpretation with tone posed and pitched with clarity and pace moderate for voice auditory lobe reception and hippocampus formation spatial short and long term memory transition. All well practiced. And the content is logical. Recommend more Conceptualization. Since computation must lead to a Concept. A La Alex Grothendieck. Du boir Marie.

in 20 years i have not found a better explanation on spinors than this video series. you break it down to the most crucial properties, and from what i get you leave all the irrelevant rest. at least i really have the feeling i understand step by step why the spin is formulated the way it is. (SO(3)->SU(2)->Paulivector->Spinor). i come back at some of your videos and watch them again. i wish you many many more subscribers. there are very very few channels as didactic as this.

I discovered for myself that the Pauli Matrices are not as 'arbitrary' as they may seem when I ended up rederiving them from scratch.

I had been exploring geometric algebras (clifford algebras) after being introduced to them by sudgylacmoe's great video "a swift introduction to geometric algebra", but found remembering the entirely new algebraic rules a little confusing. However, I had remembered that the imaginary unit 'i' can be represented by a 2x2 matrix (and obviously the identity matrix acts like the real unit '1'), so wondered whether or not there would be 2x2 matrices that acted like 2D G.A.'s 'x' and 'y' vectors. I reasoned there could be, since a 2x2 matrix has four degrees of freedom -- only two of which were used by '1' and 'i'. Indeed, after solving the relevant systems of equations (xy = -yx and x^2 = y^2 = 1), I found a pair that worked! (These turned out to be -σ_z and σ_x later.)

I kept exploring past that, trying to find a basis for 3D G.A., which at first led me down the futile path of 2x2x2 matrices (which have the same issue as vectors, in that multiplication either bumps you up a dimension (outer product) or down one (inner product)). However, 3D G.A. has twice as many basis elements as 2D G.A. (1, x, y, z, xy, yz, xz, & xyz compared to just 1, x, y, & xy), so if I could somehow "augment" the 2x2 matrices I found for 2D G.A. with a 2nd degree of freedom for each term, that would work! Since complex numbers are an algebra that is 2D but also 'plays nicely' (often more nicely than the reals), I checked if 2x2 complex matrices worked and indeed they did! I noticed that they looked similar to the Pauli Matrices, and they turned out to be equivalent!

(I had labelled x = -σ_z, y = σ_x, and z = -σ_y)
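The basis described in this comment can be checked against the two defining rules it mentions (each element squares to 1, distinct elements anti-commute). A minimal sketch, assuming the standard Pauli matrices; `X`, `Y`, `Z` follow the commenter's labelling and the helper functions are invented for the illustration.

```python
SX = ((0, 1), (1, 0))
SY = ((0, -1j), (1j, 0))
SZ = ((1, 0), (0, -1))
I2 = ((1, 0), (0, 1))

def mul(a, b):
    """Product of two 2x2 matrices."""
    return tuple(
        tuple(sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2))
        for i in range(2)
    )

def add(a, b):
    return tuple(tuple(p + q for p, q in zip(ra, rb)) for ra, rb in zip(a, b))

def neg(a):
    return tuple(tuple(-c for c in row) for row in a)

def is_zero(a):
    return all(abs(c) < 1e-12 for row in a for c in row)

# The commenter's labelling: x = -sigma_z, y = sigma_x, z = -sigma_y.
X, Y, Z = neg(SZ), SX, neg(SY)

squares_to_one = all(is_zero(add(mul(e, e), neg(I2))) for e in (X, Y, Z))
anticommute = all(
    is_zero(add(mul(a, b), mul(b, a))) for a, b in ((X, Y), (Y, Z), (Z, X))
)
XY = mul(X, Y)   # real 2x2 matrix that behaves like the imaginary unit

print(squares_to_one, anticommute, XY)
```

With this labelling the product `XY` comes out as the real matrix ((0, -1), (1, 0)), a 90° rotation matrix that squares to minus the identity, consistent with the comment's remark that 'i' can be represented by a 2x2 matrix.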

I think it's amazing how these seemingly 'arbitrary' matrices actually appear quite naturally when exploring the maths involved in 3D space. I am by no means a professional mathematician, so the fact I could stumble across them entirely on my own shows how fundamental they actually are!

P.s. This series has been really awesome so far! I've learnt a lot, and my exploration of other subjects (such as G.A., mentioned here) has been helped a lot by the added context and detail you've presented! Looking forward to what comes next (especially when you get to the Clifford Algebra perspective, as that will probably inspire my explorations even further)!

Edits: Small typos and clarifying the basis I found.

It's pretty amazing that you were able to come up with them on your own like that. In this video I'm sort of handing them to the viewer on a silver platter, so they probably seem a bit mysterious, but I'll be taking an approach similar to what you mentioned for introducing them again in the context of Clifford Algebras.

Hi. Good for you. It's great when the self-taught achieve something on their own, demonstrating that math may be the most "democratic" field of study there is, needing just pencil and paper.

Also, for the weird "rules", just think that Clifford took Grassmann algebra and quaternion algebra, set the square of each basis vector equal to 1, and that's it. He solved the awkward geometry of the quaternions, which is hyperbolic, and made it Euclidean for vectors and hyperbolic for bivectors.

Cool. I remember kind of coming up with Clifford Algebra when I didn’t know it was called that. After learning calc 3 and linear algebra, I noticed that to define a surface integral over an arbitrary m-dimensional “hyper surface” embedded in an n-dimensional Euclidean space, I needed to calculate the area of a “hyper-parallelogram” with vectors as sides. I just wrote pseudo-determinants of funny matrices with unit vector symbols in some rows and scalars in the others. I later discovered the result was called a wedge product. To see that the magnitude of the wedge is a volume you just do something like Gaussian elimination to make an orthogonal wedge.

Okay. I realized this was not a full Clifford Algebra but a differential form which is a sub-algebra of a Clifford Algebra. But the idea of building up little hyper-parallelograms and being able to get the hyper-volume by doing a self-inner-product is infinitely better than the silly cross product that only works when n is exactly 3. Anyways, building up hyper-spheres or hyper-tori and finding higher dimensional volumes and surface areas is an interesting project.

I'm in great debt of yours. Your videos helped me a lot. Can't Thank you enough.

thank you for teaching everyone the negative sandwich operation we will be forever grateful

You might like videos 11 and 12 in this series. They are about Clifford Algebras. A lot of the same stuff here, but with no matrices. Just symbols.

So many things here are reminding me of geometric algebra. Especially that sandwich product for reflections!

Yup. I go over all this again in the language of geometric algebra in videos 11-15, which I'm still working on. (Making #14 now.)

@@eigenchris I feel so dumb right now. I JUST remembered they’re Clifford algebras. lol

my brain is not big enough to comprehend this fully. i've been watching this video for the past two weeks trying to understand, but alas i am not comfortable enough with linear algebra and complex numbers to throw them together so casually. gotta study up, i hope i can understand this video and the rest of the series soon.

Yeah, this assumes pretty good familiarity with both complex numbers and linear algebra. Are there any particular questions you have, or do you think you just need to study more?

@@eigenchris absolutely just need to study more myself, the series is fantastic. thanks mate.

I love how approachable this series is. I have an undergraduate degree in mathematics, and feel like I have more than enough background to fully understand what you are doing without any experience with the physics.

I love this series. Thanks Chris

Thanks for all your work! All very educative.

The plot thickens. Thank-you!!!

Thanks for the videos! I've been interested in spinors for a while but haven't managed to get my head around them previously. Now with this series they are starting to make sense. I love your attention to detail.

Thank you soooooo much for making such a clear video, I can't wait to see the next one. This series of videos resolved the doubts I had when I was an undergraduate student in the physics department.

Very pretty topic, very informational video!

I disagree that the calculations get tedious if you only work with the abstract vectors rather than the matrices. After all, it just ends up as exp(θ/2 σ_x σ_y), since it's possible to show, using the algebraic properties covered, that (σ_x σ_y)² = -𝟙. It is also just very satisfying watching the limit definition of the exponential, when applied to _these objects,_ slowly increase the number of iterations.
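The limit definition of the exponential mentioned here can be watched converging numerically. This is only a sketch: it assumes the matrix form of σxσy, uses the product (I + (θ/2n) σxσy)^n as the limit definition, and compares against cos(θ/2) I + sin(θ/2) σxσy; the function names are made up for the example.

```python
import math

SX = ((0, 1), (1, 0))
SY = ((0, -1j), (1j, 0))
I2 = ((1, 0), (0, 1))

def mul(a, b):
    """Product of two 2x2 matrices."""
    return tuple(
        tuple(sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2))
        for i in range(2)
    )

B = mul(SX, SY)   # sigma_x sigma_y, which squares to minus the identity

def limit_exp(m, angle, n):
    """Approximate exp(angle * m) as (I + (angle / n) * m)^n."""
    step = tuple(
        tuple(I2[i][j] + (angle / n) * m[i][j] for j in range(2))
        for i in range(2)
    )
    result = I2
    for _ in range(n):
        result = mul(result, step)
    return result

def max_error(a, b):
    return max(abs(a[i][j] - b[i][j]) for i in range(2) for j in range(2))

half = (math.pi / 2) / 2      # theta / 2 for theta = 90 degrees
closed_form = tuple(
    tuple(math.cos(half) * I2[i][j] + math.sin(half) * B[i][j] for j in range(2))
    for i in range(2)
)
errors = [
    max_error(limit_exp(B, half, n), closed_form)
    for n in (1, 10, 100, 1000, 10000)
]
print(errors)   # shrinks as the number of iterations grows
```

The error drops by roughly a factor of ten each time `n` grows tenfold, so the product converges slowly but steadily to the cosine and sine form.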

Regardless, this was a pretty good thinly veiled introduction to Clifford Algebra. I have some personal gripes regarding "reflecting along a vector" rather than "reflecting across a plane," but they're just different geometric interpretations of the same algebra. The latter extends better when you have mirrors that don't pass through the origin or aren't flat, but for now that doesn't matter. It also more clearly shows that reflecting across a line is the same operation as rotating 180°, since the product of two planes is a rotation around their intersection, but if they're perpendicular, then that intersection is just a pure line.

I love you eigenchris

Excellent!!! Thank you!!!!

I really appreciate it thanks man

You really are godsent

Hmmmm, this actually reminds me of rotating vectors with unit quaternions, and it even makes sense since quaternions have many similar properties

Unit quaternions can be made to be equivalent to SU(2) matrices. I believe the equivalence is this, (although I may have some signs wrong): i = -σyσz, j = -σzσx, k = -σxσy.
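Since the reply is unsure about the signs, here is a quick way to test them: with i = -σyσz, j = -σzσx, k = -σxσy, the quaternion rules i² = j² = k² = ijk = -1 should hold. A small sketch in pure Python, assuming the standard Pauli matrices (variable names `qi`, `qj`, `qk` are just for this example):

```python
SX = ((0, 1), (1, 0))
SY = ((0, -1j), (1j, 0))
SZ = ((1, 0), (0, -1))
MINUS_ONE = ((-1, 0), (0, -1))

def mul(a, b):
    """Product of two 2x2 matrices."""
    return tuple(
        tuple(sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2))
        for i in range(2)
    )

def neg(a):
    return tuple(tuple(-c for c in row) for row in a)

def close(a, b, tol=1e-12):
    return all(abs(a[i][j] - b[i][j]) < tol for i in range(2) for j in range(2))

# Candidate quaternion units built from products of two Pauli matrices.
qi = neg(mul(SY, SZ))
qj = neg(mul(SZ, SX))
qk = neg(mul(SX, SY))

checks = {
    "i*i": mul(qi, qi),
    "j*j": mul(qj, qj),
    "k*k": mul(qk, qk),
    "i*j*k": mul(mul(qi, qj), qk),
}
all_minus_one = all(close(m, MINUS_ONE) for m in checks.values())
ij_is_k = close(mul(qi, qj), qk)
print(all_minus_one, ij_is_k)
```

Both checks pass with these signs, so the stated correspondence reproduces Hamilton's rules.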

Thank you! The fact that you don’t leave out any details makes this much more satisfying than anything else on the internet, yet still easier to follow than a typical text book. I feel like I need a refresher on linear algebra though. I know that 2 and 3 dimensional stuff can mostly be found by just plugging and chugging with algebra, but I know there are way more elegant proofs for arbitrary numbers of dimensions. I just never got to the later half of the course covering complex valued matrices. I don’t know if I can even find my old book. :(.

I don't think there's too much to know with complex numbers. As long as you know that i^2 = -1, and the complex conjugate (a+ib)* = a-ib, you're good. The Hermitian conjugate (dagger) is just the complex conjugate and the matrix transpose done together.

@@eigenchris I’m talking about the places where you change the order of a product of complex matrices, or distribute operations - like taking the determinant or taking the squared magnitude - over a product.

@@marshallsweatherhiking1820 Ah, right. Yeah. I misread your comment. Some review of linear algebra is probably good. 3blue1brown has a series called "Essence of Linear Algebra" you can look at. I think it's 10 videos or so.

@@eigenchris Thanks! I’ll check that out. I’ll just say I love the style of your videos, especially this series. I really hope you keep going!

You introduce every concept with a really concrete intuitive approach, but also fill in all the details to make it rigorous. This is the style I learn things. I really hate when people leave logical gaps or just hand-wave or gloss over details. It makes the videos longer and takes more effort, but also makes everything more satisfying as a whole. If some math is boring/tedious or something I already know I can fast-forward. Its great!

Edit: Ignore below, I forgot that det(kA) = k^n*det(A).

-I'm confused at --25:21-- about the double-covering rotations with +-A. It seems to me that either we fix det(A)=+1 by considering SU(2) in which case we never pick -A to do a rotation implying a single covering of SO(3), or we let det(A)=e^(i*t) by considering U(2) in which case rotations SO(3) are covered by an infinite amount of U matrices with unit complex determinants. Neither case gives a double covering. It seems to me like we would need to say "ignore all global phases, except global phases of -1".-
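The point in the edit, det(kA) = k^n det(A), means that for 2x2 matrices det(-A) = (-1)² det(A) = det(A), so A and -A can both sit in SU(2). A short sketch, assuming the general SU(2) form with entries a and b where |a|² + |b|² = 1; the particular values and helper names are arbitrary choices for the illustration.

```python
SX = ((0, 1), (1, 0))
SY = ((0, -1j), (1j, 0))
SZ = ((1, 0), (0, -1))

def mul(a, b):
    """Product of two 2x2 matrices."""
    return tuple(
        tuple(sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2))
        for i in range(2)
    )

def dagger(a):
    """Hermitian conjugate: transpose plus complex conjugate."""
    return tuple(tuple(a[j][i].conjugate() for j in range(2)) for i in range(2))

def det(a):
    return a[0][0] * a[1][1] - a[0][1] * a[1][0]

def neg(a):
    return tuple(tuple(-c for c in row) for row in a)

def close(a, b, tol=1e-12):
    return all(abs(a[i][j] - b[i][j]) < tol for i in range(2) for j in range(2))

def pauli_vector(x, y, z):
    return tuple(
        tuple(x * SX[i][j] + y * SY[i][j] + z * SZ[i][j] for j in range(2))
        for i in range(2)
    )

def transform(m, v):
    """Double-sided transformation v -> m v m^dagger."""
    return mul(mul(m, v), dagger(m))

a, b = 0.6 + 0j, 0.8j                        # |a|^2 + |b|^2 = 1
A = ((a, b), (-b.conjugate(), a.conjugate()))
minus_A = neg(A)

V = pauli_vector(1.0, 2.0, 3.0)
same_rotation = close(transform(A, V), transform(minus_A, V))
print(det(A), det(minus_A), same_rotation)
```

Both determinants come out as 1 and the two matrices act identically on the Pauli vector, which is the two-to-one correspondence with SO(3).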

Thank you for the video, I have a question: at 10:40 we note that Vperp U = -U Vperp. I didn't have an immediate intuition, but after writing it down, I assume we define two vectors U and V to be perpendicular in the usual sense with the standard dot product, so that ux vx + uy vy + uz vz = 0. With that in mind, expanding U Vperp will only leave us with the antisymmetric mixed terms, which will pick up a minus sign when we swap the order of multiplication. Hence U Vperp = -Vperp U. Is this correct, or is there an easier way to see this?

Edit: Oh sorry, you show it right after it!
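The reasoning in this question generalises slightly: for any two Pauli vectors, UV + VU = 2(u·v) I, so the products anti-commute exactly when the dot product vanishes. A minimal numeric check, with sample vectors chosen only for illustration and helper names that are not from the video:

```python
SX = ((0, 1), (1, 0))
SY = ((0, -1j), (1j, 0))
SZ = ((1, 0), (0, -1))

def mul(a, b):
    """Product of two 2x2 matrices."""
    return tuple(
        tuple(sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2))
        for i in range(2)
    )

def add(a, b):
    return tuple(tuple(p + q for p, q in zip(ra, rb)) for ra, rb in zip(a, b))

def pauli_vector(x, y, z):
    return tuple(
        tuple(x * SX[i][j] + y * SY[i][j] + z * SZ[i][j] for j in range(2))
        for i in range(2)
    )

def dot(p, q):
    return sum(s * t for s, t in zip(p, q))

def anticommutator(p, q):
    """UV + VU for the Pauli vectors built from p and q."""
    P, Q = pauli_vector(*p), pauli_vector(*q)
    return add(mul(P, Q), mul(Q, P))

def is_scalar_matrix(m, value, tol=1e-9):
    """True if m is (approximately) value times the identity."""
    return all(
        abs(m[i][j] - (value if i == j else 0)) < tol
        for i in range(2) for j in range(2)
    )

u = (0.0, 0.6, 0.8)        # unit vector
v_perp = (3.0, 4.0, -3.0)  # chosen so that u . v_perp = 0
w = (1.0, 2.0, 3.0)        # not perpendicular to u

perp_case = is_scalar_matrix(anticommutator(u, v_perp), 2 * dot(u, v_perp))
general_case = is_scalar_matrix(anticommutator(u, w), 2 * dot(u, w))
print(perp_case, general_case, dot(u, v_perp))
```

When the dot product is zero the anticommutator vanishes, so -U V⊥ U = V⊥ U U = V⊥ and the perpendicular part survives the reflection unchanged.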

New spinors!

When you talk about the Hermitian conjugate at 2:58: for mathematicians, * is usually the dagger. We use the top bar for conjugation.

Yes. I'm using the notation usually used in physics and quantum mechanics.

Re 18:58 "We can get it as a hint to assume" does not mean "Hence we prove that"

thanks

The Pauli matrices span the Lie algebra su(2), and you showed they make mirror images when a Pauli vector is negative-conjugated with them. The elements of SU(2), the corresponding Lie group, act on the spinors on the left and right sides and rotate them to generate the spinor space. From math, su(2) is the tangent space of SU(2) at the neutral element 1. How are su(2) and SU(2) connected in physics? Why is the Dirac equation formulated with the basis of su(2) (and I2 in the gamma matrices) and not with elements of SU(2)? I know that mathematically I need elements in a tangent space to go with the partial derivatives, like in the chain rule. I am looking for a physical explanation, if you please. Thank you.

hmmm but why can we define a pauli vector at all, and expect the properties of 3D real vector to be carried over to it? maybe because the dimension of the space matches the number of pauli matrices (3)? can this also work with square matrices of different dimensions?

🎯 Key Takeaways for quick navigation:

00:00 🧠 The video shifts focus from physics to a purely mathematical view of spinors in both 3D space and 4D spacetime.

00:26 🎭 The video explores how to rewrite 3D vectors as 2x2 matrices called Pauli vectors and mentions that Pauli vectors can be factored into Pauli spinors.

01:24 🔄 Pauli matrices, or Sigma matrices, are introduced. They are essential for performing geometric transformations like reflections and rotations on vectors.

01:49 ✅ Sigma matrices have specific properties such as zero trace, Hermitian nature, and squaring to the identity matrix.

02:40 🗡️ The Hermitian conjugate of a matrix is defined, important for complex-valued matrices.

03:35 💡 All Sigma matrices square to the identity matrix, a critical property in their utility.

04:28 ⚖️ Sigma matrices have the property of anti-commutation, which leads to certain algebraic rules.

05:52 📐 Introduction to the Pauli Vector form, which provides an alternative way to represent a 3D vector using Sigma matrices.

06:48 📏 Pauli vectors also exhibit traceless and Hermitian properties.

07:42 🛡️ Conjugation operation by Sigma matrices is introduced, which flips signs of the components in a specific manner.

09:03 🎯 Demonstrates that negative conjugating a Pauli Vector by Sigma Z will reflect the vector along the Z-axis.

10:02 🧭 Pauli vectors can be reflected in any direction using a unit vector \( U \). Reflection is realized by negative conjugating the Pauli vector \( V \) with \( U \).

10:57 🔄 Reflections in vector spaces are the building blocks for rotations, which can be seen as a pair of reflections.

11:52 📐 If the angle of rotation is \( \theta \), the angle between the mirrors used for reflection is \( \theta/2 \).

12:48 🎯 Using Pauli matrices like \( \sigma_x \) and \( \sigma_y \), you can create rotations in the XY-plane.

14:39 🌀 The video introduces a formula for rotating vectors in the XY plane, taking into account the angle \( \theta \) for rotation.

16:03 🧪 2-sided transformations with complex exponentials give results consistent with 3D rotation matrices.

16:59 📚 The transformations use Special Unitary 2x2 matrices, or SU(2) matrices, which are unitary and have determinants of 1.

17:54 📏 The determinant of a Pauli vector, which is negative of the vector's squared length, does not change after rotation.

19:40 🌐 The determinant of matrix \( A \) can be any complex number on the unit circle, enabling a wide range of transformations.

20:04 🔄 Multiple A matrices can lead to the same transformation due to their relation with different complex phase factors.

20:33 🎚️ The determinant of a specific A matrix can be forced to 1 by using a phase factor, eliminating the redundancies in the phase.

21:01 ➕ By manipulating the determinant and phase, A matrix is confirmed to have a determinant of +1.

21:27 🚫 Due to Pauli Vectors having zero trace, the result of the transformation also has zero trace.

21:55 🔍 A-dagger * A must equal the identity matrix, confirming that A-dagger equals A-inverse.

22:23 🎛️ A falls under the category of 2x2 UNITARY matrices, U(2), and 2x2 SPECIAL UNITARY matrices, SU(2).

23:15 📐 Rotations of Pauli vectors are performed using double-sided matrix transformations with SU(2) matrices.

23:39 🔄 All SU(2) matrices follow a specific form where the determinant equals 1.

25:03 ✌️ Every 3x3 rotation matrix in SO(3) has two corresponding matrices in SU(2) that perform the same rotation.

25:33 🌀 SU(2) matrices are used for geometric transformations on Pauli vectors, including reflections and rotations.

26:02 🔄 Pauli spinors rotate half as much as vectors and require two full rotations to return to their starting position.
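Several of the takeaways above (the double-sided rotation, determinant 1, the preserved determinant of the Pauli vector, and the half-angle behaviour) can be checked in one go. The sketch below assumes the xy-plane rotation matrix has the form cos(θ/2) I - sin(θ/2) σxσy on the left with its Hermitian conjugate on the right; `rotor_xy` and the other helper names are invented for this example.

```python
import math

SX = ((0, 1), (1, 0))
SY = ((0, -1j), (1j, 0))
SZ = ((1, 0), (0, -1))
I2 = ((1, 0), (0, 1))

def mul(a, b):
    """Product of two 2x2 matrices."""
    return tuple(
        tuple(sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2))
        for i in range(2)
    )

def dagger(a):
    return tuple(tuple(a[j][i].conjugate() for j in range(2)) for i in range(2))

def det(a):
    return a[0][0] * a[1][1] - a[0][1] * a[1][0]

def pauli_vector(x, y, z):
    return tuple(
        tuple(x * SX[i][j] + y * SY[i][j] + z * SZ[i][j] for j in range(2))
        for i in range(2)
    )

def components(m):
    """Read (x, y, z) back out of [[z, x - iy], [x + iy, -z]]."""
    lower_left = complex(m[1][0])
    return (lower_left.real, lower_left.imag, complex(m[0][0]).real)

def rotor_xy(theta):
    """Assumed form: cos(theta/2) I - sin(theta/2) sigma_x sigma_y."""
    B = mul(SX, SY)
    c, s = math.cos(theta / 2), math.sin(theta / 2)
    return tuple(
        tuple(c * I2[i][j] - s * B[i][j] for j in range(2)) for i in range(2)
    )

A = rotor_xy(math.radians(90))
V = pauli_vector(1.0, 0.0, 2.0)
V_rot = mul(mul(A, V), dagger(A))        # double-sided transformation

rotated = components(V_rot)              # roughly (0, 1, 2)
full_turn = rotor_xy(2 * math.pi)        # roughly minus the identity
print(rotated, det(A), det(V), det(V_rot), full_turn)
```

A 360° turn of the vector corresponds to `full_turn`, which is approximately -I rather than I; only after 720° does the SU(2) matrix itself return to the identity.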

Do you have a PDF file that explains everything?

Great video, thank you! Many of these properties of Pauli matrices are triggering a long-lost memory of working with quaternions as an undergrad. Is there an isomorphism there or is my intuition off?

Your memory is perfectly fine; you are not crazy.

Quaternions were covered in this video without being named. Specifically, they were three products of 2 Pauli Matrices.

As the commenter above mentioned, the isomorphism is: i = -σyσz, j = -σzσx, k = -σxσy.

In quaternions, we think of "i" as rotating around the x-axis, but with Pauli matrices, we think of σyσz as rotating in the yz-plane. I'll probably make an optional "6.1" video where I go over this. But I'm not sure when I'll do it.

Also, a few videos down the line (may be video #11 or so), I'll start explaining how the quaternions and Pauli matrices are both examples of Clifford Algebras (also called Geometric Algebras).

@@eigenchris Those minus signs on each of the -bivectors- Pauli matrices look rather _sinister,_ don't they. 😉

@@eigenchris I am learning differential geometry now and the k-vectors are very interesting. So cant wait to see you set them into context!!

Hi Chris, do you plan on doing this in curvilinear coordinates at some point?

I'm not planning on it right now. I'm planning on talking about what the sigma matrices look like when you change basis in spin space, which might be somewhat relevant to that.

Is it obvious that just replacing that standard basis for R3 with the Pauli matrices "works" and preserves the structures of R3 that we want to preserve? I'm not even fully sure how to frame this question in a way that I'd know how to go about answering it, so I hope it makes sense

You can come up with 2x2 matrix versions of the standard 3D vector operations like the dot product and the cross product. If you look in the description, there's a link to a wikipedia page called "Spinors in Three Dimensions" that goes through it.
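One way to see the dot and cross products inside the matrix product is the identity UV = (u·v) I + i (u×v)·σ, which is a standard property of the Pauli matrices. The sketch below reads both products off a single matrix multiplication; the vectors and helper names are arbitrary choices made for the illustration.

```python
SX = ((0, 1), (1, 0))
SY = ((0, -1j), (1j, 0))
SZ = ((1, 0), (0, -1))

def mul(a, b):
    """Product of two 2x2 matrices."""
    return tuple(
        tuple(sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2))
        for i in range(2)
    )

def pauli_vector(x, y, z):
    return tuple(
        tuple(x * SX[i][j] + y * SY[i][j] + z * SZ[i][j] for j in range(2))
        for i in range(2)
    )

def components(m):
    """Read (x, y, z) back out of [[z, x - iy], [x + iy, -z]]."""
    lower_left = complex(m[1][0])
    return (lower_left.real, lower_left.imag, complex(m[0][0]).real)

u = (1, 2, 3)
v = (4, 5, 6)
P = mul(pauli_vector(*u), pauli_vector(*v))

# Scalar part: half the trace gives the dot product.
dot_from_matrix = complex(P[0][0] + P[1][1]).real / 2

# Remove the scalar part and divide by i; what is left is a Pauli vector
# whose components are the cross product.
W = tuple(
    tuple((P[i][j] - (dot_from_matrix if i == j else 0)) / 1j for j in range(2))
    for i in range(2)
)
cross_from_matrix = components(W)

print(dot_from_matrix, cross_from_matrix)
```

For these inputs the ordinary formulas give u·v = 32 and u×v = (-3, 6, -3), which is what the matrix product reproduces.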

Having watched videos and bought books about Clifford algebra, I can say the basis for the Pauli algebra has "Clifford" written all over it :o

Yes, they're basically just a matrix representation of Cl(3). I'll be covering Clifford Algebras starting in video 11 or so.

@@eigenchris Thanks man. Hey, can I ask what you think of taking expected values of a joint probability function conditioned by a function of the random vector, from a geometric perspective? I'm not so good at math, but just by intuition I made a formula for that problem. Maybe I can share it with you if you like.

@@rajinfootonchuriquen It's been a very long time since I've thought about probability theory... I'm not sure I'll be much use for sorting through it. You can feel free to share, but I can't promise I'll be very helpful.

@@eigenchris Thanks... it's more like geometry, really. Where can I share it with you?

@@rajinfootonchuriquen Sure.

There is quite a resemblence to Quaternions! When using a quaternion to rotate a 3D vector, there’s also a double-sided multiplication. Also, an angle-axis rotation in quaternion form has an angle halving part in its formula, and the formula resembles the part in this video a lot where there was cosine in the beginning and sine in the end of a formula.

What is the relation of SU(2) matrices and quaternions in 3D rotations? Is there a one-to-one mapping from one to the other? Are quaternions and spinors the same thing, or is one of those a sub-class of the other, or some other kind of relation?

Great video as always!

Yes. The one-to-one mapping is: i = -σyσz, j = -σzσx, k = -σxσy.

As I said in another comment, with quaternions, we think of "i" as rotating around the x-axis, but with Pauli matrices, we think of σyσz as rotating in the yz-plane. I'll probably make an optional "6.1" video where I go over this. But I'm not sure when I'll do it.

When I get to Clifford Algebras (may be video #11 or so), I'll explain how the Pauli matrices are really a Clifford Algebra in disguise. This includes the scalar 1, vectors σx σy σz, bivectors σyσz σzσx σxσy, and the trivector σxσyσz. The quaternions are the "even" elements of this algebra (the scalar and bivectors). SU(2) is what we call the "spin group" Spin(3), which is the set of rotations on a Clifford algebra. The Spin(3) group ends up being the unit quaternions, also called SU(2). We'll generalize this to spacetime with Spin(1,3), and arbitrary dimensions with Spin(n) and Spin(p,q).

I might be wrong, but at 12:12 the reflections are inverted in order. The upper text says "reflect along x-axis, then along y-axis" but what you show in your animation is first a reflection along the y-axis, then a reflection along the x-axis. The result is the same, but it's a little misleading I guess

When I say "reflect along the x-axis", I mean "reverse the x component", in other words, "treat the yz plane as a mirror".

@@eigenchris Oh I see, however, not gonna lie, that sounds pretty weird as a convention. Whenever you read "reflect along/over/across the (some) axis" in a book it usually means what I wrote in the previous comment, because "reversing the x component" does indeed mean "think about the y axis as a mirror", or again, "reflect along the y-axis" (not the x-axis). But I get it, sorry for bothering, I just thought it was a little off

@@andreapaolino5905 Yeah, I understand your confusion. The issue is, in higher dimensions like 4 or 5, specifying a "mirror" means you need a lot more variables. Like in 4D space with WXYZ axes, a mirror would be 3D, like the WYZ mirror. It's easier to just say "reflect along x-axis" instead of "reflect in WYZ mirror". This isn't too important now, but it will become more relevant when we get to higher dimensions in later videos.

@@eigenchris gotcha! thanks
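The convention discussed in this thread ("reflect along the x-axis" means "reverse the x component") and the remark that the order of the two reflections gives the same result can both be checked with the negative sandwich -σ V σ. A small sketch with an arbitrary sample vector; helper names are illustrative only.

```python
SX = ((0, 1), (1, 0))
SY = ((0, -1j), (1j, 0))
SZ = ((1, 0), (0, -1))

def mul(a, b):
    """Product of two 2x2 matrices."""
    return tuple(
        tuple(sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2))
        for i in range(2)
    )

def pauli_vector(x, y, z):
    return tuple(
        tuple(x * SX[i][j] + y * SY[i][j] + z * SZ[i][j] for j in range(2))
        for i in range(2)
    )

def components(m):
    """Read (x, y, z) back out of [[z, x - iy], [x + iy, -z]]."""
    lower_left = complex(m[1][0])
    return (lower_left.real, lower_left.imag, complex(m[0][0]).real)

def reflect(u, v):
    """Reflect v along u: v -> -u v u."""
    return tuple(tuple(-c for c in row) for row in mul(mul(u, v), u))

V = pauli_vector(1, 2, 3)

along_x = components(reflect(SX, V))                 # only x changes sign
x_then_y = components(reflect(SY, reflect(SX, V)))   # x first, then y
y_then_x = components(reflect(SX, reflect(SY, V)))   # y first, then x

print(along_x, x_then_y, y_then_x)
```

Both orders flip x and y while leaving z alone, which is a 180° rotation in the xy-plane. The order stops being irrelevant once the two reflection directions are no longer perpendicular.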

When is your next video going to be out?

Hopefully will be out by Sunday.

damn you, you couldnt state that i did not need to watch videos 1 to 5 for this video...

Sir Can you please explain algebraic geometry and give us a plan to study it and tell us what it is, its history, its applications and the requirements for studying it

He is going to talk about it in the next section, called Clifford Algebra. They're pretty much synonymous.

@@linuxp00 algebraic geometry is completely separate from clifford algebras (you’re thinking of geometric algebras which again are very different)

@@kashu7691 OK...

I don't know anything about algebraic geometry so I won't be making a series on it. Geometric algebra will be part of this series.

@@eigenchris Thank you sir I am excited to study geometric algebra with you

When you mentioned that SU(2) matrices have 3 degrees of freedom, it made me think that you should somehow be able to express SU(2) matrices as a linear combination of 3 basis SU(2) matrices...but this is obviously not the case. I glanced back at your first video and I have a hunch that this is related to those generator matrices...

Also, here you show rotating a vector by conjugating with bivectors, but when I learned about quaternions, they expressed rotation by first expressing a vector as a 0-real quaternion (effectively treating a vector as a bivector), then conjugating it with quaternions. When you show a one-sided multiplication at 13:40, the z component of the vector turned into a trivector, but a one-sided multiplication with a quaternion would still be a quaternion. Even if it's not rotation exactly, is there still a geometric view to this operation?

Finally (maybe not the best video to ask this on), at 14:39 in your introduction video, you show generator matrices for different spin types based off of "commutation relations". But it looks like that was still assuming we were working in "3d". Do different dimensions also have different spin types, and can we find generator matrices for rotations on any spin types in any dimension?

As you pointed out, you can't add SU(2) matrices directly, as the result will not be an SU(2) matrix. The generators end up being the bivectors σyσz, σzσx, σxσy, which you can add together in linear combinations. If you take an exponential e^(-σyσz*θ/2), since (σyσz)^2 = -1, by Euler's formula you get e^(-σyσz*θ/2) = cos(θ/2) - σyσz*sin(θ/2). Exponentiation is how you travel from the generators to the rotation matrices.
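Both statements in this paragraph can be tested numerically: summing SU(2) matrices leaves SU(2), while exponentiating the generator σyσz lands back in it and matches the Euler-style formula. The sketch below uses a truncated Taylor series for the exponential; the truncation length, sample angles, and helper names are assumptions made for the illustration.

```python
import math

SY = ((0, -1j), (1j, 0))
SZ = ((1, 0), (0, -1))
I2 = ((1, 0), (0, 1))

def mul(a, b):
    """Product of two 2x2 matrices."""
    return tuple(
        tuple(sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2))
        for i in range(2)
    )

def add(a, b):
    return tuple(tuple(p + q for p, q in zip(ra, rb)) for ra, rb in zip(a, b))

def scale(c, a):
    return tuple(tuple(c * x for x in row) for row in a)

def det(a):
    return a[0][0] * a[1][1] - a[0][1] * a[1][0]

def close(a, b, tol=1e-12):
    return all(abs(a[i][j] - b[i][j]) < tol for i in range(2) for j in range(2))

G = mul(SY, SZ)   # generator for the yz-plane; G*G is minus the identity

def exp_series(m, terms=30):
    """Truncated Taylor series I + m + m^2/2! + ... (assumed long enough)."""
    result, term = I2, I2
    for n in range(1, terms):
        term = scale(1 / n, mul(term, m))
        result = add(result, term)
    return result

def rotor(theta):
    """exp(-G * theta / 2) computed from the series."""
    return exp_series(scale(-theta / 2, G))

theta = 1.3
closed_form = add(
    scale(math.cos(theta / 2), I2), scale(-math.sin(theta / 2), G)
)
series_matches = close(rotor(theta), closed_form)
det_of_rotor = det(rotor(theta))                 # stays at 1
det_of_sum = det(add(rotor(0.4), rotor(1.3)))    # no longer 1
print(series_matches, det_of_rotor, det_of_sum)
```

The sum of two rotors has determinant well above 1, so it is not an SU(2) matrix, whereas any real multiple of the generator exponentiates to a matrix with determinant 1.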

You can flip back and forth between quaternions and bivectors using: i = -σyσz, j = -σzσx, k = -σxσy.

The reason I was working in 3D is because real life space is 3-dimensional. The group relevant for rotations in 3D is SO(3), and also its double-cover Spin(3), which is SU(2). The spin is not related to the dimension of physical space. Instead it's related to the size of the matrix we use to represent the Lie Algebra of 3D rotations. 1x1 matrix gives spin-0; 2x2 matrix gives spin-1/2; 3x3 matrix gives spin-1; nxn matrix gives spin (n-1)/2.

We can also upgrade to special relativity with the Lorentz Group SO(1,3), whose double-cover is Spin(1,3), aka SL(2,C), and get new generators for spin-0, spin-1/2, spin-1, etc.

Mathematically speaking you could do this for any dimension d and look at the Spin(d) group, but in physics we're generally only interested in Spin(3) and Spin(1,3).

I did some more studying and I need to ask you to clear something up, Chris. We do know opposite spin states are orthogonal, and the states are spinors, i.e. points on the complex projective line. So far, so good. However, it is a fundamental concept that wavefunctions can be expressed in a basis. For instance, a spin in the positive x-direction can be expressed as a linear combination of spins in the positive and negative z-directions. Their probabilities are equal. But after doing a bit of background studying of projective geometry, I learned projective spaces aren't vector spaces at all, and therefore points cannot be decomposed into a basis. I do not see how decomposing the x-spin is therefore a mathematically valid thing to do. There is the fact that the z-spins are an equal distance away from +x in terms of the Fubini-Study metric (i.e. an inner product, which /is/ well-defined for the complex projective line), but that still doesn't justify what we usually do in QM--or does it?

I think it's typically said that quantum mechanics takes place in a "projective hilbert space". It's not technically a vector space because it doesn't have a zero vector (the origin is excluded from projective spaces). But we can still do things like adding points and multiplying them by scalars.

I haven't thought about this too much beyond the above explanation, and I'm not familiar with the Fubini-Study metric. But I think any linear algebra type of operations you need to do in Projective Hilbert space can be "imported" from the original Hilbert Space and used as needed.

@@eigenchris I think I should study projective Hilbert spaces more then. Although I would still expect this to work for the simple case of the Bloch sphere, somehow; the Hilbert space is a vector space inhabited by continuous functions. By the way the Fubini-Study metric is simply the metric on the Riemann sphere, if I understand it correctly. I assumed you were familiar but it's still slightly out of my league too. Thanks for your comments as always.

Just to get back to my own question here: the states still exist in non-projective space, but the only part that is real is what you obtain when you project out the phase. This is why linear combinations can still be taken, and why phases have some real effect, such as with Rabi oscillations. I made the error of assuming that the only thing that exists is the projected points, but this is apparently not so. In other words, the projection happens "after" the linear algebra. I am not 100% sure on this but it does answer my question for now.

please rename this series to "spinors for beginnors"

😂

👍

Almost First

But why does a 3-dimensional vector correspond to a 2x2 matrix? And why those sigmas specifically? Why are you looking for those properties in the sigmas and how would you justify equating them to î ĵ k̂? It feels to me a bit like «here's the rules, just memorize them». I don't know if it's just me, but I don't get how and why exactly we got there.

I find the rest of the video understandable, that is, I find the implications (like: if A then B) understandable, but I don't get the premise (the A).

You could try watching video 11 in this series. These matrices are used to give us a "Geometric Algebra", where we can use not only vectors, but bivectors (planes) and trivectors (cubes) as well.