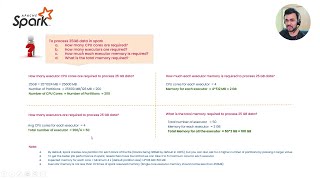

Spark [Executor & Driver] Memory Calculation


- Published: Sep 6, 2024

- #spark #bigdata #apachespark #hadoop #sparkmemoryconfig #executormemory #drivermemory #sparkcores #sparkexecutors #sparkmemory

Video Playlist

-----------------------

Hadoop in Tamil - bit.ly/32k6mBD

Hadoop in English - bit.ly/32jle3t

Spark in Tamil - bit.ly/2ZzWAJN

Spark in English - bit.ly/3mmc0eu

Batch vs Stream processing Tamil - • Data - Batch processi...

Batch vs Stream processing English - • Data - Batch processi...

NOSQL in English - bit.ly/2XtU07B

NOSQL in Tamil - bit.ly/2XVLLjP

Scala in Tamil : goo.gl/VfAp6d

Scala in English: goo.gl/7l2USl

Email : atozknowledge.com@gmail.com

LinkedIn : / sbgowtham

Instagram : / bigdata.in

YouTube channel link

/ atozknowledgevideos

Website

atozknowledge.com/

Technology in Tamil & English

#bigdata #hadoop #spark #apachehadoop #whatisbigdata #bigdataintroduction #bigdataonline #bigdataintamil #bigdatatamil #hadoop #hadoopframework #hive #hbase #sqoop #mapreduce #hdfs #hadoopecosystem #apachespark


Sometimes an interviewer asks: what is the data size of your project, and how do you do memory allocation based on that data size? Could you please make a video explaining real cases that depend on data size?

This is a real A to Z calculation for memory. Thanks for the useful video.

This is the best video I have watched on Executor Memory calculation. Thank you brother.

What if your input size keeps changing? On one day if it's 1GB and another day it's 1TB, would you still suggest the same configuration? Can there be a correct configuration in such cases?

Hi, one clarification.

In a real-world scenario, we need to decide the resources based on the size of the file we are going to process.

Can you please explain how to determine the resources based on the file size?

Hi anna, Vanakkam. My doubt is: how can this calculation be suitable for all jobs? Taking the same cluster configuration that you explained in the video, the size of the data handled differs from job to job, yet we still calculate according to the whole cluster configuration for every job. Please explain, I am really confused.

Thank you so much. It's very clear to me

Hi Sir,

Can you please explain what a practical Hadoop cluster size is in company projects?

How do the dataframe partitions impact the job?

Could you explain when I should increase the number of executors and when I should increase the number of cores for a job?

If you want to run more tasks in parallel, you could increase the number of cores.

If my Spark job reads data from Event Hub, what is the recommended partition count at Event Hub? If the partition count is 10, does only one driver connect to all partitions and send the data to the worker nodes?

My core node in EMR has 32 GB of memory and 4 cores, but when checking the Spark UI, I can see only 10.8 GB and 1 core being used. Why is that?

Do we need to consider the jobs already running in the prod environment when setting these parameter values for our Spark application? Thanks in advance.

This is still a question I am not able to get a proper answer to.

Suppose you have 10 GB of data to process. Using this data-volume scenario, please explain the number of executors, executor memory, and driver memory.

I need your cluster and node configuration: the RAM and cores of each node, and your cluster size.

Thanks for the detailed explanations 👍

What if I have standalone mode, with 16 cores and 64 GB of RAM? How do I calculate executor and driver memory?

Do you have data engineering course notes as a PDF?

Hi, how is the minimum memory 5 GB? Please explain.

Thanks for info.

So I have 1 master and 1 worker with 4 CPUs and 16 GB; the available memory is 12 GB.

When I submit a Spark job on YARN with driver and executor memory of 10 GB and 4 cores,

it is not able to assign the requested values.

Instead, 1 core and 5 or 8 GB is assigned to the executor.

Any help would be appreciated.
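One possible factor in the scenario above is that YARN sizes a container as the requested heap plus a memory overhead, so a 10 GB request needs more than 10 GB. The sketch below is a rough check only. It assumes Spark's default overhead of 10% of the heap with a 384 MB floor, assumes cluster deploy mode so the driver also needs a container on the single worker, and ignores YARN rounding to its minimum allocation size. The function name `container_request_mb` is illustrative, not part of any Spark API.

```python
def container_request_mb(heap_mb, overhead_percent=10, min_overhead_mb=384):
    """Approximate YARN container size for a Spark driver or executor.

    Assumes the default overhead rule: max(384 MB, 10% of the heap).
    """
    overhead_mb = max(min_overhead_mb, heap_mb * overhead_percent // 100)
    return heap_mb + overhead_mb


# Values taken from the comment: 10 GB executor, 10 GB driver, 12 GB available.
executor_mb = container_request_mb(10 * 1024)
driver_mb = container_request_mb(10 * 1024)
available_mb = 12 * 1024

# Assumption: driver and executor both need a container on the one worker node.
fits = executor_mb + driver_mb <= available_mb

print(f"executor container: {executor_mb} MB")
print(f"driver container:   {driver_mb} MB")
print(f"fits in {available_mb} MB: {fits}")
```

Under these assumptions the two containers together ask for far more than the 12 GB available, so the request cannot be granted as submitted. Other settings, such as the YARN maximum allocation per container, can also cap what is assigned.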

Hi, I have an interview scenario: a Spark job is running on a cluster with 2 executors, and there are 5 cores per executor. If the transformation takes 1 minute per partition, how long does the job run for a dataframe with 20 partitions? Please advise.

2 mins

@nnishanthh Hey buddy, are we considering one partition as one task? If yes, why?

@snehilverma4012 Each executor processes 5 partitions concurrently (one task per partition, one task per core).

Each partition takes 1 minute to process.

Given that there are 2 executors and each executor processes 5 partitions concurrently, 10 partitions can be processed simultaneously, not all 20.

So, the 20 partitions are processed in two waves, and the total runtime of the job equals the time taken to finish both waves.

Since each partition takes 1 minute to process, and the cluster can process 10 partitions per minute, the job needs:

20 partitions / 10 partitions per minute = 2 minutes

So, the job would run for a total of 2 minutes.
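The arithmetic for this interview scenario can be sketched as follows. This is a minimal estimate, assuming one task per partition, one core per task (the default `spark.task.cpus=1`), evenly sized partitions, and no scheduling overhead. The function name `estimate_runtime_minutes` is made up for illustration.

```python
import math


def estimate_runtime_minutes(num_partitions, num_executors,
                             cores_per_executor, minutes_per_task):
    """Estimate stage runtime from the number of task waves.

    Assumes one task per partition and one running task per core.
    """
    # Total tasks that can run at the same time across the cluster.
    slots = num_executors * cores_per_executor
    # Tasks run in waves; a partial last wave still takes a full task time.
    waves = math.ceil(num_partitions / slots)
    return waves * minutes_per_task


# Scenario from the thread: 2 executors, 5 cores each, 20 partitions, 1 minute each.
runtime = estimate_runtime_minutes(20, 2, 5, 1)
print(runtime)
```

With 10 task slots, the 20 partitions run in 2 waves, so the estimate is 2 minutes, which matches the "2 mins" reply in this thread.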

Thanks for this nice video. I have a question.

Suppose I have 2 worker nodes, each having 4 cores and 14 GB of memory.

Scenario 1:

By default, Databricks creates 1 executor per node, which means each executor will have 4 cores and 14 GB, and hence can run 4 parallel tasks,

for a total of 8 parallel tasks.

Scenario 2:

If I configure Databricks to have 1 executor per core and configure 3 GB of memory per executor,

I can have 8 executors in total, which means 8 tasks can run in parallel, each with 3 GB.

Both ways I can run at most 8 tasks in parallel. On what basis should I choose my distribution model to get optimal performance?

Anyone, please explain this scenario. I have the same confusion!

I think both scenarios give the same performance. In the first scenario, each executor has 4 cores and 14 GB of RAM and runs 4 tasks in parallel. In the second scenario, we reduce the memory and cores per executor (each executor has 1 core and 3 GB of RAM), so each executor runs only 1 task and 4 executors run 4 tasks in parallel. So both give the same performance, but I am not sure.

In scenario 2, you are going for the thin-executor approach (minimum resources per executor). Having 1 core per executor won't give you multi-threading within an executor, and if you use a broadcast variable you'll need more copies, because each executor needs a separate copy. Ideally, the number of cores per executor is 1-5, because more than 5 cores per executor might hurt throughput.
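To make the comparison in this thread concrete, here is a small sketch that puts the two layouts side by side. It uses only the numbers from the question (2 nodes, 4 cores and 14 GB each, versus 8 executors with 1 core and 3 GB each). The `Layout` class and the 0.5 GB broadcast size are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Layout:
    name: str
    executors: int
    cores_per_executor: int
    memory_gb_per_executor: float

    @property
    def parallel_tasks(self):
        # One task per core, so total slots = executors * cores.
        return self.executors * self.cores_per_executor

    @property
    def memory_gb_per_task(self):
        # Tasks in the same executor share that executor's memory.
        return self.memory_gb_per_executor / self.cores_per_executor

    def broadcast_footprint_gb(self, broadcast_gb):
        # Each executor holds its own copy of a broadcast variable.
        return self.executors * broadcast_gb


fat = Layout("scenario 1: 1 executor per node", 2, 4, 14)
thin = Layout("scenario 2: 1 executor per core", 8, 1, 3)

for layout in (fat, thin):
    print(layout.name,
          layout.parallel_tasks,
          layout.memory_gb_per_task,
          layout.broadcast_footprint_gb(0.5))  # hypothetical 0.5 GB broadcast
```

Both layouts give 8 parallel tasks, but the thin layout keeps 8 copies of any broadcast data instead of 2, which is the trade-off the last reply describes.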

excellent

Why not dynamic allocation?

DRA (dynamic resource allocation) is not recommended in the prod environment: many teams deploy many jobs, so sometimes jobs will over-utilize the cluster.

@dataengineeringvideos But this can be achieved by dividing your root queue into multiple queues and giving each of them an allocation. This ensures that applications running in a high-priority queue get the resources they need.

Yes, we do have sub-queues, with manual memory config instead of DRA.

I would not recommend DRA in sub-queues either.

For example, if sub-queue A is over-utilized, it tries to use the resources of sub-queue B. That is how YARN queues work, so enabling DRA in this case is not a good idea.

@arvindkumar-ed4gf Sorry, I didn't get you. Can you explain with an example? Thanks.

@dataengineeringvideos If we set the maximum and minimum number of executors, there is nothing wrong with dynamic allocation. I am not sure about your setup, but we have at least 60+ jobs processing TBs of data every day with dynamic allocation and have never seen any problem.
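As a sketch of what bounding dynamic allocation with a minimum and maximum can look like, the snippet below builds the relevant Spark properties and renders them as `spark-submit` arguments. The property names are standard Spark configuration keys. The bounds of 2 and 20 executors are placeholder values, the helper function names are made up, and enabling the external shuffle service is an assumption about the cluster setup rather than something stated in the thread.

```python
def dynamic_allocation_conf(min_executors, max_executors):
    """Build Spark properties for bounded dynamic allocation."""
    if min_executors > max_executors:
        raise ValueError("min_executors must not exceed max_executors")
    return {
        "spark.dynamicAllocation.enabled": "true",
        "spark.dynamicAllocation.minExecutors": str(min_executors),
        "spark.dynamicAllocation.maxExecutors": str(max_executors),
        # Assumption: the external shuffle service is available on the cluster.
        "spark.shuffle.service.enabled": "true",
    }


def to_submit_args(conf):
    """Render properties as a list of --conf arguments for spark-submit."""
    args = []
    for key, value in conf.items():
        args += ["--conf", f"{key}={value}"]
    return args


conf = dynamic_allocation_conf(2, 20)  # placeholder bounds
submit_args = to_submit_args(conf)
print(" ".join(submit_args))
```

The upper bound keeps a single job from growing past a fixed executor count, which addresses part of the over-utilization concern raised earlier in the thread. Queue-level limits remain a separate YARN setting.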

I guess you are in the southern part of India.