An introduction to the Random Walk Metropolis algorithm

- Published: 26 Jul 2024

- This video is part of a lecture course which closely follows the material covered in the book, "A Student's Guide to Bayesian Statistics", published by Sage, which is available to order on Amazon here: www.amazon.co.uk/Students-Gui...

For more information on all things Bayesian, have a look at: ben-lambert.com/bayesian/. The playlist for the lecture course is here: • A Student's Guide to B...

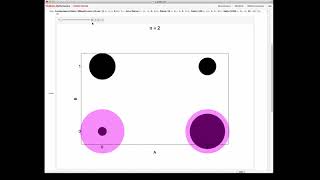

Finally, visuals for MCMC! Highly illuminating, thank you.

This video is 10000x better than the equations in the class.

Right? I don't understand how actual lecturers can be THAT terrible at conveying knowledge. It's like they don't want people to understand it.

Finally found out why it's called Monte Carlo. This is the best explanation of the algorithm I have ever seen. Thanks for this.

Beautifully explained

Best explanation of MH on RUclips. Thank you!

Thanks Ben. This is a really clear visual representation of what the algorithm is doing and how it works in principle. Excellent stuff!

I used this for my Bachelor's thesis to simulate ultracold fermions in a harmonic trap, which was a replication of real experiments! Thank you for explaining, I had forgotten what it did...

thank God there's a video about this

Great explanation. Thank you

the simulation is so helpful! thanks

Best one on RUclips, thanks a lot.

Subscribed. Good voice, good explanations !!

Great video. Way easier to understand than my uni lectures.

Very insightful.

Brilliant honestly.

Are the Mathematica programs you used in the video available? I particularly liked the last one where you showed a more complicated surface. I also just ordered your book; are they available with that?

awesome

I have a question. Suppose rather than having just one value theta, I have multiple values [A,B,C] in my state. Each variable can only have four values [0,1,2,3]. How would I choose a new state from the previous one? Would I calculate a new value for each variable and call it a proposed state and then calculate the value for the complete system?

Probably too late, but for a multi-variable model you want to use a Gibbs sampler.
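
For what it's worth, the proposal scheme asked about above can also be done with plain Metropolis, without switching to Gibbs. Here is a minimal sketch, not a Gibbs sampler: it changes one randomly chosen variable at a time to a different value from [0,1,2,3], which keeps the proposal symmetric. The function `unnormalized_weight` is a made-up target purely for illustration; you would substitute whatever your system actually defines.

```python
import random
from collections import Counter

VALUES = (0, 1, 2, 3)  # allowed values for each of A, B, C


def unnormalized_weight(state):
    # Hypothetical target (an assumption for illustration only):
    # states whose neighbouring variables are close get more weight.
    a, b, c = state
    return 2.0 ** (-(abs(a - b) + abs(b - c)))


def propose(state, rng):
    # Pick one variable at random and move it to a different allowed value.
    # The reverse move has the same probability, so the proposal is symmetric.
    i = rng.randrange(len(state))
    new_value = rng.choice([v for v in VALUES if v != state[i]])
    proposal = list(state)
    proposal[i] = new_value
    return tuple(proposal)


def metropolis_discrete(n_steps, start=(0, 0, 0), seed=0):
    rng = random.Random(seed)
    state = start
    samples = []
    for _ in range(n_steps):
        candidate = propose(state, rng)
        # Ratio of unnormalized weights for the complete system.
        r = unnormalized_weight(candidate) / unnormalized_weight(state)
        if rng.random() < r:
            state = candidate
        samples.append(state)  # a rejected move repeats the current state
    return samples


samples = metropolis_discrete(20000)
counts = Counter(samples)
```

Proposing all three variables at once is also valid, but changing one at a time usually gets more proposals accepted.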

Don't gaps in the true distribution skew the samples to the borders of these gaps because the random walk is less likely to cross the gap, especially with a low sigma in the jumping distribution?
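
One way to explore this question is to try it. Below is a rough sketch, assuming a made-up target with two well-separated bumps (at -5 and +5) and a Gaussian jumping distribution. In the long run the chain still targets the right distribution, but with a small sigma it can take a very long time to cross the gap, so a finite run may sit in one bump. All names and numbers here are illustrative assumptions, not taken from the video.

```python
import math
import random


def bimodal_unnormalized(x):
    # Hypothetical target: two unit-width bumps with a deep gap between them.
    return math.exp(-0.5 * (x + 5.0) ** 2) + math.exp(-0.5 * (x - 5.0) ** 2)


def random_walk_metropolis(target, start, sigma, n_steps, seed=0):
    rng = random.Random(seed)
    x = start
    samples = []
    for _ in range(n_steps):
        candidate = x + rng.gauss(0.0, sigma)  # symmetric jump
        r = target(candidate) / target(x)      # normalizing constant cancels
        if rng.random() < r:
            x = candidate
        samples.append(x)
    return samples


def fraction_in_right_mode(samples):
    return sum(s > 0 for s in samples) / len(samples)


# Both chains start in the left bump; only sigma differs.
narrow = random_walk_metropolis(bimodal_unnormalized, -5.0, 0.1, 20000)
wide = random_walk_metropolis(bimodal_unnormalized, -5.0, 5.0, 20000)
print(fraction_in_right_mode(narrow), fraction_in_right_mode(wide))
```

The two bumps have equal mass, so a well-mixed chain should spend roughly half its time on each side.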

👏👏👏

Maybe a dumb question, but at 5:46 aren't you using the unknown distribution to calculate r? Isn't the black line on the graph the unknown distribution you are trying to estimate?

No, that's a good question. The way I understand it is that all you need to compare is the ratio of the numerator of Bayes' rule. And you sample through different values of the parameter (theta). You basically run through lots of different possible parameter values, and the way it walks across the graph is when it hits a value of the parameter that corresponds to the true posterior distribution. Most of the guesses of the parameter are wrong and don't lead to anything at all, which is why the animation at around 8 minutes has many more wrong guesses than correct ones.

The numerator of Bayes's rule is what dictates where those true parameter spots are. The denominator decides the height of it. This sampling method estimates the height of them which is decided by the denominator. So for your question of r. All we know is the numerator, not the denominator.

I don't get where the likelihood term and the prior term come from. Here we assume they exist. What is an example of a practical application where we have these two terms but don't have the posterior?

@Siarez the difficult part in calculating the posterior is usually the denominator (marginal distribution). This algorithm uses the ratio of unnormalized posteriors, so the cumbersome marginal distribution cancels out.

Yes, the marginal can be quite costly to compute, as you have to integrate out so many (potentially) unknown dimensions.
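
The cancellation described above can be written out in one line. The notation is an assumption, not taken from the video: $\theta_t$ is the current value, $\theta'$ the proposed value, and $x$ the data.

```latex
r = \frac{p(\theta' \mid x)}{p(\theta_t \mid x)}
  = \frac{p(x \mid \theta')\, p(\theta') \,/\, p(x)}
         {p(x \mid \theta_t)\, p(\theta_t) \,/\, p(x)}
  = \frac{p(x \mid \theta')\, p(\theta')}
         {p(x \mid \theta_t)\, p(\theta_t)}
```

The marginal $p(x)$ appears in both numerator and denominator, so only likelihood times prior is ever evaluated.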

I agree with you. There's a disconnect in the explanation in the video. The video mentions that the prior can be anything (just to have a starting point) but doesn't explain where the likelihood comes from.

@payam-bagheri The likelihood is computed in the usual way from your data and proposed data generation model. This video is about how to sample from your unnormalized posterior once you obtain an expression for it using the likelihood × prior.
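
To make that concrete, here is a small sketch under assumed data: 7 heads in 10 coin flips, a binomial likelihood, and a uniform prior on the heads probability theta. None of this comes from the video; it is just an example where likelihood × prior is easy to write down and the exact posterior, Beta(8, 4) with mean 2/3, is known for comparison.

```python
import math
import random

HEADS, FLIPS = 7, 10  # hypothetical data, chosen for illustration


def log_prior(theta):
    # Uniform prior on (0, 1); zero density elsewhere.
    return 0.0 if 0.0 < theta < 1.0 else -math.inf


def log_likelihood(theta):
    # Binomial likelihood up to a constant that cancels in the ratio.
    return HEADS * math.log(theta) + (FLIPS - HEADS) * math.log(1.0 - theta)


def log_unnormalized_posterior(theta):
    lp = log_prior(theta)
    if lp == -math.inf:
        return lp  # outside the prior's support; skip the likelihood
    return lp + log_likelihood(theta)


def sample_posterior(n_steps, start=0.5, sigma=0.2, seed=0):
    rng = random.Random(seed)
    theta = start
    current = log_unnormalized_posterior(theta)
    samples = []
    for _ in range(n_steps):
        candidate = theta + rng.gauss(0.0, sigma)
        proposed = log_unnormalized_posterior(candidate)
        # Accept with probability min(1, r), where r = exp(proposed - current).
        if rng.random() < math.exp(min(0.0, proposed - current)):
            theta, current = candidate, proposed
        samples.append(theta)
    return samples


draws = sample_posterior(50000)[5000:]  # discard early draws as burn-in
print(sum(draws) / len(draws))          # compare with the exact mean 2/3
```

Working with logs avoids underflow when the likelihood is a product over many data points.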

Where can I find the code for this?

Metropolis 1927 illumination= moloch

Conspiracy of dunges = owl of minerva =satan

I wish I had money to celebrate Tết.