LLM Chronicles #4.6: Building an Encoder/Decoder RNN in PyTorch to Translate from English to Italian


- Published: Sep 9, 2024

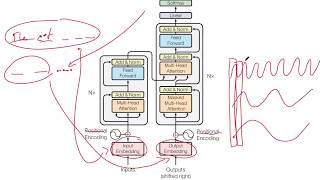

- In this lab we'll use PyTorch to build an encoder/decoder RNN for language translation, similar to the model described in the 2014 paper "Sequence to Sequence Learning with Neural Networks" by Ilya Sutskever (later OpenAI's chief scientist), Oriol Vinyals and Quoc V. Le. Our RNN layers will use LSTM (Long Short-Term Memory) cells.
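A minimal PyTorch sketch of this kind of encoder/decoder, written under stated assumptions: the class names `Encoder`, `Decoder` and `Seq2Seq`, the tiny hyperparameters, the token ids (0 = `<pad>`, 1 = `<sos>`, 2 = `<eos>`) and the per-step coin-flip teacher forcing are all hypothetical choices for illustration, not the lab notebook's code. The encoder's final LSTM `(hidden, cell)` state initializes the decoder, which then emits one target token per step.

```python
import torch
import torch.nn as nn

class Encoder(nn.Module):
    def __init__(self, vocab_size, emb_dim, hidden_dim, num_layers=2):
        super().__init__()
        self.embedding = nn.Embedding(vocab_size, emb_dim)
        self.lstm = nn.LSTM(emb_dim, hidden_dim, num_layers, batch_first=True)

    def forward(self, src):
        # src: (batch, src_len) token ids; only the final states are kept
        _, (hidden, cell) = self.lstm(self.embedding(src))
        return hidden, cell  # each (num_layers, batch, hidden_dim)

class Decoder(nn.Module):
    # Must use the same num_layers and hidden_dim as the encoder,
    # because it is initialized with the encoder's final states.
    def __init__(self, vocab_size, emb_dim, hidden_dim, num_layers=2):
        super().__init__()
        self.embedding = nn.Embedding(vocab_size, emb_dim)
        self.lstm = nn.LSTM(emb_dim, hidden_dim, num_layers, batch_first=True)
        self.out = nn.Linear(hidden_dim, vocab_size)

    def forward(self, token, hidden, cell):
        # token: (batch,) -> a single decoding step
        emb = self.embedding(token).unsqueeze(1)          # (batch, 1, emb_dim)
        output, (hidden, cell) = self.lstm(emb, (hidden, cell))
        return self.out(output.squeeze(1)), hidden, cell  # logits: (batch, vocab)

class Seq2Seq(nn.Module):
    def __init__(self, encoder, decoder):
        super().__init__()
        self.encoder, self.decoder = encoder, decoder

    def forward(self, src, tgt, teacher_forcing_ratio=0.5):
        # tgt: (batch, tgt_len) with tgt[:, 0] == <sos>
        hidden, cell = self.encoder(src)
        token = tgt[:, 0]
        logits = []
        for t in range(1, tgt.size(1)):
            step_logits, hidden, cell = self.decoder(token, hidden, cell)
            logits.append(step_logits)
            # Teacher forcing: sometimes feed the ground-truth token,
            # otherwise feed the model's own greedy prediction.
            use_teacher = torch.rand(1).item() < teacher_forcing_ratio
            token = tgt[:, t] if use_teacher else step_logits.argmax(dim=-1)
        return torch.stack(logits, dim=1)  # (batch, tgt_len - 1, vocab)

    @torch.no_grad()
    def translate(self, src, sos_id, eos_id, max_len=20):
        # Greedy decoding for one sentence, src: (1, src_len)
        hidden, cell = self.encoder(src)
        token = torch.tensor([sos_id])
        result = []
        for _ in range(max_len):
            step_logits, hidden, cell = self.decoder(token, hidden, cell)
            token = step_logits.argmax(dim=-1)
            if token.item() == eos_id:
                break
            result.append(token.item())
        return result

# Hypothetical toy setup: random token ids instead of real sentences.
torch.manual_seed(0)
SRC_VOCAB, TGT_VOCAB, PAD_ID = 50, 60, 0
model = Seq2Seq(Encoder(SRC_VOCAB, 32, 64), Decoder(TGT_VOCAB, 32, 64))
src = torch.randint(3, SRC_VOCAB, (4, 7))
tgt = torch.randint(3, TGT_VOCAB, (4, 9))
tgt[:, 0] = 1  # <sos>

logits = model(src, tgt)
# Predictions for steps 1..T-1 are compared with targets tgt[:, 1:]
loss = nn.CrossEntropyLoss(ignore_index=PAD_ID)(
    logits.reshape(-1, TGT_VOCAB), tgt[:, 1:].reshape(-1))
loss.backward()
```

The `teacher_forcing_ratio` trades off training stability (feeding ground truth) against exposure to the model's own mistakes; at inference time `translate` always feeds back its own predictions.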

🖹 Lab Notebook: colab.research...

🕤 Timestamps:

00:10 - Sutskever's Paper on Language Translation with RNNs

00:27 - Loading the Dataset, Tokenizer and Vocabulary

02:40 - PyTorch Dataset and DataLoader Objects

05:20 - Encoder / Decoder RNN in PyTorch

08:15 - Inference / Forward Pass for Translation

10:10 - Training Loop, Teacher Forcing

15:54 - Using/Evaluating the Trained Model

19:15 - Bi-Directional RNN for the Encoder

22:10 - Using/Evaluating the Trained Bi-Directional RNN

23:29 - Comparing our model to Sutskever's Paper
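The dataset, tokenizer and vocabulary steps can be sketched in plain Python. This is a hypothetical, minimal version: whitespace tokenization, the special tokens `<pad>`, `<sos>`, `<eos>`, `<unk>` and the helper names are assumptions, not the notebook's tokenizer. In a PyTorch pipeline, logic like `pad_batch` would typically live in a `DataLoader`'s `collate_fn` (or be replaced by `torch.nn.utils.rnn.pad_sequence`).

```python
from collections import Counter

PAD, SOS, EOS, UNK = "<pad>", "<sos>", "<eos>", "<unk>"

def tokenize(sentence):
    # Hypothetical tokenizer: lowercase and split on whitespace
    return sentence.lower().split()

def build_vocab(sentences, min_freq=1):
    counts = Counter(tok for s in sentences for tok in tokenize(s))
    # Special tokens get the first ids, so <pad> is always 0
    itos = [PAD, SOS, EOS, UNK] + sorted(t for t, c in counts.items() if c >= min_freq)
    stoi = {tok: i for i, tok in enumerate(itos)}
    return stoi, itos

def encode(sentence, stoi):
    # Unknown words map to <unk>; each sentence is wrapped in <sos> ... <eos>
    ids = [stoi.get(tok, stoi[UNK]) for tok in tokenize(sentence)]
    return [stoi[SOS]] + ids + [stoi[EOS]]

def pad_batch(seqs, pad_id):
    # Right-pad every sequence to the length of the longest one in the batch
    max_len = max(len(s) for s in seqs)
    return [s + [pad_id] * (max_len - len(s)) for s in seqs]

italian = ["ciao mondo", "il gatto dorme", "ciao gatto"]
stoi, itos = build_vocab(italian)
batch = pad_batch([encode(s, stoi) for s in italian], stoi[PAD])
print(batch)  # [[1, 4, 8, 2, 0], [1, 7, 6, 5, 2], [1, 4, 6, 2, 0]]
```

A real translation setup would build one vocabulary per language (English source, Italian target) and usually set `min_freq` above 1 to keep the vocabulary small.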

References:

- "Sequence to Sequence Learning with Neural Networks", arxiv.org/abs/...

Don't forget to like, share, and subscribe for more neural network adventures!

🚨 Clarifications & Corrections 🚨

1. At 25:04 - The model sizes shown are in millions of parameters, not megabytes!
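Parameter count and memory size are related but different: with float32 weights, each parameter takes 4 bytes. The stdlib sketch below counts parameters for a small hypothetical configuration (vocabularies of 50 and 60 tokens, 32-dim embeddings, 2-layer LSTMs with 64 hidden units). It assumes PyTorch's `nn.LSTM` layout, where each layer and direction has `weight_ih`, `weight_hh`, `bias_ih` and `bias_hh`, giving 4h(in + h + 2) parameters, and a decoder with an untied output `Linear` layer. With PyTorch installed, `sum(p.numel() for p in model.parameters())` gives the count directly.

```python
def lstm_params(input_size, hidden_size, num_layers=1, bidirectional=False):
    # Per layer and direction: weight_ih (4h x in), weight_hh (4h x h),
    # bias_ih (4h) and bias_hh (4h)  ->  4h * (in + h + 2)
    dirs = 2 if bidirectional else 1
    total = 0
    for layer in range(num_layers):
        # Layers after the first receive the (concatenated) hidden states
        layer_in = input_size if layer == 0 else hidden_size * dirs
        total += dirs * 4 * hidden_size * (layer_in + hidden_size + 2)
    return total

def seq2seq_params(src_vocab, tgt_vocab, emb_dim, hidden_dim, num_layers):
    encoder = src_vocab * emb_dim + lstm_params(emb_dim, hidden_dim, num_layers)
    decoder = (tgt_vocab * emb_dim
               + lstm_params(emb_dim, hidden_dim, num_layers)
               + hidden_dim * tgt_vocab + tgt_vocab)  # output Linear: weight + bias
    return encoder + decoder

def float32_megabytes(num_params):
    return num_params * 4 / 1e6  # 4 bytes per float32 parameter

n = seq2seq_params(50, 60, 32, 64, 2)
print(n)  # 124156
print(f"{n / 1e6:.3f}M parameters ~ {float32_megabytes(n):.2f} MB as float32")
```

The `bidirectional` flag shows why a bi-directional encoder roughly doubles the LSTM's parameter count, and more than doubles it for deeper layers, whose input is the concatenation of both directions.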

Thanks for starting with the basics ❤

Thanks for the support! The next episode is on attention: we'll add it to this model and get pretty good results.