DDPS | GPT-PINN and TGPT-PINN


- Published: Sep 20, 2024

- DDPS Talk date: April 5, 2024

Speaker: Yanlai Chen (UMass Dartmouth, yanlaichen.reaw...)

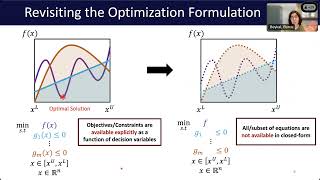

The Physics-Informed Neural Network (PINN) has proven itself a powerful tool for obtaining numerical solutions of nonlinear partial differential equations (PDEs), leveraging the expressivity of deep neural networks and the computing power of modern heterogeneous hardware. However, its training is still time-consuming, especially in multi-query and real-time simulation settings, and its parameterization is often excessive.

In this talk, we present the recently proposed Generative Pre-Trained PINN (GPT-PINN), which mitigates both challenges in the setting of parametric PDEs. GPT-PINN represents a brand-new meta-learning paradigm for parametric systems. It is a network of networks: its outer (meta-) network is hyper-reduced, with only one hidden layer containing a significantly reduced number of neurons. Moreover, the activation function at each hidden neuron is a (full) PINN pre-trained at a judiciously selected system configuration. The meta-network adaptively “learns” the parametric dependence of the system and “grows” this hidden layer one neuron at a time. In the end, by encompassing a very small number of networks trained at this set of adaptively selected parameter values, the meta-network is capable of generating surrogate solutions for the parametric system across the entire parameter domain accurately and efficiently.
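The "grow one neuron at a time" idea can be illustrated with a toy sketch. Everything here is an assumption made for illustration and is not taken from the talk: the model problem u'(x) = -mu*u(x) with u(0) = 1, the closed-form `pretrained_solution` standing in for a fully trained PINN, the arbitrary choice of the first neuron, and the use of a least-squares solve in place of gradient-based training (possible only because this toy problem is linear). The function names `fit_meta_network` and `greedy_grow` are hypothetical.

```python
import numpy as np

x = np.linspace(0.0, 1.0, 50)  # collocation points; x[0] = 0 carries the initial condition

def pretrained_solution(mu_i):
    """Stand-in for a full PINN trained at mu_i: returns u and du/dx on x."""
    u = np.exp(-mu_i * x)
    return u, -mu_i * u

def fit_meta_network(mu, neurons):
    """Fit the output weights c of the one-hidden-layer meta-network at
    parameter mu by minimizing the ODE residual plus the initial-condition
    mismatch. Returns (c, loss)."""
    U = np.stack([u for u, _ in neurons], axis=1)    # (n_points, n_neurons)
    dU = np.stack([du for _, du in neurons], axis=1)
    # Residual rows: (dU + mu*U) c = 0; last row: u_hat(0) = U[0] c = 1.
    A = np.vstack([dU + mu * U, U[:1]])
    b = np.concatenate([np.zeros(len(x)), [1.0]])
    c, *_ = np.linalg.lstsq(A, b, rcond=None)
    loss = np.mean((A @ c - b) ** 2)
    return c, loss

def greedy_grow(candidates, n_neurons):
    """Grow the hidden layer one neuron at a time: each new neuron is a
    solution 'pre-trained' at the candidate where the current loss is largest."""
    chosen = [candidates[0]]  # arbitrary starting configuration (assumption)
    neurons = [pretrained_solution(chosen[0])]
    for _ in range(n_neurons - 1):
        losses = [fit_meta_network(mu, neurons)[1] for mu in candidates]
        worst = candidates[int(np.argmax(losses))]
        chosen.append(worst)
        neurons.append(pretrained_solution(worst))
    return chosen, neurons

candidates = np.linspace(1.0, 10.0, 40)
chosen, neurons = greedy_grow(candidates, n_neurons=4)
c, loss = fit_meta_network(5.3, neurons)  # surrogate at an unseen parameter
```

In this sketch the expensive step (training a full network) happens only at the few selected parameter values, while each new query solves for a handful of output weights. A nonlinear PDE would need an iterative optimizer and automatic differentiation instead of the least-squares solve used here.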

Time permitting, we will discuss the Transformed GPT-PINN, TGPT-PINN, which achieves nonlinear model reduction via the addition of a transformation layer before the pre-trained PINN layer.
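To see why a transformation layer in front of a frozen network can help, consider a hedged toy sketch. All specifics are assumptions for illustration rather than details from the talk: the transport equation u_t + mu*u_x = 0, the Gaussian profile, the input map (x, t) -> (x + a*t, t) with a single trainable shift `a`, central differences standing in for automatic differentiation, and a grid search standing in for training.

```python
import numpy as np

mu0 = 1.0  # parameter at which the stand-in "pre-trained PINN" was trained

def pretrained_u(x, t):
    """Stand-in for a PINN trained at mu0: exact solution of u_t + mu0*u_x = 0."""
    return np.exp(-50.0 * (x - mu0 * t - 0.3) ** 2)

def transformed_u(x, t, a):
    """Transformation layer (x, t) -> (x + a*t, t), followed by the frozen network."""
    return pretrained_u(x + a * t, t)

def residual_loss(a, mu, h=1e-4):
    """Mean squared residual of u_t + mu*u_x = 0, using central differences."""
    x, t = np.meshgrid(np.linspace(0.0, 2.0, 81), np.linspace(0.0, 0.5, 21))
    u_t = (transformed_u(x, t + h, a) - transformed_u(x, t - h, a)) / (2 * h)
    u_x = (transformed_u(x + h, t, a) - transformed_u(x - h, t, a)) / (2 * h)
    return np.mean((u_t + mu * u_x) ** 2)

mu = 2.5                                # unseen parameter value
shifts = np.linspace(-3.0, 3.0, 601)    # candidate values for the shift a
a_best = shifts[np.argmin([residual_loss(a, mu) for a in shifts])]
```

A moving profile like this is hard to capture with a linear combination of a few snapshots, but a single pre-trained solution composed with a parameter-dependent input map reproduces it; here the residual is minimized near `a = mu0 - mu`. The map is applied before the pre-trained function, so the reduction is nonlinear even though the map itself is affine.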

Yanlai Chen received his Ph.D. in Mathematics from the School of Mathematics, University of Minnesota, in 2007. He then worked as a Postdoctoral Researcher at Brown University before joining the Department of Mathematics at the University of Massachusetts Dartmouth in August 2010, where he currently serves as a full professor. Dr. Chen’s research has been supported by the NSF, the AFOSR, and the ONR via UMassD's MUST program. His research interests are in numerical analysis and scientific computing, in particular finite element methods, reduced basis methods and other fast algorithms, uncertainty quantification, and the design and analysis of machine learning algorithms. He has graduated five doctoral students and initiated UMassD's ACCOMPLISH program via an NSF grant to provide financial, social, and curricular support to students in need.

DDPS webinar: www.librom.net...

💻 LLNL News: www.llnl.gov/news

📲 Instagram: / livermore_lab

🤳 Facebook: / livermore.lab

🐤 Twitter: / livermore_lab

About LLNL: Lawrence Livermore National Laboratory has a mission of strengthening the United States’ security through development and application of world-class science and technology to: 1) enhance the nation’s defense, 2) reduce the global threat from terrorism and weapons of mass destruction, and 3) respond with vision, quality, integrity and technical excellence to scientific issues of national importance. Learn more about LLNL: www.llnl.gov/.

IM release number is: LLNL-VIDEO-862758