RAGAS: How to Evaluate a RAG Application Like a Pro for Beginners


- Published: 30 May 2024

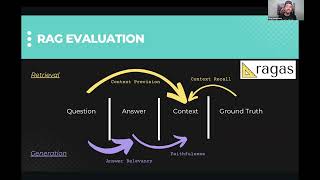

- Welcome to an in-depth tutorial on RAGAS, a framework for evaluating and testing retrieval-augmented generation (RAG) applications. 🌐🛠️ Today, we're diving into how RAGAS helps you improve your AI application by assessing both the retrieval and the generation stages of a RAG pipeline.

🔹 What is RAGAS?

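RAGAS (short for Retrieval Augmented Generation Assessment) is a framework for evaluating RAG pipelines. It runs representative queries through your application and scores each sample (the question, the retrieved contexts, the generated answer and, for some metrics, a ground-truth answer) to measure how well the language and embedding models behind the retrieval and generation steps perform.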

🔹 Tutorial Overview:

Installation: Easy steps to get RAGAS up and running on your system.

Core Components: Understanding the stages of a RAG pipeline that RAGAS evaluates, including data ingestion, chunking, and response generation.

Practical Demonstration: A walkthrough of a sample testing scenario showing how you can use RAGAS to evaluate your AI models.

Evaluation Metrics: A detailed explanation of key metrics like faithfulness and answer correctness, which help you check that your model's outputs are accurate and reliable.
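The two metrics named above are described in the RAGAS documentation roughly as follows: faithfulness is the share of claims in the generated answer that can be inferred from the retrieved context (so it needs no ground truth), and answer correctness blends a statement-level F1 score against the ground truth with the semantic similarity between answer and ground truth. In RAGAS itself an LLM extracts and judges the claims; the sketch below assumes those counts are already known and only shows the arithmetic. The function names are hypothetical, and the 0.75/0.25 weighting is an assumption based on the default documented for answer correctness in RAGAS 0.1.x, so check the docs for your version.

```python
def faithfulness(supported_claims: int, total_claims: int) -> float:
    """Fraction of the answer's claims that the retrieved context supports.

    Hypothetical helper: RAGAS obtains these counts from an LLM judge.
    """
    if total_claims == 0:
        return 0.0
    return supported_claims / total_claims


def factual_f1(tp: int, fp: int, fn: int) -> float:
    """Statement-level F1 against the ground truth: TP / (TP + 0.5 * (FP + FN))."""
    denom = tp + 0.5 * (fp + fn)
    return tp / denom if denom else 0.0


def answer_correctness(
    tp: int,
    fp: int,
    fn: int,
    semantic_similarity: float,
    weights: tuple = (0.75, 0.25),  # assumed default: (factual, semantic)
) -> float:
    """Weighted average of factual F1 and answer/ground-truth semantic similarity."""
    w_fact, w_sem = weights
    weighted = w_fact * factual_f1(tp, fp, fn) + w_sem * semantic_similarity
    return weighted / (w_fact + w_sem)


# Example: 3 of the answer's 4 claims are backed by the context.
print(faithfulness(supported_claims=3, total_claims=4))  # 0.75
# Example: 2 correct statements, 1 unsupported, 1 missing, similarity 0.9.
print(round(answer_correctness(tp=2, fp=1, fn=1, semantic_similarity=0.9), 3))
```

Because faithfulness only compares the answer with the retrieved context, it is one of the metrics that can still be computed when no ground-truth answer is available.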

🔹 Why Watch This Video?

Enhance AI Performance: Learn to pinpoint strengths and weaknesses in your AI models.

Streamlined Testing: Discover how to systematically test and improve the retrieval and generation capabilities of your language models.

🔔 Don't forget to subscribe and hit the bell icon to stay updated on all things Artificial Intelligence. Like this video to help it reach more AI enthusiasts like you!

🔗 Resources:

Sponsor a Video: mer.vin/contact/

Do a Demo of Your Product: mer.vin/contact/

Patreon: / mervinpraison

Ko-fi: ko-fi.com/mervinpraison

Discord: / discord

Twitter / X : / mervinpraison

Code: mer.vin/2024/05/ragas-basics-...

Timestamps:

0:00 - Introduction to RAGAS

0:38 - How RAGAS Works: From Data Ingestion to Response Generation

1:40 - Starting with RAGAS: Installation Guide

2:46 - Configuring RAGAS: Step-by-Step Code Walkthrough

3:47 - Evaluating AI with RAGAS: Understanding Metrics

5:30 - Practical Example: Running RAGAS in Action

6:01 - Results Analysis: Interpreting Output Data

7:31 - What's Next? Upcoming Detailed Tutorials on RAGAS

#RAGAS #Evaluate #RAG #Application


Impressive and a very clear-cut video. Waiting for the upcoming video, please post soon 🤗

Nice one. Finally an LLM testing module. This could be a way to evaluate agents, models, pre-prompts and arguments, giving each a score and using these scores for a scoreboard (like a Q-table in ML terms), so an LLM program could keep track of which agent with which arguments works best. I'd like to know which agent is the best, but it's not easy to keep track in this fast-changing field.
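The scoreboard idea in this comment can be sketched without any RAGAS-specific code. A minimal sketch, assuming each evaluation run produces a single score (for example the mean faithfulness over a test set) and is tagged with the configuration that produced it; the `Scoreboard` class and the configuration tuples are hypothetical. Unlike a Q-table there are no states or reward updates here, only a running average per configuration and a ranking.

```python
from collections import defaultdict
from statistics import mean


class Scoreboard:
    """Hypothetical scoreboard keyed by configuration (agent, model, prompt, ...)."""

    def __init__(self):
        # configuration tuple -> list of scores from individual evaluation runs
        self._scores = defaultdict(list)

    def record(self, config, score):
        """Store one evaluation score for a configuration."""
        self._scores[config].append(score)

    def ranking(self):
        """Return (config, mean score) pairs sorted best first."""
        averaged = [(cfg, mean(scores)) for cfg, scores in self._scores.items()]
        return sorted(averaged, key=lambda item: item[1], reverse=True)


board = Scoreboard()
board.record(("agent-a", "model-x", "prompt-v1"), 0.82)
board.record(("agent-a", "model-x", "prompt-v1"), 0.78)
board.record(("agent-b", "model-y", "prompt-v2"), 0.91)
for config, score in board.ranking():
    print(config, round(score, 2))
```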

Thanks for the info!

🙏

This is great for a newbie like me. Thanks!

Thank you

Sir, can we use this RAGAS method to evaluate responses using the Gemini-Pro API?

How do we create the sample data? In an existing RAG app we can log the context, query, answer and ground truth. Would this be done manually and then saved as JSON? Is that the right understanding?
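Logging interactions from an existing RAG app and saving them as JSON, as this comment describes, is one workable approach. A minimal sketch, assuming a JSON Lines file (one record per line) and the field names `question`, `contexts`, `answer` and `ground_truth`, which are modelled on early RAGAS releases and should be checked against the version you use. The helper names are hypothetical, and `ground_truth` is left empty so a subject-matter expert can fill it in later.

```python
import json


def log_interaction(path, question, contexts, answer, ground_truth=""):
    """Append one RAG interaction as a JSON line (hypothetical helper)."""
    record = {
        "question": question,
        "contexts": list(contexts),  # the retrieved chunks passed to the LLM
        "answer": answer,
        "ground_truth": ground_truth,  # to be filled in by a domain expert
    }
    with open(path, "a", encoding="utf-8") as f:
        f.write(json.dumps(record, ensure_ascii=False) + "\n")


def load_samples(path):
    """Read all logged records back as a list of dicts, skipping blank lines."""
    with open(path, encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]
```

The loaded list can then be turned into a Hugging Face dataset (for example with `datasets.Dataset.from_list`) and passed to RAGAS's `evaluate` function together with the metrics you want.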

Great job

Thanks

Yes, please continue this RAGAS series. I'd like to know, for example, how we would code it if we used several PDFs. Also, RAGAS is only for text data and not tables/images, right?

Everything is perfect, but please make a version for a local LLM.

WOW, this is new 🤩

Thank you

Will the uploaded documents be available publicly?

I don't think ground truth is generated by RAGAS; as subject matter experts, we have to provide the ground truth.

RAGAS has another feature to generate questions and ground truth from a given content. I’ll create a video on how to do that

@MervinPraison please do!

@MervinPraison Can you please share how we can use it in a real-world deployment after go-live? We might not have access to ground-truth labels when a user asks a query.