- Видео 16

- Просмотров 90 742

John K. Kruschke

Добавлен 22 июн 2009

Some Bayesian approaches to replication analysis and planning

A talk presented at the Association for Psychological Science, Friday May 27, 2016. This video is a pre-recording while sitting at my desk; the actual talk included some spontaneous additions about relations of the material to previous speakers' talks, and a few attempts at you-had-to-be-there humor. For a snapshot of the speakers, see doingbayesiandataanalysis.blogspot.com/2016/05/some-bayesian-approaches-to-replication.html

Просмотров: 4 243

Видео

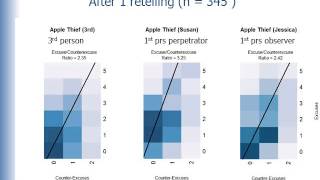

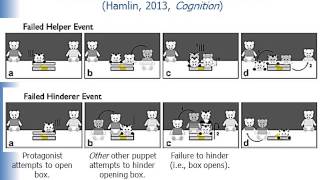

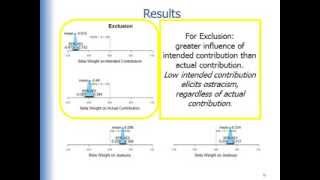

Perspectives on Moral Judgment. Part 4B: Narratives and morality (cont.)

Просмотров 36310 лет назад

Perspectives on Moral Judgment. (Remak Seminar Award Talk, March 4, 2014.) Part 1: The sense of right and wrong; universal moral foundations. Part 2: Innate moral senses; the Remak seminar award. Part 3: Punishment and morality. Part 4: Narratives and morality. Part 5: Humor and morality.

Perspectives on Moral Judgment. Part 4: Narratives and morality

Просмотров 42110 лет назад

Perspectives on Moral Judgment. (Remak Seminar Award Talk, March 4, 2014.) Part 1: The sense of right and wrong; universal moral foundations. Part 2: Innate moral senses; the Remak seminar award. Part 3: Punishment and morality. Part 4: Narratives and morality. Part 5: Humor and morality.

Perspectives on Moral Judgment. Part 1: The sense of right and wrong; universal moral foundations.

Просмотров 3 тыс.10 лет назад

Perspectives on Moral Judgment. (Remak Seminar Award Talk, March 4, 2014.) Part 1: The sense of right and wrong; universal moral foundations. Part 2: Innate moral senses; the Remak seminar award. Part 3: Punishment and morality. Part 4: Narratives and morality. Part 5: Humor and morality.

Perspectives on Moral Judgment. Part 2: Innate moral senses; the Remak seminar award.

Просмотров 64410 лет назад

Perspectives on Moral Judgment. (Remak Seminar Award Talk, March 4, 2014.) Part 1: The sense of right and wrong; universal moral foundations. Part 2: Innate moral senses; the Remak seminar award. Part 3: Punishment and morality. Part 4: Narratives and morality. Part 5: Humor and morality.

Perspectives on Moral Judgment. Part 3: Punishment and morality.

Просмотров 47310 лет назад

Perspectives on Moral Judgment. (Remak Seminar Award Talk, March 4, 2014.) Part 1: The sense of right and wrong; universal moral foundations. Part 2: Innate moral senses; the Remak seminar award. Part 3: Punishment and morality. Part 4: Narratives and morality. Part 5: Humor and morality.

Perspectives on Moral Judgment. Part 5: Humor and morality.

Просмотров 53510 лет назад

Perspectives on Moral Judgment. (Remak Seminar Award Talk, March 4, 2014.) Part 1: The sense of right and wrong; universal moral foundations. Part 2: Innate moral senses; the Remak seminar award. Part 3: Punishment and morality. Part 4: Narratives and morality. Part 5: Humor and morality.

Precision is the goal, Part 1

Просмотров 10 тыс.10 лет назад

Talk at U.C. Irvine, March 14, 2014. Part 1: Rejecting null is not enough, we also need an estimate and its precision. Bayesian estimation supersedes frequentist confidence intervals and "the new statistics". Part 2: Bayesian estimation supersedes "the new statistics." @2:35: Two Bayesian ways to assess a null value. Highest density interval with region of practical equivalence. Bayesian model ...

Precision is the goal, Part 2

Просмотров 4,2 тыс.10 лет назад

Talk at U.C. Irvine, March 14, 2014. Part 1: Rejecting null is not enough, we also need an estimate and its precision. Bayesian estimation supersedes frequentist confidence intervals and "the new statistics". Part 2: Bayesian estimation supersedes "the new statistics." @2:35: Two Bayesian ways to assess a null value. Highest density interval with region of practical equivalence. Bayesian model ...

Precision is the goal, Part 3

Просмотров 2,5 тыс.10 лет назад

Talk at U.C. Irvine, March 14, 2014. Part 1: Rejecting null is not enough, we also need an estimate and its precision. Bayesian estimation supersedes frequentist confidence intervals and "the new statistics". Part 2: Bayesian estimation supersedes "the new statistics." @2:35: Two Bayesian ways to assess a null value. Highest density interval with region of practical equivalence. Bayesian model ...

Precision is the goal, Part 4

Просмотров 2,1 тыс.10 лет назад

Talk at U.C. Irvine, March 14, 2014. Part 1: Rejecting null is not enough, we also need an estimate and its precision. Bayesian estimation supersedes frequentist confidence intervals and "the new statistics". Part 2: Bayesian estimation supersedes "the new statistics." @2:35: Two Bayesian ways to assess a null value. Highest density interval with region of practical equivalence. Bayesian model ...

Doing BEST: Installing and running Bayesian estimation software

Просмотров 4,8 тыс.11 лет назад

How to install and run software that accompanies the article, Bayesian estimation supersedes the t test, Journal of Experimental Psychology: General.

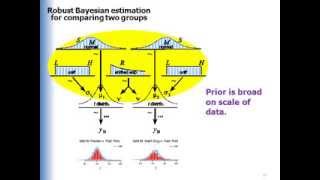

Bayesian Estimation Supersedes the t Test

Просмотров 28 тыс.11 лет назад

Highlights from the JEP:General article of the same title. Talk presented at the Psychonomic Society, Nov. 2012. Contents include a brief overview of Bayesian estimation for two groups, and three cases contrasting Bayesian estimation with the classic t test.

Bayesian Methods Interpret Data Better

Просмотров 26 тыс.11 лет назад

Talks at Psychonomic Society Special Session, Nov. 2012. Contents include a very brief overview of Bayesian estimation and decision rules, then a look at sequential testing of accumulating data, the goal of precision (as opposed to rejecting null), and multiple comparisons (with shrinkage in hierarchical models).

Bayesian Estimation Supersedes the t Test (SEE REVISED VERSION AT http://youtu.be/fhw1j1Ru2i0 )

Просмотров 3,6 тыс.11 лет назад

SEE REVISED VERSION AT ruclips.net/video/fhw1j1Ru2i0/видео.html (new ending)

Cup Game, from your perspective, with counts

Просмотров 1,2 тыс.15 лет назад

Cup Game, from your perspective, with counts

Sir ,I have to need Bayesiantools pakacge ..but it's not installing in r studio ...tell me how to install in r studio ..have a nice day

how to explain the green numbers in the plot 3.6%<0<96.4%? what does it mean?

That's just the percentage of the distribution below 0 and above 0. That annotation could have been omitted.

thanks for this vedio. Do you have any ideas/ papers of how can we use prior in logistic or survival analysis by R?

Hello , I think the big problem for bayesian is the prior and how to choose them. I agree with you that bayesian gives more inference compared to frequents as in bayesian you can compute the magnitude of the Null hypothesis. please I would like to know any vedios that explains how to choose the best prior.

For a conceptual explanation of how to set priors, see this open-access article: Bayesian data analysis for newcomers, link.springer.com/article/10.3758/s13423-017-1272-1 In particular, see the section headed "Prior distribution: innocuous, hazardous, beneficial". For a detailed example of setting priors for a realistic yet simple application, see the Supplementary Example for this open access article: Bayesian analysis reporting guidelines, www.nature.com/articles/s41562-021-01177-7 The Supplementary Example is available here: osf.io/w7cph/

@@JohannZahneknirsche Thank you a lot, Prof Kruschke, The only reason that does not make me use the Bayesian approach is the possibility of having a bias in the analysis, the funny part is as I asked myself why the frequency approach does not have a bias as many researchers state, since sometimes the frequency models may not be the correct approach. I asked my professor if I can use the Bayesian approach in my research and he encouraged me to work only with the traditional approach he explained the traditional approach works very well in machine learning compared to Bayesian, but I am curious about how to choose the correct prior, especially for logistics and survival analysis. I want to read more about the bayesian approach and I may buy one of your books soon🙂 I encouraged you to make new videos prof, I found your explanation very clear and let us make this channel active again. Best regards, Amin

So, is a distribution used in the model?

Looks good. I might look over the r code, and rewrite it in Julia.

Thank u

This is fantastic! Very well explained

this video really help me for my demo :)

Dear Prof. Kruschke, thanks a lot for this incredible presentation! One of the rare presentations that give an easy and intuitive explanation to a complex matter.

I have a question about interpreting the results from the example with small N. I see that the 95% HDI includes 0, so it makes sense that we would not reject the null hypothesis. However the green text in the output reads "3.6% < 0 < 96.4%". What does this refer to? My previous assumption was that 3.6% referred to the entire distribution and that for any x < 5%, x < 0 < 1-x would indicate a significant difference in means.

For the baseball example: would I be correct to say that the prior distribution for the entire team = Beta(all.hits+1, all.misses+1)?

Hi John, thank you so much for this. It is very useful. I have a question regarding BESTexample.R I downloaded you package an followed instructions, and ran the above example sucessfully. I then tried to run it on my own data, but first I thought I'd fiddle the example data. I divided one of the data vectors by ten y2=y2*0.1 and re-run the script, expecting the analysis to indicate that the estimate for muDiff was non-0 with high credibility. But [HDIlow,HDIhigh] for muDiff includes 0; why is this? Do I need to give different initial prior values?

Dear Linford: Have you looked at the new data? Are the sample means closer together than they were before? (There should be no need to change the priors, because the scripts make the priors broad relative to the scale of the data.)

@@JohannZahneknirsche Hi John, thanks for your reply. The sample means are much further apart than they were (~90 units), which is why I was expecting [HDIlow,HDIhigh] for muDiff to not include 0.

Any advise on this John?

Where in the DBDA2Eprograms does the BEST.R file reside? Also the functions associated with the "workspace" at 8:00 are not accessible and RStudio only shows me Environment: "package 'BEST.R' is not available (for R version 3.5.1)".

wow I'm watching this in 2018 thank you . great video

... the best fitting t- and N- distributions have different means?? (2:40)

Yes.

Excellent video John! I'm a proud owner of your book. In the video you suggest that neither Bayes nor NHST should be regarded as more 'correct'. However, we all know that NHST is inherently flawed (stopping rules, etc.) and that Bayes is logically the correct choice- which is important to emphasize given that they lead to two different conclusions for the examples you present. Why be shy about this? ;)

The two approaches ask different questions. Bayesian asks about credibilities of parameter values given observed data, frequentist asks about error rates give hypothetical worlds. Neither question is necessarily the "correct" question to ask, but most people intuitively think they're asking the Bayesian question because they interpret the result in a Bayesian way.

Thanks for the reply, John. Yes, I agree that the two approaches ask different questions, and, as your examples nicely illustrate, can yield different conclusions to inform our decisions. Given the inherent flaws in NHST and the logical coherence of Bayesian inference, which one would you trust more to make decisions? I think I know the answer ;)

so valuable. many thanks

thanks for this.

Thank you, these are great.

Are there best practice guidelines for selecting an appropriate ROPE?

How can I deal with NA's in my data source? File is a .csv... I always get an error. It works with a file without NA's!

Sorry but the scripts assume you have no missing data. If your missing values are "missing completely at random" such that there is no systematic reason why some values are missing and not others, then simply delete the rows with missing values. Otherwise you have to model the missingness, which is a completely different ball game.

1) Is my initial prior necessarily normally distributed around zero? How can I defend myself against a biased prior? Given I have a flawed sample and produce a huge effect as a fluke, wouldn't this influence my posterior dramatically and in turn my next prior? Given that effect is a fluke wouldn't I need several other replications to converge back to a Null effect? 2) I am wondering where is the difference between using a the posterior of prior study to do a power calculation using bayesian methods for a replication to using the effect size estimated in a prior experiment to do power calculation using frequentist approaches? Looks the same to me the only difference being one using a distribution the other using a point estimate. I don't see the 'technical' advantages of the bayesian approach over the frequentist approach. The main points for replications stand: - many replications - with a lot of power - prior commitment of the analysis techniques and approaches But those work for both (Bayesian & frequentist) approaches.

Felix, thanks for your interest. 1. The prior is explicit and must make sense to a skeptical audience. You can always show what happens when using different priors to satisfy the interest of skeptics; that's often called a "sensitivity analysis". Diffuse priors for parameter estimation have little impact on the posterior distribution. But priors for Bayes factors can be highly influential. In any case, strongly informed priors (using prior information everyone agrees on, such as in meta-analysis) can be very helpful to leverage small data sets. 2. For power analysis, when using a distribution of hypothetical values, the power estimate integrates across the uncertainty in the effect size. The difference is that small hypothetical effect sizes inflate the needed N more than large hypothetical effects sizes reduce the needed N. 3. The talk was not intended to contrast frequentist and Bayesian side by side to illustrate their differences. The talk merely tried to show some Bayesian approaches to the issues. For a comparison of frequentist and Bayesian, try this manuscript: ssrn.com/abstract=2606016

John K. Kruschke Thank you. That was very informative. Especially 2 is interesting to me.

That's very interesting! I'm still new to bayesian analysis, but I'm every day more convinced of its advantages. I'm working a lot with INLA, specifically for spatial hierarchical models and I love it! However, I have one question about this video. Is there methods like a power analysis to define the optimal N for adequate precision? In my area, marine ecology, the logistics can be very expensive, so defining a optimal N for the samples would be great when developing projects.

Ops, looks like I skipped this part on the video. I also have the book but haven't reached this part yet!

Thanks for your interest. Hope the book serves you well!

You speak of a BF>3, but in your plot you show log(BF) with a reference line at 1. I assume log means base10 so 10^1 = a BF of 10, not 3? And where does the 3 come from anyway?

+John Doe Actually it's natural log, for which log(3) = 1.1 approximately. The threshold for BF at 3 is just a conventional decision threshold like .05 for p values. Various proponents of BF's suggest different thresholds like 3 or 6 or 10, as applied considerations suggest. All of the details of the examples are in Chapter 13 of DBDA2E.

John K. Kruschke Thank you.

John K. Kruschke When doing random coin tosses I have noticed that sometimes there is a strong bias in the first ~20 trial, despite p=.5. The p value is of course low enough to reject the null, but the bayes factor also is extremely high and the HDI is clearly outside the ROPE. Clearly none of these can be used as a stopping rule.. right? Now one could also include the width of the HDI, but that means I'd need to do at least a few hundred coin tosses to make a conclusion... there's no free lunch I guess.

+John Doe Right, in small samples there is huge variability from one sample to the next. Because of this, some proponents of optional stopping require a minimal sample size before optional stopping is allowed. For example, you have to collect at least 20 data points, and only after that can you check for stopping.

John K. Kruschke Ok, then why do we use θ=0.5 for the null hypothesis, which is like using a betapdf(infinity, infinity) prior for H0? If we, for example, have done 20 flips then why don't we contrast H1 (uniform prior) with a H0 with a prior that is the average posterior of countless 20-flip fair coin experiments with the same uniform prior instead of taking the posterior of an endless run of fair coin flips (which results in the above infinity betapdf)? In my 20 flips example this averaged posterior, that will be used as prior for H0, can be approximated with a betapdf(5.69, 5.69). So using this as prior for H0 I get: The max log bayes factor for 20/20 or 0/20 heads changes from 10.8 to "just" 4.2. And for 15/20 it changes from 1.2 to a barely positive 0.01 At 10/20 it is less negative than with the original H0 .. of course! With just 20 trials there is large uncertainty even with a perfectly fair coin. Does that make sense to you?

Should we really frequent frequentists?

only 5% of the time or less

Frequentists are scum

Beautifully explained. Keep up the good work.

I did not understand why 'shrinkage' of player mean towards group mean is advantageous. Can anyone put light on that?

Hierarchical models are a way of using all the players' data to simultaneously inform each individual player's estimate. The individual estimates get pulled toward the group mode(s). That "shrinkage" attenuates the extremeness of outliers. Assuming that at least some of those outliers were produced by randomly extreme data, the shrinkage has reduced false alarms. This is not to say that any particular hierarchical model is "correct". Merely that hierarchical models are a reasonably way to formally implement the prior knowledge that individuals fall into particular groups. For example, suppose you learn that a player named Varun got 1 hit in 3 at-bats. What is your estimate of Varun's probability of hitting the ball? Now suppose I tell you that Varun is a young kid at a neighborhood playground, or I tell you that Varun is a professional player. Your estimate changes, depending on your knowledge of what other players inform the estimate. Shrinkage pulls the estimate toward the mode of the other players in the group.

John K. Kruschke Thank you very much. That is the entire point of hierarchical models. I understood now. I will be very glad to see an online course in coursera on this topic from you.

Very interesting and thought provoking. Now I need to think of how does this scale up to multivariate cases

I'm enjoying your videos and your book. Have you ever thought about making a mooc about Bayesian methods? U.C. Irvine seems to be producing come courses with coursera already!

That was excellent, thank you!

Dr. Kruschke, will you be publishing your simulation study (the one presented in this part, regarding the stopping decisions)?

Great stuff John. Thanks for putting your time into this clear presentation.

amazing clarity!

Hi Nicole, glad it helped. Thanks for your comment!

Bayesian methods are great for binary data like proportions. The first half of the book (Doing Bayesian Data Analysis) develops all the fundamental concepts of Bayesian analysis using binary data. For count data and chi-square tests, Chapter 22 provides a Bayesian approach and references to further info.

The most recent version of RStudio has changed some of the pull-down menus. In particular, setting the working directory is different than what is shown at 5:35 in this video. In the most recent RStudio, Set Working Directory is under Session, not under Tools.

ruclips.net/video/eKZoQ1ztzQo/видео.html

Hey John, have you tried (r)STAN (mc-stan.org/) already? Should work pretty much as a drop-in replacement for rjags and should yield much better convergence.

Thomas Wiecki Yes, I'm trying it out. From your experience, have you found any hitches in using it? How about in learning to use it? Thanks.

John K. Kruschke So far it's been a pleasure and converting models from JAGS is simple enough. Also, the interaction between R and STAN is much better than rjags, which I find quite annoying at times (e.g. coda).

I much prefer the modelling language in STAN to that in BUGS / JAGS. Explicit data, parameter and model blocks, as well as variable declarations (e.g., floating point or integer, and setting limits) are great. The compiler seems to be a lot more informative as to errors; for example, if you've accidentally passed STAN a data value that's out of range of allowable values it will tell you so (because you coded it in your data declaration). Finally, in my experience models in STAN converge far faster (produce more effective samples in less iterations) than in JAGS. I heartily recommend STAN.

If Python is your tool of choice (rather than R), you may be interested to check out our Python implementation of this based on PyMC. It's available at github.com/strawlab/best .

Is there a version of BEST for Matlab?

I'll check it out. I prefer to use Julia, and would like version of this.

For paired comparisons, each individual has two scores, but you compute the difference and each individual thereby has a single difference score. You then do a one-group version of BEST to estimate parameters for the difference scores (analogous to a 1-group t test). Web links cannot be posted in comments, so for complete info go to the blog "Doing Bayesian Data Analysis" and search for "one group BEST". Thanks!

Thank you very much for BEST. Although this is great for testing the difference between two group, I really don't understand how to apply this in paired comparison tasks.

great stuff - recommend the Happy Puppies book - although we now refer to it as the "dog book"!

the book got introduced to me as "the dogs" book :D

Thank you, the appendix is very helpful. I hope you will keep posting videos on Bayesian methods!

The Bayes factor approach collapses across the parameters in a model, and gives only an overall relative probability of one collapsed model relative to another collapsed model. The Bayes factor is very sensitive to the choice of prior distribution assumed for the two models, but HDIs tend to be robust against changes in mildly informed priors. For details see Appendix D of the JEP:Article and this: Bayesian assessment of null values via parameter estimation and model comparison, at my web site.

Thank you for this very informative video. One thing that I do not entirely understand is how PDFs and HDIs relate to the formula in which the Bayes factor updates the prior probability into a posterior probability. I learned that Bayesian inference is based on the Bayes factor and thought of the prior probability and posterior probability as single values, not distributions. Can you explain how this relates to inferences based on the HDIs and ROPEs?

Thank you for making it from our view, now it seemed to make more since & easier to learn.

best video i've seen you made it really simple!!