- Videos: 76

- Views: 270,991

Gabriel Mongaras

USA

Joined Jan 14, 2021

Just some guy exploring and making videos about current AI topics.

MiniMax-01: Scaling Foundation Models with Lightning Attention

MiniMax-01: arxiv.org/abs/2501.08313

Lightning attention: arxiv.org/abs/2405.17381

Lightning attention v2: arxiv.org/abs/2401.04658

Lightning attention code: github.com/OpenNLPLab/lightning-attention

Huggingface page with models: huggingface.co/papers/2501.08313

Notes (lightning attention): drive.google.com/file/d/1DMPLg-wQ5NywKguB1ZxH0zRvrgIeo0du/view?usp=drive_link

Notes (Minimax): drive.google.com/file/d/1DINkwWd2o-1EVyFPiEu33Q7QYMO6HDUZ/view?usp=drive_link

00:00 Intro

01:30 Linear attention

15:04 Lightning attention

29:11 Lightning attention code and some remarks

34:20 MiniMax

Views: 722

Videos

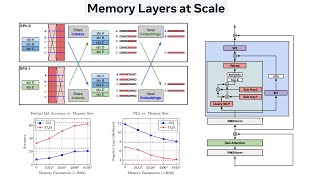

Memory Layers at Scale

843 views, 21 hours ago

Paper: arxiv.org/abs/2412.09764 Code: github.com/facebookresearch/memory Blog: ai.meta.com/blog/meta-fair-updates-agents-robustness-safety-architecture Notes: drive.google.com/file/d/1ClsRm7tHiQZh2GegtF1hwKOqsT6AVwcg/view?usp=sharing 00:00 Intro 05:15 Methodology 20:48 Making this memory efficient 29:55 Parallelization 34:41 Stabilizing and making more performant 37:00 Results 44:14 Conclusion

Byte Latent Transformer: Patches Scale Better Than Tokens

2.1K views, 28 days ago

Paper here: arxiv.org/abs/2412.09871 Code: github.com/facebookresearch/blt Notes: drive.google.com/file/d/1B5BdO9FtmxTJiWwVJ3Wa-v3pqaRdbWMh/view?usp=drive_link drive.google.com/file/d/1BBYwr5botkuvI8CkjarIFNiN6B7uliWr/view?usp=drive_link 00:00 Intro 1:15 Current tokenization strategies 02:48 Methodology 8:08 Patching strategy 15:28 N-gram informed byte encodings 22:09 Encoder, global transforme...

Scaling up Masked Diffusion Models on Text

654 views, 1 month ago

Paper here: arxiv.org/abs/2410.18514 Code: github.com/ML-GSAI/SMDM Notes: drive.google.com/file/d/19n24Quv_ZoLGO8epowB3ImmLHbAeUYWC/view?usp=drive_link 00:00 Intro 06:54 Methodology 21:40 Inference 28:20 CFG 33:40 Results

TokenFormer: Rethinking Transformer Scaling with Tokenized Model Parameters

1.5K views, 2 months ago

Paper here: arxiv.org/abs/2410.23168 Code: github.com/haiyang-w/tokenformer Notes: drive.google.com/file/d/17PsGwefQJoSQxBHykoSFeMrKZhPDFx-E/view?usp=sharing 00:00 Intro 02:48 Methodology 7:54 This is an MLP 10:18 How they change the transformer 16:00 Model scaling 20:48 Results

Round and Round We Go! What makes Rotary Positional Encodings useful?

901 views, 2 months ago

Paper here: arxiv.org/abs/2410.06205 Notes: drive.google.com/file/d/152NPPyNjo-N6MMIaupXacS41BUJgjE5l/view?usp=drive_link 00:00 Intro 01:09 RoPE: Rotary Positional Embeddings 10:37 Notes on RoPE 12:04 Does RoPE decay with distance? 14:14 How are different frequencies used? 17:02 High frequencies: positional attention 21:29 Low frequencies: semantic attention 28:00 p-RoPE 30:36 Thoughts on this ...

Deterministic Image Editing with DDPM Inversion, DDIM Inversion, Null Inversion and Prompt-to-Prompt

1.9K views, 5 months ago

Null-text Inversion for Editing Real Images using Guided Diffusion Models: arxiv.org/abs/2211.09794 An Edit Friendly DDPM Noise Space: Inversion and Manipulations: arxiv.org/abs/2304.06140 Prompt-to-Prompt Image Editing with Cross Attention Control: arxiv.org/abs/2208.01626 00:00 Intro 01:24 Current image editing techniques 11:42 Deriving DDPM and DDIM 23:08 DDIM inversion 32:46 Null inversion ...

Attending to Topological Spaces: The Cellular Transformer

738 views, 5 months ago

Paper here: arxiv.org/abs/2405.14094 Notes: drive.google.com/file/d/12g_KkHqXD6mEDILJzYbCC08i8cDHITfC/view?usp=drive_link 00:00 Intro 01:39 Cellular complexes 07:26 K-cochain 13:26 Defining structure on the cell 20:28 Cellular transformer 34:18 Positional encodings and outro

Learning to (Learn at Test Time): RNNs with Expressive Hidden States

3.3K views, 6 months ago

Paper here: arxiv.org/abs/2407.04620 Code!: github.com/test-time-training/ttt-lm-pytorch Notes: drive.google.com/file/d/127a1UBm_IN_WMKG-DmEvfJ8Pja-9BwDk/view?usp=drive_link 00:00 Intro 04:40 Problem with RNNs 06:38 Meta learning and method idea 09:13 Update rule and RNN inner loop 15:07 Learning the loss function outer loop 21:21 Parallelizing training 30:05 Results

WARP: On the Benefits of Weight Averaged Rewarded Policies

774 views, 6 months ago

Paper here: arxiv.org/abs/2406.16768 Notes: drive.google.com/file/d/11UK7mEZwNVUMYuXwvOTfaqHhN8zSYm5M/view?usp=drive_link 00:00 Intro and RLHF 17:30 Problems with RLHF 21:08 Overview of their method 23:47 EMA 28:00 Combining policies with SLERP 37:34 Linear interpolation towards initialization 40:32 Code 44:16 Results

CoDeF: Content Deformation Fields for Temporally Consistent Video Processing

827 views, 6 months ago

Paper: arxiv.org/abs/2308.07926 Paper page: qiuyu96.github.io/CoDeF/ Code: github.com/qiuyu96/CoDeF My notes: drive.google.com/file/d/10PMKdd5XBd6Y60HlRB9IW9naR2bWziDT/view?usp=drive_link 00:00 Intro 03:00 Method overview 08:40 Method details 15:24 Tricks done for training and how to actually train this thing 19:24 Flow loss and masking 25:10 Conclusion

Mamba 2 - Transformers are SSMs: Generalized Models and Efficient Algorithms Through SSS Duality

10K views, 7 months ago

Paper here: arxiv.org/abs/2405.21060 Code!: github.com/state-spaces/mamba/blob/main/mamba_ssm/modules/mamba2.py Notes: drive.google.com/file/d/1 XGPFeXQyx4CPxgYjzR4qrLd-baLWQC/view?usp=sharing 00:00 Intro 01:45 SSMs 08:00 Quadratic form of an SSM 15:02 Expanded form of an SSM 24:00 Attention - it's all you need?? 29:55 Kernel attention 32:50 Linear attention 34:32 Relating attention to SSMs 38:...

CoPE - Contextual Position Encoding: Learning to Count What's Important

1.5K views, 7 months ago

Paper: arxiv.org/abs/2405.18719 My notes: drive.google.com/file/d/1y9VHZc7MLqc6t2SHHdlVTYeW3czmmRbl/view?usp=sharing 00:00 Intro 02:44 Background 09:58 CoPE 24:50 Code 32:16 Results

NaturalSpeech 3: Zero-Shot Speech Synthesis with Factorized Codec and Diffusion Models

933 views, 7 months ago

Paper: arxiv.org/abs/2403.03100 Demo: speechresearch.github.io/naturalspeech3/ Code: huggingface.co/spaces/amphion/naturalspeech3_facodec My notes: drive.google.com/file/d/1xnzErd_86B6eLwqpLckhoEQKqkxFPyM_/view?usp=drive_link 00:00 Intro 05:34 Architecture overview 18:45 GRL and subspace independence 24:45 Discrete diffusion Model 41:00 factorized diffusion model 44:00 Conclusion and results

xLSTM: Extended Long Short-Term Memory

2.1K views, 8 months ago

Paper: arxiv.org/abs/2405.04517 My notes: drive.google.com/file/d/1wFYvU_1oUWcCNuQ91zTpSGAeNUsPjlt3/view?usp=drive_link 00:00 Intro 05:44 LSTM 13:38 Problems paper addresses 14:12 sLSTM 23:00 sLSTM Memory mixing 27:08 mLSTM 35:14 Results and stuff

LADD: Fast High-Resolution Image Synthesis with Latent Adversarial Diffusion Distillation

1.1K views, 8 months ago

Visual AutoRegressive Modeling: Scalable Image Generation via Next-Scale Prediction

3.7K views, 8 months ago

Leave No Context Behind: Efficient Infinite Context Transformers with Infini-attention

3.8K views, 9 months ago

Mixture-of-Depths: Dynamically allocating compute in transformer-based language models

2.2K views, 9 months ago

Stable Diffusion 3: Scaling Rectified Flow Transformers for High-Resolution Image Synthesis

6K views, 9 months ago

GaLore: Memory-Efficient LLM Training by Gradient Low-Rank Projection

1.1K views, 9 months ago

The Era of 1-bit LLMs: All Large Language Models are in 1.58 Bits and BitNet

6K views, 10 months ago

DoRA: Weight-Decomposed Low-Rank Adaptation

2.2K views, 10 months ago

OpenAI Sora and DiTs: Scalable Diffusion Models with Transformers

13K views, 11 months ago

A Decoder-only Foundation Model For Time-series Forecasting

4.8K views, 11 months ago

Lumiere: A Space-Time Diffusion Model for Video Generation

690 views, 11 months ago

Exphormer: Sparse Transformers for Graphs

467 views, 11 months ago

Medusa: Simple Framework for Accelerating LLM Generation with Multiple Decoding Heads

2.1K views, 11 months ago

In the DDPM inversion section, the "reconstruction" process you are describing aligns more closely with CycleDiffusion than with the approach in the "Edit-Friendly DDPM Noise" paper. In that paper, each x_t is part of a distinct stochastic process, essentially belonging to a different backward/forward trajectory. To quote the paper: "Note that despite the superficial resemblance between (6) and (2), these equations describe fundamentally different stochastic processes. In (2), every pair of consecutive \( \epsilon_t \)’s are highly correlated, while in (6), the \( \tilde{\epsilon_t} \)’s are independent. This implies that in our construction, \( x_t \) and \( x_{t-1} \) are typically farther apart than in (2), so that every \( z_t \) extracted from (5) has a higher variance than in the regular generative process. A pseudo-code of our method is provided in Alg. 1."
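A minimal NumPy sketch of the construction described in the quote, under stated assumptions: a linear beta schedule, σ_t = sqrt(β_t), and a hypothetical `toy_eps_model` standing in for the trained noise predictor. Each x_t is drawn from x_0 with its own independent noise, and the noise map is then extracted as z_t = (x_{t-1} - μ_t(x_t)) / σ_t, which is how we read the quote's extraction step. Running the ordinary DDPM reverse process with these z_t instead of fresh noise lands back on x_0.

```python
import numpy as np


def make_schedule(T, beta_start=1e-4, beta_end=0.02):
    # Assumed linear DDPM schedule; array index i corresponds to timestep t = i + 1.
    betas = np.linspace(beta_start, beta_end, T)
    alphas = 1.0 - betas
    alpha_bars = np.cumprod(alphas)
    return betas, alphas, alpha_bars


def posterior_mean(x_t, i, eps_model, betas, alphas, alpha_bars):
    # Standard DDPM mean of p(x_{t-1} | x_t) under the epsilon parameterization.
    eps = eps_model(x_t, i)
    return (x_t - betas[i] / np.sqrt(1.0 - alpha_bars[i]) * eps) / np.sqrt(alphas[i])


def edit_friendly_inversion(x0, eps_model, T, rng):
    betas, alphas, alpha_bars = make_schedule(T)
    sigmas = np.sqrt(betas)
    # xs[t] = x_t. Every x_t gets its OWN independent noise sample (no shared trajectory).
    xs = [x0]
    for i in range(T):
        noise = rng.standard_normal(x0.shape)
        xs.append(np.sqrt(alpha_bars[i]) * x0 + np.sqrt(1.0 - alpha_bars[i]) * noise)
    # Extract the noise maps that make each reverse step land exactly on x_{t-1}.
    zs = []
    for t in range(1, T + 1):
        mu = posterior_mean(xs[t], t - 1, eps_model, betas, alphas, alpha_bars)
        zs.append((xs[t - 1] - mu) / sigmas[t - 1])
    return xs[T], zs


def regenerate(x_T, zs, eps_model, T):
    # Ordinary DDPM sampling, but using the extracted z_t instead of fresh noise.
    betas, alphas, alpha_bars = make_schedule(T)
    sigmas = np.sqrt(betas)
    x = x_T
    for t in range(T, 0, -1):
        mu = posterior_mean(x, t - 1, eps_model, betas, alphas, alpha_bars)
        x = mu + sigmas[t - 1] * zs[t - 1]
    return x


def toy_eps_model(x, i):
    # Hypothetical stand-in for a trained (prompt-conditioned) noise predictor.
    return 0.1 * x


rng = np.random.default_rng(0)
x0 = rng.standard_normal((4, 4))
x_T, zs = edit_friendly_inversion(x0, toy_eps_model, T=50, rng=rng)
x0_rec = regenerate(x_T, zs, toy_eps_model, T=50)
```

In the paper's setting, edits come from rerunning the reverse process with a different prompt while keeping the extracted noise maps fixed; the toy model here only checks the exact-reconstruction property.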

LOL. Mamba 2 is out

Amazing video as always, thank you so much for sharing with us!

Love the detailed explanation! Keep up the good work

Very useful discussion.

Thanks for the great explanation!

Awesome job in your explanation, thanks!

noicely done

Very interesting. I've been seeing a lot of papers talking about "dynamically allocating compute" and this is a somewhat persuasive method, but I wonder if this couldn't be achieved more effectively just using a MoE where one of the experts happens to be the identity, with an auxiliary loss to encourage it to use the identity if needed.

I do see that they do this in the paper, but I'm confused why this wasn't the approach they present in the paper; the math doesn't require that weird softmax->sigmoid hack to make inference work, and it performs better, so why didn't they include more exploration of that? Plus they have two different variations on MoDE but don't specify which they used for the results they show... it feels strange that the most persuasive thing in the paper for me is such an afterthought.

I do think the proposed method is quite hacky with the sigmoid trick they use. MoDE seems much more natural, and in Fig. 7 it does better than the standard approach they take. Wish they went more in depth into MoDE. Maybe they're working on a future paper with MoDE and that's why they barely included it?

@gabrielmongaras I agree. I had a mental image of them thinking of it at the last moment before publishing and not wanting to throw out all their previous work! But it's maybe more charitable to imagine they wanted to be able to compare against a baseline dense attention layer instead of just a MoE layer.

That's probably more likely lol. Found that it worked better and just put it in as a side note since they would have to redo a lot of parts of the paper.
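For context on the routing being debated here, a toy NumPy sketch of a Mixture-of-Depths layer as we understand it at training time: a scalar router score per token, the top-`capacity` tokens go through the block and have its output scaled by their router score, and every other token skips the block via the residual. `block_fn` and the router vector are hypothetical stand-ins, and the MoDE variant mentioned above is not shown. Because the top-k is taken over the whole sequence, it depends on future tokens, which is why an extra mechanism (like the sigmoid trick discussed above) is needed for autoregressive sampling.

```python
import numpy as np


def mixture_of_depths(x, router_w, block_fn, capacity):
    """Toy Mixture-of-Depths layer (training-time view).

    x:        (T, d) token activations
    router_w: (d,) router projection giving one scalar score per token
    block_fn: the expensive block; here any (k, d) -> (k, d) function
    capacity: number of tokens that get processed; the rest skip the block
    """
    scores = x @ router_w                    # (T,) router score per token
    top = np.argsort(-scores)[:capacity]     # top-k over the whole sequence (non-causal)
    out = x.copy()                           # skipped tokens pass through unchanged
    # Processed tokens: residual + router-scaled block output, so that in a real
    # framework the router would receive gradients through the scaling.
    out[top] = x[top] + scores[top, None] * block_fn(x[top])
    return out, top


rng = np.random.default_rng(0)
x = rng.standard_normal((8, 4))
out, top = mixture_of_depths(x, rng.standard_normal(4), np.tanh, capacity=2)
```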

This is a really interesting method-- one thing I don't quite understand though; you mention that it's independent of sequence length. How is that the case? I'm unclear on how you could generate a sequence without knowing the length going in. I'm also curious about conditional sampling-- It seems like if you want to seed part of the input, you need to know its exact position in the input sequence. Is there any way to, for example, seed that the word "cat" must appear *somewhere* in the input sequence, but at an unknown location? ...maybe a way to do that is to allow the model to pad [max_sequence_length -1] at the start and end of each string... Feels like there's got to be a better solution though.

By "independent of sequence length" I was more so talking about the inference time. That is, it could require the same number of steps to generate a sequence of length 5 as it does for a sequence of length 100, theoretically. You're right about needing to know the sequence length before going in; that's how you initialize the noise for the model to process. It seems the only way to do conditional sampling is by putting tokens in arbitrary locations. One can think of this as starting from an intermediate step in the diffusion process, thus imposing a conditional generation on that token. So it'll likely generate a coherent sequence around whatever token you place in whatever location you place it. I would think of it more as a soft position. Like, you have to assign the position of the seeded words an integer value, but the sentence will be built around it in some way. It doesn't really need to be the location of the word in the sentence, since the model builds around the seeded word. As for wanting just the word "cat", I suppose one could increase the likelihood of said word being generated, but besides that I cannot think of a way unless you know the general location of the word. A much less mathematical and satisfying method is that you could also prompt it by making the first few tokens "the following is about a cat: ". In the future, conditional generation based on a prompt could lead to much better results.
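A toy sketch of the sampling loop described in this reply, assuming a hypothetical `logits_fn` standing in for the trained model, greedy argmax decoding, and a simple schedule that unmasks an equal share of the remaining masked positions per step (the paper's actual schedule and remasking strategy may differ). It shows the two points from the reply: `num_steps` is chosen independently of `length`, although the length must be fixed up front, and conditioning amounts to placing seed tokens at chosen positions before the first step.

```python
import numpy as np

MASK = -1  # placeholder id for a masked position (assumption)


def sample_masked_diffusion(logits_fn, length, num_steps, seed_tokens=None, rng=None):
    if rng is None:
        rng = np.random.default_rng(0)
    x = np.full(length, MASK)                 # start fully masked: the length is fixed here
    for pos, tok in (seed_tokens or {}).items():
        x[pos] = tok                          # seeded tokens are fixed from the start
    for step in range(num_steps):
        masked = np.flatnonzero(x == MASK)
        if masked.size == 0:
            break
        # Unmask an equal share of what is left, so the last step clears everything.
        n_unmask = int(np.ceil(masked.size / (num_steps - step)))
        chosen = rng.choice(masked, size=n_unmask, replace=False)
        logits = logits_fn(x)                 # (length, vocab) scores for every position
        x[chosen] = logits[chosen].argmax(axis=-1)
    return x


def toy_logits(x):
    # Hypothetical model that always prefers token 3.
    logits = np.zeros((x.shape[0], 5))
    logits[:, 3] = 1.0
    return logits


out = sample_masked_diffusion(toy_logits, length=10, num_steps=4, seed_tokens={2: 1})
```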

Last hope for nerds like us 🙏🏻

I might have misunderstood, but could you explain why e (the dimension of K, V, Q and z_t) < d (the dimension of x_t)? Am I correct in thinking that e should be the same as d because we want each RNN layer to be the same size?

The goal of the internal RNN is to learn a compression task. If we use low-rank projections, it forces the model to choose what information to remember rather than attempting to keep all information. By down projecting, the model can control the corruption of the input, x_t, in some way for the internal loop to learn, and thus store in its hidden state. Intuitively, the K down projection corrupts the input x_t and the V down projection informs the inner loop what to remember.

@gabrielmongaras Thanks for the reply! I get the intuition of down projection. But my follow-up question is: if K_t and V_t have dim e, and e is smaller than d, which is the dim of x_t, then f(_, W_t) is a mapping from dim e to dim e. Then z_t = f(Q_t, W_t) also has dim e. But to maintain the same size for each RNN layer, z_t is supposed to have the same dim as x_t. How is this difference resolved? In addition, why does it seem that z_t = f(θ_Q * x_t; W_t) has the same dimension as x_t in Equation 5 of the paper: "Lastly, the training view θ_K * x_t has fewer dimensions than x_t , so we can no longer use the output rule in Equation 1. The simplest solution is to create a test view θ_Q * x_t , and change our output rule to: z_t = f(θ_Q * x_t ;W_t)." ?

This is one thing I think is very ambiguous in the paper. If θ_Q * x is of dimension e and W_t is of dimension e x e, the output z_t would be of dimension e, as shown in equation (5). Looking at the pseudocode, you will also get that the output, z_t, is of dimension e, not d. I remember looking at their code when the paper came out and not getting a good idea of how they got around this issue. Taking another scan of their code, it looks like they get around this issue by setting e = d, which is really lazy. If you change the width so that e < d, the code doesn't work. In practice, I would imagine making the output projection an e-->d projection rather than the usual d-->d projection. Now that you point it out, I am curious whether the experiments they ran were even on e < d. I know that RWKV-7 uses a similar idea and that it has good results. Taking a look at their code, I think they also have e = d. Perhaps the down projection isn't needed, though I would imagine this could run into issues since the model can make the corruption trivial, not updating the hidden state; but perhaps this is also a good thing if a token should not be stored at all. Nonetheless, they should've mentioned this in the paper/code.
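A minimal sketch of the inner loop discussed in this thread, with e < d. Assumptions: a linear inner model f(k; W) = W k (TTT-Linear style, omitting any normalization or residual the paper may use inside f), one gradient step per token with no mini-batching, and the e-->d output projection suggested in the reply. `W_out` is hypothetical and not taken from the paper's code.

```python
import numpy as np


def ttt_linear_layer(X, theta_K, theta_V, theta_Q, W_out, lr=0.1):
    """Toy TTT-Linear layer with e < d.

    X: (T, d) inputs; theta_K, theta_V, theta_Q: (e, d) down projections;
    W_out: (d, e) hypothetical output projection back up to width d.
    """
    T = X.shape[0]
    e = theta_K.shape[0]
    W = np.zeros((e, e))                     # hidden state = weights of the inner model
    Z = np.zeros((T, e))
    for t in range(T):
        k = theta_K @ X[t]                   # training view (corrupted input)
        v = theta_V @ X[t]                   # reconstruction target
        q = theta_Q @ X[t]                   # test view
        grad = 2.0 * np.outer(W @ k - v, k)  # gradient of ||W k - v||^2 w.r.t. W
        W = W - lr * grad                    # one inner-loop step per token
        Z[t] = W @ q                         # z_t = f(theta_Q x_t; W_t), width e
    return Z @ W_out.T                       # e -> d so the layer keeps the residual width


rng = np.random.default_rng(0)
T, d, e = 6, 8, 4
X = rng.standard_normal((T, d))
theta_K, theta_V, theta_Q = (rng.standard_normal((e, d)) for _ in range(3))
W_out = rng.standard_normal((d, e))
out = ttt_linear_layer(X, theta_K, theta_V, theta_Q, W_out)
```

With `theta_V` set to zero there is nothing to reconstruct, so the hidden state never moves and the layer outputs zeros; this mirrors the reply's point that a trivial corruption/target pair means the hidden state is never updated.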

Awesome video! I think that at 27:10 you got the data mixture percentages wrong. It is not 40% real data / 60% synthetic data but 80% real data / 20% synthetic data: "The training loader samples 80% real data and 20% synthetic, with the real data mixture providing equal weights to the groups: hourly + sub-hourly, daily, weekly, and monthly datasets".
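To make the quoted proportions concrete: with 20% synthetic data and the remaining 80% split equally over the four real-data groups, each real group is also sampled 20% of the time. A small sketch of such a sampler, assuming one source choice per training example (the paper's loader may batch or shuffle differently).

```python
import random


def make_mixture_sampler(real_groups, synthetic_frac=0.2, seed=0):
    rng = random.Random(seed)
    real_frac = (1.0 - synthetic_frac) / len(real_groups)  # equal weight per real group
    sources = ["synthetic"] + list(real_groups)
    weights = [synthetic_frac] + [real_frac] * len(real_groups)

    def sample():
        # Pick which source the next training example is drawn from.
        return rng.choices(sources, weights=weights, k=1)[0]

    return dict(zip(sources, weights)), sample


groups = ["hourly + sub-hourly", "daily", "weekly", "monthly"]
probs, sample = make_mixture_sampler(groups)
```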

Interesting

Thanks for covering this paper. Unfortunately they are a bit late to the game, since this is essentially a less impressive version of Tokenformer (all of their results are consistent with the results from that paper). Interestingly, Tokenformer also found that softmax was not a good solution for the parameter experts, instead using something based on GeLU, and showed that all linear layers could be replaced with this "memory" layer rather than only focusing on a select few (maybe this paper saw degradation due to the shared token weights?). Also, the authors didn't really provide convincing evidence for the benefits of their sparsity scheme. I get the idea of the reparameterization, but they can only claim the 1.3B active parameters per token. Summarizing a large input sequence or continuous batching could at worst require all 128B parameters to be touched for a single forward pass (this is a problem with MoE models - they really only look good on paper). Perhaps there is a tradeoff that can be made (like you suggested) with MoE and this sparse token formulation, where sparse tokens are shared amongst a batch (sort of like a capacity objective)? As for the "skip connection", that's essentially a GLU, which is expected to perform better in most cases (it can perform worse for some diffusion models due to interference).

I agree that this is basically a less impressive version of TokenFormer, however I think there are some parts that add value still, such as the low-rank split and the way they shard across devices. I think the main thing is, as you said, the results between the two papers are consistent, which shows that this idea works! (though it's just an MLP with a subset number of output functions). I really don't like how both these papers formulate this block as an attention block since it's an MLP. Looking at it from an MLP point of view, it makes a lot of sense GELU would be better than softmax, as softmax is quite bad for hidden activation functions. I also agree, I think the reason they cannot replace all layers is due to the shared weights! Looking at the formulas, there's a connection between an MLP (dynamic queries, no dynamic values), MoE (dynamic queries, dynamic values, subset keys/values), this/TokenFormer (dynamic queries, no dynamic values, subset keys/values), and attention (dynamic all, no subsetting). I'm thinking there's some combination of these block types that would work best.

@gabrielmongaras I agree, although I expected a more significant contribution given that this paper was from FAIR. If it were from a university, then I would hold it to a lower standard. I don't think there is anything wrong with unifying the concepts as attention, although it would probably be more accurate for the authors to refer to them as KV stores (attention is a dynamic KV lookup). This similarity breaks when considering GLU though. The interesting thing about softmax is that even though it's not a good hidden activation function, it still works. In fact, you can remove the FFNs from a transformer and it will still "work", just not nearly as well (I accidentally made this mistake with a ViT - a typo). Regarding combining the blocks, the key will probably be finding a hardware-aware approach which works well for training, metrics, and throughput / latency. In diffusion for example, time MoE is a smarter choice than spatial MoE. But sharing a fixed spatial knowledge bank across spatial and time dims could work (assuming there's an upper bound for a fixed resolution). It's harder to make such comparisons with AR decoders though, since the sequence length is unbounded.
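For readers following the KV-store framing in this thread, a minimal sketch of a sparse memory read: score a query against N learned keys, keep only the top-k, softmax over those k, and mix the matching values. This is a plain top-k lookup over all keys, chosen for brevity; the memory-layers paper uses product keys precisely to avoid scoring every key, and the GLU-style gating discussed above is omitted.

```python
import numpy as np


def topk_memory_read(q, keys, values, k):
    """q: (e,), keys: (N, e), values: (N, d_v). Returns a (d_v,) output and the chosen indices."""
    scores = keys @ q                             # (N,) similarity to every memory key
    idx = np.argpartition(-scores, k - 1)[:k]     # indices of the k largest scores
    s = scores[idx]
    w = np.exp(s - s.max())
    w /= w.sum()                                  # softmax over the selected k only
    return w @ values[idx], idx


keys = np.eye(4)
values = np.arange(8.0).reshape(4, 2)
out, idx = topk_memory_read(np.array([3.0, 2.0, 1.0, 0.0]), keys, values, k=2)
```

Only k rows of `values` are touched per query, which is the "active parameters" argument; the concern raised above is that across a long sequence or a large batch the union of selected rows can still cover most of the memory.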

@gabrielmongaras I am really interested in the connection you point out (which I first really understood from watching your TokenFormer video), and even though pattention is "just" an MLP, it is a really interesting way to think of it. Are you aware of work that explores these connections more? Like, is there some uberformula that can capture all the variants as special cases? Since there is this dichotomy of dynamic vs static keys and values between MLP and attention, it then seems like you could (in the uberformula) maybe do a mixture like 75% dynamic and 25% static. Maybe a first pass is sigma((QAx + Q')(KBx + K'))(VCx + V'), where the primes are the static values and A, B, C select a subset of tokens. The static matrices might be better combined with concat than +, but with + they would be zero padded either in the hidden or token dimension appropriately. Hmm idk, that all looks wrong but I'll leave it for fun. Thanks for making these paper videos, they are an awesome resource

I do not know of a current work that looks at these connections. Most seem to say they take the attention architecture and manipulate it, but both this paper and the TokenFormer one make it seem like a new architectural block rather than showing it's mathematically equivalent to an MLP. I suppose the uberformula is a three-way matrix multiplication: O = f(Q(x) @ K(x).mT) @ V(x).

Attention has all three as a function of the input: Q(x) = W_Q @ x, K(x) = W_K @ x, V(x) = W_V @ x, with f = softmax.
Standard MoE has static keys (the router): K(x) = W_K, with f = softmax, and tokens are routed to different V functions depending on the output of f(Q(x) @ K).
A transformer MLP (no GLU) has static keys and values: K(x) = W_K, V(x) = W_V, with f = silu.

If we add GLU, then we would get something a little different, but as @hjups mentioned above, GLU is not always an improvement. Attention is good for routing info between tokens, while MLPs are more useful for fact retrieval and dimension interactions. Since there is this notion of a somewhat unified formula, I am curious whether there is a mix of blocks of this type that is optimal, rather than the normal Attn, MLP, Attn, MLP, ...
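A small NumPy sketch of the three-way formula O = f(Q K^T) V from this reply, written with tokens as rows. It instantiates two of the listed cases: attention (Q, K, V all computed from x, f = softmax, no causal mask) and a plain MLP (query is x itself, keys and values are static weight rows, f = silu). The MoE case is left out because routing replaces the dense multiply by V. All weights are random placeholders.

```python
import numpy as np


def softmax(z):
    z = z - z.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def silu(z):
    return z / (1.0 + np.exp(-z))


def three_way(Q, K, V, f):
    # O = f(Q K^T) V with one row per token
    return f(Q @ K.T) @ V


rng = np.random.default_rng(0)
T, d, h = 5, 4, 16
x = rng.standard_normal((T, d))
W_Q, W_K, W_V = (rng.standard_normal((d, d)) for _ in range(3))
W_up, W_down = rng.standard_normal((h, d)), rng.standard_normal((h, d))

# Attention: queries, keys, and values all depend on x.
attn = three_way(x @ W_Q.T, x @ W_K.T, x @ W_V.T, softmax)

# MLP (no GLU): keys = rows of the up-projection, values = rows of the down-projection.
mlp = three_way(x, W_up, W_down, silu)
```

The MLP line is literally silu(x W_up^T) W_down, i.e. the usual up-projection, activation, down-projection, which is the equivalence the reply says these papers do not spell out.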

@gabrielmongaras Related: "Understanding and Improving Transformer From a Multi-Particle Dynamic System Point of View" and "Improving Transformer Models by Reordering their Sublayers".

Thinking about this again, another way to phrase the mixture I was thinking of would be like TokenFormer, but instead of only using static "tokens" (rows of W_K) for the cross attention, concat W_K' to X (so the sequence length goes from T to T+N, where W_K' has N rows), and likewise for W_V'. All the queries are dependent on X. The first T keys are dependent on X, but the last N are not. The first T values are dependent on X, but the last N are not. The attention mask would leave the last N columns of the T+N unmasked for all tokens.

With N=0 and a standard attention mask, you recover self attention.
With nonzero N and an attention mask with the left-hand (T,T) part all zeroed/-inf, you recover an MLP.
With a standard attention mask and nonzero N, you get (as you vary the ratio T/N) some combination of the two:

f((W_Q @ x) @ concat(W_K @ x, W_K')) @ concat(W_V @ x, W_V')

This is only a mix of attention and MLP. To get a mixture with MoE you would need static keys and non-static values, like

f((W_Q @ x) @ concat(W_K @ x, W_K', W_K'')) @ concat(W_V @ x, W_V', W_V'' @ x)

so then the only key/value pattern missing among dynamic/dynamic, static/static, and static/dynamic is dynamic/static, giving

f((W_Q @ x) @ concat(W_K @ x, W_K', W_K'', W_K''' @ x)) @ concat(W_V @ x, W_V', W_V'' @ x, W_V''')

(transpose the K term appropriately in all of the above)
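A NumPy sketch of the attention/MLP mixture proposed in this comment: N static key/value "tokens" are concatenated after the T input-dependent ones, f = softmax, and the mask decides which regime you get. The weights are random placeholders, and the "MLP" case here is an MLP whose activation is softmax (pattention-style), as the comment implies. The MoE-style variants with dynamic values are not covered.

```python
import numpy as np


def softmax(z):
    z = z - z.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def mixed_block(x, W_Q, W_K, W_V, K_static, V_static, self_tokens=True):
    """softmax((W_Q x) @ concat(W_K x, W_K')^T) @ concat(W_V x, W_V').

    x: (T, d); W_Q, W_K, W_V: (e, d); K_static, V_static: (N, e) learned "tokens".
    self_tokens=False masks out the whole (T, T) block, leaving only the static tokens.
    """
    T, N = x.shape[0], K_static.shape[0]
    Q = x @ W_Q.T
    K = np.concatenate([x @ W_K.T, K_static])       # (T + N, e)
    V = np.concatenate([x @ W_V.T, V_static])       # (T + N, e)
    mask = np.ones((T, T + N), dtype=bool)          # static columns always visible
    mask[:, :T] = np.tril(np.ones((T, T), dtype=bool)) if self_tokens else False
    scores = np.where(mask, Q @ K.T, -np.inf)
    return softmax(scores) @ V


rng = np.random.default_rng(0)
T, d, e, N = 4, 3, 3, 6
x = rng.standard_normal((T, d))
W_Q, W_K, W_V = (rng.standard_normal((e, d)) for _ in range(3))
K_s, V_s = rng.standard_normal((N, e)), rng.standard_normal((N, e))

attn = mixed_block(x, W_Q, W_K, W_V, np.zeros((0, e)), np.zeros((0, e)))  # N = 0: causal self-attention
mlp = mixed_block(x, W_Q, W_K, W_V, K_s, V_s, self_tokens=False)          # static tokens only
mix = mixed_block(x, W_Q, W_K, W_V, K_s, V_s)                              # both at once
```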

This paper is fascinating-- thank you for the breakdown! This MoE-like approach for long-term memory is very compelling, but I'd love to see something that can use this type of scalable sparse memory for its active memory / online learning. I've been thinking about the idea of something similar to the "Learning to (learn at test time)" paper, but using something like a MoE layer or this sparse memory block as the internal memory module.

I think it would be cool to see how a model like that performs! Having learnable dynamic compute on a hidden state or a hidden state that updates some larger memory and extracts from that larger memory. I know the latest RWKV is using the idea from "Learning to (learn at test time)" so this approach could be promising.

@gabrielmongaras ooh I haven't read RWKV yet, I'll have to take a look.

@nathanwatts5720 I don't think there's a modern RWKV paper at the moment. The old versions of RWKV are mid but the new versions seem a little interesting. However (to the best of my knowledge) we only have the website and code to go off of. Would love to cover RWKV when a new paper comes out! www.rwkv.com/

Wow

These detailed paper explanations are really helpful. Keep doing this please.

Thanks!!!

Dear Gabriel, I have been following your paper readings recently and I am amazed how much math you have simplified and explained so well. Can you provide any advice on reading and understanding research papers? Thank you.

I mainly read papers from beginning to end the way they're written.
1. Read the abstract and see what's going on. I usually have a somewhat decent idea of how they may be doing the things in the abstract.
2. Look at related work if there's something I'm not too familiar with, but I mostly skip this part.
3. Look for pictures and formulas. Every time there's an important formula, I either find a picture related to it or draw one out. I also usually understand how things are working via how the shapes are manipulated, so I try to write those out. Any big formulas that look like a mess, I just break down from the inside out (for example, break down what's inside a summation, then see what the big picture is including the summation).
4. If there's code, I will look at that. Sometimes it can clear up ambiguous problems left in the paper.
5. Finally, I look at the results (which I may look at first to see if the paper is even worth reading). Mainly, how much did they scale their architecture, and on what datasets? Ask questions about the results. For example, is the paper trying to deceive you with its axes in some way? Are the datasets actually meaningful?
Hope this helps!

@gabrielmongaras Thanks a lot for your detailed response. This will definitely help. Your work is unique for me.

I don't get how they actually generate tokens with their transformer. In a normal transformer, your end result is each token predicting the next token. But here it's each token predicting what? An entire high-resolution image? And how is this happening in parallel? Is each token in the next resolution not attending to its own tokens? How does each token in the next resolution know what position it's in? Is it each token in the previous resolution predicting its 4 closest patches in the next resolution? This architecture is nothing like a traditional transformer and they spend no time explaining the difference. Very poorly written paper in my opinion, however good their benchmarks may be.
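As we understand the VAR paper, the "in parallel" part comes from a block-wise causal attention mask: all token maps are flattened coarse-to-fine into one sequence, and every token of scale k is predicted at once while attending only to scales up to k. In that reading, the inputs at scale k's positions come from upsampling the previous scale, and position comes from learned position and scale embeddings; neither is shown here. A sketch of just the mask, assuming square token maps.

```python
import numpy as np


def var_block_causal_mask(side_lengths):
    """Block-causal mask over a flattened multi-scale token sequence.

    side_lengths: e.g. [1, 2, 3] for token maps of 1x1, 2x2 and 3x3 (assumed square).
    mask[i, j] is True when token i may attend to token j.
    """
    sizes = [s * s for s in side_lengths]
    scale_of = np.repeat(np.arange(len(side_lengths)), sizes)  # scale index of every token
    # A token sees every token at its own scale and at all coarser scales, never finer ones.
    return scale_of[None, :] <= scale_of[:, None]


mask = var_block_causal_mask([1, 2, 3])
```

Unlike a token-level causal mask, tokens within one scale see each other, which is what lets a whole scale be produced in one forward pass.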

Very helpful. Thanks!

Thank you for your interpretation. It helped me better understand this paper. Btw, could you share which PDF annotation app you’re using on your iPad? It looks quite handy.😆

The most detailed and easiest-to-follow presentation of this paper! Thank you

Can you share code implementation of DiT?😢

I think I forgot to add it to the comments. Here's the official repo for the DiT paper: github.com/facebookresearch/DiT

I want to mention something about inference that I didn't explain well in the video. For inference, the patches are causal (take a look at figure 2 in the paper). This means the ith patch needs to make predictions for the (i+1)th patch after being passed through the latent transformer. Thus when doing inference, multiple bytes are decoded for a single patch (basically decode until high entropy if using the high entropy method).

Let me give an example:
Let our sequence be "a b c d e f"
let patch 1 (P1) correspond to "a b c"
let patch 2 (P2) correspond to "d"
let patch 3 (P3) correspond to "e f"
(note that this means a, d, e, and the byte after f are high entropy)

After the encoder, we have a sequence [P1, P2, P3] (as well as encoder hidden states [Ea Eb Ec Ed Ee Ef]). This means we are training the latent transformer to learn the map [P1, P2, P3] --> [P'2, P'3, P'4], where P'N is used to predict PN via the decoder, just like in a normal causal transformer (except we map patches to logit patches rather than tokens to logit tokens, and we use a large decoder to decode these logits rather than an unembedding layer). The decoder then receives [P'2, P'3, P'4] and maps this to:
P'2 --> "d"
P'3 --> "e f"
P'4 --> (I never defined this but you get the idea)

Thus for inference, let's say we have "a b c d". We then patchify like above via the encoder. The encoder has two outputs:
1. "a b c d" --> ["a b c", "d"] = [P1, P2]
2. [Ea Eb Ec Ed]
We pass [P1, P2] through the latent transformer to get [P'2, P'3]. We already have P2, so we just use P'3. We then pass P'3 through the decoder along with the output of the encoder [Ea Eb Ec Ed] to get a prediction for the next byte on the last token (the transformed version of Ed after sending it through the decoder with P'3). This gives us a prediction of "e" (which is low entropy, thus we keep P'3).

We then pass "a b c d e" through the encoder again, which gives us:
1. [P1, P2, P3], which is useless since the last prediction was low entropy
2. [Ea Eb Ec Ed Ee]
Reusing P'3, we pass [Ea Eb Ec Ed Ee] through the decoder. We then unembed the decoder output corresponding to "Ee", which gives us "f". This is low entropy again, so we reuse P'3 one more time. The last iteration predicts "???" using P'3 (whatever is after "f"), which is high entropy, thus we restart the entire loop, create P'4 (from the bytes "e f"), and begin decoding from there.

Note that this means the decoder cross attention mask is the transpose of the encoder cross attention mask, shifted over by one patch. In the example above, P1 would be receiving information from bytes "a b c". After the encoder we then have [Ea Eb Ec] and P'2. P'2 is sending information to Ed (the encoder output of "d"), which is informing Ed how to decode "e". P2 would be receiving information from byte "d", and the output of the latent transformer is P'3. This patch then sends information to Ee and Ef to inform these bytes how to decode Ee --> "f" and Ef --> "???". Basically each latent patch prediction informs how to decode the rest of the patch and the first token of the next patch.

The decoder mask (which latent patch each byte position attends to) looks like this:
Ea: none --> decodes to "b" of P1
Eb: none --> decodes to "c" of P1
Ec: none --> decodes to "d" of P2 - this is high entropy, end patch 1
Ed: P'2 --> decodes to "e" of P3 - this is high entropy, end patch 2
Ee: P'3 --> decodes to "f" of P3
Ef: P'3 --> decodes to "???" of P4

whereas the encoder mask (which bytes each patch pools) is:
P1: Ea Eb Ec
P2: Ed
P3: Ee Ef
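The patching in the example above can be sketched in a few lines, assuming a simple global entropy threshold: a new patch opens at every byte whose next-byte entropy (from a small byte-level model) exceeds the threshold. The entropy values below are made up so that "a", "d" and "e" are the high-entropy bytes, reproducing P1 = "a b c", P2 = "d", P3 = "e f" and the encoder mask above.

```python
import numpy as np


def entropy_patches(entropies, threshold):
    """Split byte positions into patches, opening a new patch at each high-entropy byte.

    entropies: per-byte entropy from a small byte-level model (made-up values here).
    Returns a list of patches, each a list of byte indices.
    """
    patches = []
    for i, h in enumerate(entropies):
        if i == 0 or h > threshold:
            patches.append([i])      # high entropy: this byte starts a new patch
        else:
            patches[-1].append(i)    # low entropy: extend the current patch
    return patches


def encoder_cross_mask(patches, num_bytes):
    # mask[p, b] is True when patch p pools information from byte b
    mask = np.zeros((len(patches), num_bytes), dtype=bool)
    for p, idx in enumerate(patches):
        mask[p, idx] = True
    return mask


# "a b c d e f" with high entropy at a, d and e (illustrative values only)
entropies = [2.0, 0.1, 0.2, 1.5, 1.8, 0.3]
patches = entropy_patches(entropies, threshold=1.0)
enc_mask = encoder_cross_mask(patches, num_bytes=6)
```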

Thanks for the explanation, but I still don't get it. We train the "global, big GPT-like transformer" to represent the i-th patch, but at inference we're trying to predict the (i+1)-th patch?

Think of the big global transformer as a "next token predictor" but instead of that token being decoded into the next word/word piece, it's decoded into a sequence of bytes. The encoder and decoder are similar to the embedding and unembedding layer to encode/decode a token.

Thanks for great summary and explanation!

This model produces one byte at a time autoregressively. Won't that make inference very slow?

You're right that naive byte-by-byte decoding would make inference very slow. I added a comment to the video detailing how inference is run. I think it shows that inference is pretty fast, since the latent transformer does next-patch prediction and each patch latent is decoded into many bytes. If, on average, patches are larger than tokens, it will probably be more efficient than a normal token-wise transformer (since the encoder/decoder are relatively lightweight).
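To see why the average patch size matters, here is a back-of-the-envelope Python sketch using the common rule of thumb of about 2 FLOPs per parameter per position (attention cost ignored). All model sizes and bytes-per-unit figures below are hypothetical, chosen only for illustration.

```python
def forward_flops(n_bytes: float, bytes_per_global_step: float,
                  global_params: float, local_params: float = 0.0) -> float:
    """Rough forward-pass FLOPs: ~2 FLOPs per parameter per position, attention ignored.

    The big model runs once per token/patch; the small local encoder/decoder runs once per byte.
    """
    global_positions = n_bytes / bytes_per_global_step
    return 2 * global_params * global_positions + 2 * local_params * n_bytes

# Illustrative numbers only: an 8B big model and 0.5B of local encoder/decoder parameters.
token_model = forward_flops(1000, 4, 8e9)                # ~4 bytes per token, no local model
blt_big_patches = forward_flops(1000, 8, 8e9, 0.5e9)     # patches longer than tokens
blt_small_patches = forward_flops(1000, 3, 8e9, 0.5e9)   # patches shorter than tokens
```

With these numbers the patch model is cheaper (3e12 vs 4e12 FLOPs per 1000 bytes) only when patches are longer than tokens; with 3-byte patches the extra big-model steps plus the per-byte local work make it more expensive.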

@gabrielmongaras I think this is what is happening: once the big transformer produces the next patch, the small decoder produces the next byte; you feed it autoregressively back into the small encoder and give its output to the small decoder, skipping the big transformer, until the bytes of the produced patch are exhausted (in some sense).

Yep! The way I think about it is the latent transformer is the "normal" transformer in a text generation sense. The encoder and decoder act like the embedding and unembedding matrices. The transformer is sort of a "next patch" predictor. The ith patch is transformed into a latent used to predict the (i+1)th patch, like how in a normal transformer the ith token is transformed into a latent used to predict the next token. However, the "next patch latent" is used to autoregressively predict next bytes (via the encoder and decoder), like you mention, until it reaches a high entropy byte. From my current understanding, this means the ith patch is transformed into a latent that predicts the bytes of the (i+1)th patch and the first byte of the (i+2)th patch as you don't know a patch has ended until you hit this high entropy prediction.
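A rough Python sketch of that loop, under the reading that a high-entropy byte is decoded with the old latent and then opens the next patch (this matches the decoder mask in the author's inference comment, where P'2 informs Ed and P'3 informs Ee and Ef). latent_step and decode_next are hypothetical placeholders, not BLT functions; the toy stand-ins at the bottom only exist to make the control flow runnable.

```python
from typing import Callable, List, Tuple

def generate_bytes(
    prompt_patches: List[bytes],
    latent_step: Callable[[List[bytes]], object],
    decode_next: Callable[[bytes, object], Tuple[int, float]],
    threshold: float,
    max_new_bytes: int,
) -> Tuple[List[bytes], int]:
    """One latent-transformer call per patch, one cheap local step per byte.

    latent_step: hypothetical stand-in for the big latent transformer; maps closed
                 patches P1..Pk to the latent P'(k+1).
    decode_next: hypothetical stand-in for local encoder + decoder; returns the next
                 byte and the entropy of that prediction.
    """
    closed = list(prompt_patches[:-1])          # finished patches fed to the latent transformer
    open_patch = bytearray(prompt_patches[-1])  # the last prompt patch may still grow
    latent = latent_step(closed)
    global_calls = 1
    for _ in range(max_new_bytes):
        context = b"".join(closed) + bytes(open_patch)
        byte, ent = decode_next(context, latent)  # reuse the current latent
        if ent > threshold and open_patch:
            # High-entropy byte: close the open patch; this byte starts the next one,
            # so run the latent transformer once more for the next patch latent.
            closed.append(bytes(open_patch))
            latent = latent_step(closed)
            global_calls += 1
            open_patch = bytearray([byte])
        else:
            open_patch.append(byte)
    return closed + [bytes(open_patch)], global_calls

# Toy stand-ins: the "latent" is the number of closed patches, and the "decoder" reads the
# next byte of a fixed target, reporting high entropy when predicting "e" and "g".
target = b"abcdefgh"
log = []

def toy_latent(patches):
    return len(patches)

def toy_decode(context, latent):
    byte = target[len(context)]
    log.append((chr(byte), latent))
    return byte, (1.0 if len(context) in (4, 6) else 0.0)

patches, calls = generate_bytes([b"abc", b"d"], toy_latent, toy_decode, 0.5, 4)
```

Starting from "a b c | d", the log shows "e" decoded with latent 1 (P'2), "f" and "g" with latent 2 (P'3), and "h" with latent 3 (P'4), so every latent-transformer call is shared by all bytes decoded until the next high-entropy byte.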

Thank you <3

In the decoder cross-attention, is there any masking like in the encoder cross-attention?

Since the decoder is sending information from patches to tokens instead of tokens to patches, you would want to mask it such that every token gets information from the patch that contains that token. This would be the transpose of the encoder mask.

12:30 They denote the patches via the vertical dashed lines. The patches are: SOT a e n erys_Targaryen_ is_ in_ G a me_ of_Thrones (and so on). Notably, the example a little above it actually has a slightly different patch set, so I'm guessing the horizontal red dashed line (the cutoff) is placed differently for the example right above the one shown in Figure 4. For instance, in that example the n of Targaryen already has too high an entropy and therefore gets its own patch, so the cutoff must have been set a bit lower there. The rule, as I understand it, is simply: if the current entropy for the byte pair is higher than some fixed cutoff given by the red dashes, start a new patch on the next byte pair.
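The fixed-cutoff rule described here can be sketched in Python as below. The entropy values are made up for illustration (in BLT they come from a separate small byte-level model), and indexing entropies[i] as "entropy of the prediction for byte i" is my assumption about how the next byte lines up with the cutoff.

```python
import math
from typing import List, Sequence

def entropy(probs: Sequence[float]) -> float:
    """Shannon entropy (in nats) of a next-byte distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)

def patch_by_entropy(data: bytes, entropies: Sequence[float], threshold: float) -> List[bytes]:
    """Split data into patches with one global entropy cutoff.

    entropies[i] is the entropy of the small model's prediction of data[i] given data[:i];
    a byte whose prediction is above the cutoff starts a new patch.
    """
    patches: List[bytearray] = []
    for i, b in enumerate(data):
        if not patches or entropies[i] > threshold:
            patches.append(bytearray())
        patches[-1].append(b)
    return [bytes(p) for p in patches]

# Hypothetical entropies for "abcdef": "d" and "e" were hard to predict.
toy_entropies = [2.0, 0.1, 0.1, 1.5, 1.2, 0.3]
patches = patch_by_entropy(b"abcdef", toy_entropies, threshold=1.0)
```

This yields "abc | d | ef". Lowering the cutoff (e.g. to 0.2) splits off more single-byte patches, which is the kind of effect described for the n in Targaryen.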

This is exactly the explanation I need!

I agree it's the best explanation

What is CFG?

Classifier-free guidance. It's mainly a method for getting good quality out of diffusion models. It basically emphasizes the given class or text by some amount, c, over the null prediction the model would have made without the class information, making your image more representative of the given class. arxiv.org/abs/2207.12598 I think I talked about it in my SD 3 video if you're curious. There are a lot of other resources on it too!
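A minimal NumPy sketch of the guidance step, where scale plays the role of the amount c above. The linked paper writes it as (1 + w)·cond − w·uncond, which is the same expression with scale = 1 + w. In practice both predictions come from the same diffusion model run with and without the conditioning; here they are made-up arrays.

```python
import numpy as np

def classifier_free_guidance(pred_uncond: np.ndarray, pred_cond: np.ndarray,
                             scale: float) -> np.ndarray:
    """Push the conditional prediction away from the null (unconditional) one.

    scale = 0 -> unconditional, scale = 1 -> plain conditional, scale > 1 -> extra emphasis.
    """
    return pred_uncond + scale * (pred_cond - pred_uncond)

uncond = np.array([0.0, 1.0])  # prediction with the class/text dropped (null condition)
cond = np.array([1.0, 2.0])    # prediction given the class/text
guided = classifier_free_guidance(uncond, cond, scale=3.0)
```

Here guided is [3.0, 4.0]: the difference between the conditional and null predictions is amplified three times.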

Your videos are incredible, so meticulous and packed with clear and well thought out explanations. Keep up the great work!

What a detailed and insightful video about masked diffusion language models! Thank you so much for your excellent work!

Why can you directly change x_{t-1} to x_{t+1} at 29:21?

It depends on whether you take a forward step or an inversion step in the opposite direction using the formulas above. Both start from x_t, though.

Great video. We revert to BERT.

I completely missed this video and paper. It's absolutely remarkable how two papers came up with the same analysis and conclusions at the same time! There's another paper submitted to ICLR (this one was also submitted to ICLR) which presents a similar analysis for diffusion transformers, but shows that it also holds for LLMs (in the appendix). Also, both papers suggest using partial-head RoPE (p-RoPE), but this isn't a new concept, since it was used by EleutherAI's GPT-J 6B model (2021).
Regarding your speculations:
- Yes, this does appear to be the reason why LLMs fail to extrapolate. What effectively happens is that the model learns an implicit cutoff channel r_eff during training, above which it stuffs semantic information (since the low-frequency channels are essentially constant). When you extrapolate, you push r_eff to a higher value by engaging those frequencies, thereby disrupting the semantic processing. The other ICLR paper showed that if you compare the complex norms for a base model and a model trained to longer context windows, you see progressively larger deviations in the finetune as the RoPE frequency decreases (i.e. the model is shuffling information into the lower-frequency channels).
- Yes, fixing the cutoff channel does help with extrapolation, at least in image models. However, this depends on the training sequence length: if you train with r_eff < r_cutoff, the model will perform worse under extrapolation, so you have to set r_eff == r_cutoff. But when you extrapolate, you end up with positional aliasing for N > N_train.
- You don't want to learn the frequencies, because this can cause issues for generalization. If there happens to be a distinct set of spatial patterns in the training set, those frequencies will receive higher importance during optimization. This focus will result in worse performance during inference on OOD data that does not follow the same learned spatial patterns.
I guess the best way to think about it is: the RoPE frequencies form a basis (Fourier basis), and the best basis is one that spans the entire space rather than one which provides increased resolution in areas with more information (since that has to be determined by the training set). Notably, training the frequencies would be similar to training relative position embeddings (in ViTs or NLP), which can suffer from a latching behavior where they get stuck in sub-optimal states.
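To make the channel picture concrete, here is a NumPy sketch of rotary embeddings applied to only the first rot_dim channels of a head, with the remaining channels passed through unrotated. It assumes GPT-J-style interleaved channel pairs with frequencies spread over rot_dim; p-RoPE variants differ in exactly which frequencies they drop, so treat this as one illustrative choice. slow_pairs (a hypothetical helper) counts rotary pairs whose wavelength exceeds the training length, i.e. the low-frequency channels that rotate less than a full turn within the training window.

```python
import numpy as np

def rope_inv_freq(rot_dim: int, base: float = 10000.0) -> np.ndarray:
    # theta_i = base^(-2i / rot_dim), i = 0 .. rot_dim/2 - 1, from fastest to slowest rotation
    return base ** (-np.arange(0, rot_dim, 2) / rot_dim)

def apply_partial_rope(x: np.ndarray, pos: np.ndarray, rot_dim: int,
                       base: float = 10000.0) -> np.ndarray:
    """x: (seq, head_dim), pos: (seq,). Rotate the first rot_dim channels, pass the rest through."""
    x_rot, x_pass = x[:, :rot_dim], x[:, rot_dim:]
    angles = pos[:, None] * rope_inv_freq(rot_dim, base)[None, :]  # (seq, rot_dim // 2)
    cos, sin = np.cos(angles), np.sin(angles)
    x1, x2 = x_rot[:, 0::2], x_rot[:, 1::2]                         # interleaved channel pairs
    rotated = np.empty_like(x_rot)
    rotated[:, 0::2] = x1 * cos - x2 * sin
    rotated[:, 1::2] = x1 * sin + x2 * cos
    return np.concatenate([rotated, x_pass], axis=1)

def slow_pairs(rot_dim: int, train_len: int, base: float = 10000.0) -> int:
    """Rotary pairs whose wavelength 2*pi/theta_i exceeds train_len (less than one full turn
    over the training window; the slowest of these look nearly constant)."""
    wavelengths = 2 * np.pi / rope_inv_freq(rot_dim, base)
    return int(np.sum(wavelengths > train_len))

rng = np.random.default_rng(0)
x = rng.standard_normal((5, 8))                                    # 5 positions, head_dim 8
out = apply_partial_rope(x, np.arange(5, dtype=float), rot_dim=4)  # rotate half the channels
```

The rotation preserves norms and leaves the unrotated channels untouched, and the query-key dot product depends only on the position offset. With rot_dim = 8 and a 100-position window, the wavelengths are roughly 6, 63, 628 and 6283 positions, so two of the four pairs never complete a turn during training.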

Thanks for the further analysis!! I need to check out that ICLR paper. Another paper to add to the to-read pile! As for learning the frequencies, I was more so thinking they could be learned to study which frequencies are preferred. Perhaps the model would bias them in some way. I did a small test and they were all over the place, which reinforces the idea that all frequencies are needed; like you said, it's like a basis spanning the entire space.

I didn't quite understand what the quantization part is achieving. Does it serve only as the tokenization step? Or does it contribute to reducing the picture's resolution somehow?

It just serves as an easy way to tokenize the image, since we want to do autoregression. An easy way to make a model autoregressive on images is to use vector quantization, though it does reduce quality, as you're reducing a continuous signal to a discrete set of tokens. It's probably far from the best way to model an image, though.
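As a rough illustration of that tokenization step, here is a NumPy sketch of nearest-neighbour vector quantization: each continuous embedding is replaced by the id of its closest codebook vector, and those ids are what an autoregressive model would predict. The codebook and embeddings are made up; in practice the codebook is learned together with an encoder, and the gap between z and its quantized version is the quality loss mentioned above.

```python
import numpy as np

def vq_tokenize(z: np.ndarray, codebook: np.ndarray):
    """z: (n, d) continuous embeddings, codebook: (K, d). Returns (token ids, quantized vectors)."""
    d2 = ((z[:, None, :] - codebook[None, :, :]) ** 2).sum(axis=-1)  # (n, K) squared distances
    ids = d2.argmin(axis=1)                                          # nearest code per embedding
    return ids, codebook[ids]

codebook = np.array([[0.0, 0.0], [1.0, 1.0], [5.0, 5.0]])  # toy 3-entry codebook
z = np.array([[0.1, -0.2], [4.0, 4.5], [0.9, 1.2]])         # toy encoder outputs
ids, z_q = vq_tokenize(z, codebook)
quantization_error = float(((z - z_q) ** 2).sum())
```

The three embeddings become the tokens [0, 2, 1]; the nonzero quantization error is the information thrown away by discretizing.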

I think it's ultimately an empirical choice: the model predicts the next discrete token as opposed to a continuous embedding, so it may benefit from predicting a distribution of token likelihoods rather than being forced to output a single continuous prediction.

amazing man, thanks so much

best

Can I ask what tablet/app you use to produce these videos? Thank you!

I'm using a Samsung tablet and just the default notes app. I tried out a few other note apps, but this one is my favorite since it's free and has all the features I need. For editing audio I use Audacity, and for small video edits I use Shotcut.

Thanks. These are quality videos. The more you go into the details and math behind the main algorithm, the better.

I tried running this, but nothing happens when I run jupyter lab. It gives me the "not a command" message.

Really appreciate you explaining such papers, please keep them coming <3

Diffusion forcing? Please

8:00 "It's just MLP": yeah, I felt bamboozled. The MLP was originally upscale, ReLU, downscale; later ReLU got replaced by smooth, gradient-friendly curves and an additional multiplication was added (e.g. GeGLU instead of GELU). During the initial explanation it felt like the up projection and down projection just got renamed to K and V. It doesn't feel like cross-attention at all, as their K and V don't depend on any part of the input.
23:01 It may be because they moved lots of params around: finetuning MLP layers works so much worse than finetuning QKVO. By having an MLP inside, they have more params playing a role in the multi-head attention, which is still used. It might also be the training. Their results on image classification feel more revealing than the NLP tasks: the dataset is far more fixed than "pick your favorite part of the Pile, filter out the bad parts", the models are in the same weight class, and there are no differing tokenizers (they use the same one as Pythia, so comparing perplexity against it doesn't raise eyebrows).
In an ideal world they would also train at least Pythia 160M from scratch on the same training data, but considering it's "up to 300B tokens", it's not exactly surprising they didn't. Also, in an ideal world they would include an ablation study: "hey, let's not add non-linearity to the Q, K, V projections, and instead of new weights for 3 P-attentions, let's simply make the head dimension bigger, keeping around the same param count."
Also, Pythia has an outdated MLP architecture: it uses GeLU, not GeGLU. P-attention doesn't use GeGLU either, but their architecture replaces the MLP, which has already been replaced in modern LLMs.
Speaking of their results on images: their winning model in Table 2 (image classification) has more parameters than ViT. If it's excluded, their small 86M model loses to another 86M model, though their 307M model still wins.
(Also, I remember trying a somewhat similar but more naive idea on the IMDB dataset: replace the Q, K, V projections with self-attentions. The idea was to walk toward super-hierarchical nested attention: if you replace one Q projection with attention, you can go deeper and replace the projection inside the nested self-attention of Q with another attention, and once this inception is done, the initial layer knows very well which other tokens to attend to. It worked awfully. Results were worse, and the number of params went through the roof (as a single projection got replaced with several; though I wouldn't be surprised if better hyperparameters could make it merely bad rather than awful).)
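The "up/down projection renamed to K and V" point can be checked with a small NumPy sketch. It assumes a plain GELU on the token-parameter scores; Tokenformer's Pattention additionally normalizes the scores before its GELU-based activation, which this sketch deliberately leaves out. Under that simplification, attending to learnable key/value parameter tokens is literally an MLP with W_up = key_params.T and W_down = value_params (both names are hypothetical).

```python
import numpy as np

def gelu(x: np.ndarray) -> np.ndarray:
    # tanh approximation of GELU
    return 0.5 * x * (1.0 + np.tanh(np.sqrt(2.0 / np.pi) * (x + 0.044715 * x ** 3)))

def pattention_simplified(x, key_params, value_params):
    """Input tokens (queries) attend to learnable parameter tokens; K and V ignore x."""
    scores = x @ key_params.T            # (n_tokens, n_param_tokens)
    return gelu(scores) @ value_params   # (n_tokens, d_out)

def mlp(x, w_up, w_down):
    return gelu(x @ w_up) @ w_down

rng = np.random.default_rng(0)
x = rng.standard_normal((4, 16))              # 4 input tokens, width 16
key_params = rng.standard_normal((64, 16))    # 64 parameter tokens
value_params = rng.standard_normal((64, 16))
same = np.allclose(pattention_simplified(x, key_params, value_params),
                   mlp(x, key_params.T, value_params))
```

Here same is True: without the score normalization, the number of parameter tokens is just the MLP hidden width.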

Oh, they actually did train a Transformer from scratch: "Table 6 compares Transformer models trained from scratch and Tokenformer trained by parameter reusing with varying amounts of seen tokens during training. It is evident that Transformers trained with the same number of seen tokens do not reach the performance level of Tokenformer with parameter reusing" Even if a modern MLP would improve the Transformer results, the training cost still seems much better, which is really impressive.
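The parameter-reusing idea can be sketched in NumPy too. This assumes, as I understand the Tokenformer paper, that newly added key/value parameter tokens start at zero, and it uses a simplified plain-GELU scoring (Tokenformer's own score normalization is omitted). grow_param_tokens is a hypothetical helper.

```python
import numpy as np

def gelu(x):
    # tanh approximation of GELU; note gelu(0) == 0
    return 0.5 * x * (1.0 + np.tanh(np.sqrt(2.0 / np.pi) * (x + 0.044715 * x ** 3)))

def pattention_simplified(x, key_params, value_params):
    return gelu(x @ key_params.T) @ value_params

def grow_param_tokens(key_params, value_params, n_new):
    """Hypothetical helper: append n_new zero-initialized key/value parameter tokens."""
    new_k = np.zeros((n_new, key_params.shape[1]))
    new_v = np.zeros((n_new, value_params.shape[1]))
    return np.vstack([key_params, new_k]), np.vstack([value_params, new_v])

rng = np.random.default_rng(1)
x = rng.standard_normal((4, 16))
k_small, v_small = rng.standard_normal((64, 16)), rng.standard_normal((64, 16))
k_big, v_big = grow_param_tokens(k_small, v_small, n_new=32)
before = pattention_simplified(x, k_small, v_small)
after = pattention_simplified(x, k_big, v_big)
```

The zero keys give zero scores, GELU maps those to zero, and the zero values add nothing, so the grown layer starts out computing the same function as the small one; training then continues from there instead of from scratch.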

I think most of the results only look impressive because they slightly scale the model above the baseline. From the experiments I've done, it seems like the activation they develop is worse than normal GeLU and moving params from the MLP into the Q K V O projections performs the same given the same # of params.