L19.5.2.5 GPT-v3: Language Models are Few-Shot Learners

- Published: Sep 11, 2024

- Sebastian's books: sebastianrasch...

Slides: sebastianrasch...

-------

This video is part of my Introduction to Deep Learning course.

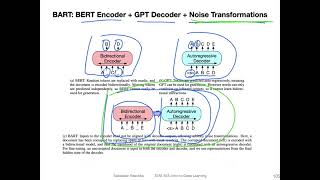

Next video: • L19.5.2.6 BART: Combi...

The complete playlist: • Intro to Deep Learning...

A handy overview page with links to the materials: sebastianrasch...

-------

If you want to be notified about future videos, please consider subscribing to my channel: / sebastianraschka

This series has been super informative to get a semi-deep dive into the concepts of different transformer and NLP models. Super helpful!

Hi. Thank you for uploading the video.

I have a question. During GPT's zero-shot, one-shot, or few-shot "fine-tuning", does GPT perform gradient updates?

If not, does that mean this "fine-tuning" doesn't learn anything and is only used to test the model's performance?

Good question. I think you are referring to the few-shot examples used during testing/inference? I am 99% sure that GPT-3 does not do any gradient descent updates when it uses the examples in its context.
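To make the reply concrete: in the zero-, one-, and few-shot settings, the demonstrations are written into the prompt text itself, and the model simply continues that text. The sketch below only builds prompt strings in the "English => French" style used as an illustration in the GPT-3 paper; `build_prompt` is a hypothetical helper, not part of any library, and no model or weights are involved.

```python
def build_prompt(task_description, examples, query):
    """Join a task description, k demonstrations, and the query into one prompt string.

    k = len(examples): 0 -> zero-shot, 1 -> one-shot, more -> few-shot.
    The demonstrations are plain input text; nothing here updates any weights.
    """
    lines = [task_description]
    for source, target in examples:
        lines.append(f"{source} => {target}")
    # The model would be asked to continue the text after the final "=>".
    lines.append(f"{query} =>")
    return "\n".join(lines)


demos = [("sea otter", "loutre de mer"), ("peppermint", "menthe poivrée")]

zero_shot = build_prompt("Translate English to French:", [], "cheese")
few_shot = build_prompt("Translate English to French:", demos, "cheese")
print(zero_shot)
print("---")
print(few_shot)
```

The only difference between the settings is how much text precedes the query; the same frozen model receives a longer input in the few-shot case.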

good

Thanks for the great video! I'm struggling to understand how 0-shot, 1-shot, or few-shot "training" (or is it just testing?) can give the model better predictions in the few-shot case than in the 0-shot case if the weights are not updated. The only thing I can think of is somehow combining a backpropagated gradient with every new shot. Otherwise, how would 0-shot and few-shot results even be comparable? Wouldn't everything just be 0-shot?
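One way to see how demonstrations can matter without weight updates: the examples are part of the model's input, so a function with frozen parameters can still produce different outputs for different contexts. The toy below is a deliberately tiny, hypothetical stand-in (not GPT-3's actual mechanism): a single softmax-attention step from a query to the in-context examples, with fixed projections `W_q` and `W_k` and an assumed built-in "default" slot that the query can always attend to.

```python
import numpy as np

# Toy "model" parameters (hypothetical). They are set once and never modified below.
W_q = 5.0 * np.eye(2)  # query projection; the scale sharpens the attention
W_k = np.eye(2)        # key projection
PRIOR_SCORE, PRIOR_VALUE = 0.0, 0.5  # assumed default slot used when context is uninformative


def frozen_predict(context_x, context_y, query):
    """Attend from `query` to the in-context examples and average their labels.

    context_x: (k, 2) array of demonstration inputs (k may be 0)
    context_y: (k,) array of demonstration labels
    """
    q = W_q @ query
    keys = context_x.reshape(-1, 2) @ W_k.T
    scores = np.concatenate(([PRIOR_SCORE], keys @ q))
    values = np.concatenate(([PRIOR_VALUE], context_y))
    weights = np.exp(scores - scores.max())  # numerically stable softmax
    weights /= weights.sum()
    return float(weights @ values)


query = np.array([1.0, 0.0])
W_q_before, W_k_before = W_q.copy(), W_k.copy()

zero_shot = frozen_predict(np.empty((0, 2)), np.empty(0), query)
demos_x = np.array([[1.0, 0.0], [0.0, 1.0]])
few_shot_a = frozen_predict(demos_x, np.array([1.0, 0.0]), query)
few_shot_b = frozen_predict(demos_x, np.array([0.0, 1.0]), query)  # same inputs, flipped labels

print(zero_shot, few_shot_a, few_shot_b)  # 0.5, then about 0.99, then about 0.01
assert np.array_equal(W_q, W_q_before) and np.array_equal(W_k, W_k_before)
```

The parameters are identical across all three calls; only the demonstrations in the input change, and the prediction follows them. In a real transformer, the prompt tokens influence the next-token prediction through attention in a broadly similar input-dependent way, although what a large model actually computes from the demonstrations is far more complex than this toy.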