Lecture 6 | Convergence, Loss Surfaces, and Optimization

HTML-код

- Опубликовано: 1 авг 2024

- Carnegie Mellon University

Course: 11-785, Intro to Deep Learning

Offering: Fall 2019

For more information, please visit: deeplearning.cs.cmu.edu/

Contents:

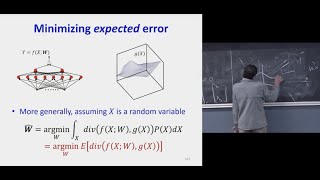

• Convergence in neural networks

• Rates of convergence

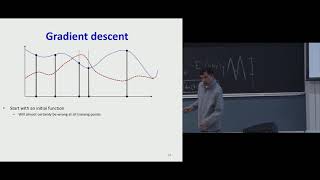

• Loss surfaces

• Learning rates, and optimization methods

• RMSProp, Adagrad, Momentum  Кино

Кино

If you are seeking for deep learning lectures from someone who truly understands the contents and makes connecting dots as well, here you go: Professor Raj is the best I've ever seen in deep learning. He is explaining the whole things with simple languages (very deep intuition).

Looks like this professor has become my hero for deep learning course. I started understanding his classes better than any one else's.

Amazing explanation.

The first video u click, feeling encouraged and one of the first thing you hear from the Professor is the decline of student attending the class and the sinking feeling of hope, that it would be a miracle if there would be 1 student by the end of this course. Lol.

Still excellent content.

ruclips.net/video/XPCgGT9BlrQ/видео.html 💐.

Kids run away 😂😂😂😂

That's what happens after neural networks

Back proporgation

please give and keep me updated

qed hahahaha

ruclips.net/video/XPCgGT9BlrQ/видео.html 💐.

this video didn't give theoretical guarantees for stochastic gradient descent