Best Open Alternative to OpenAI's Embeddings for Retrieval QA: LangChain


- Published: 15 Jul 2024

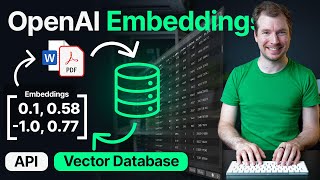

- In this video tutorial, we will explore the use of InstructorEmbeddings as a potential replacement for OpenAI's Embeddings for information retrieval using LangChain. This is the first step toward running an open-source chatbot on your own data. The results will surprise you!

LINKS:

Google Notebook: colab.research.google.com/dri...

Instructor Embeddings: huggingface.co/hkunlp

Videos to Watch: • CHATGPT For WEBSITES: ...

LangChain Crash Course: • LangChain Crash Course...

-------------------------------------------------

☕ Buy me a Coffee: ko-fi.com/promptengineering

Join the Patreon: patreon.com/PromptEngineering

-------------------------------------------------

All Interesting Videos:

Everything LangChain: • LangChain

Everything LLM: • Large Language Models

Everything Midjourney: • MidJourney Tutorials

AI Image Generation: • AI Image Generation Tu...

Want to connect?

💼Consulting: calendly.com/engineerprompt/consulting-call

🦾 Discord: discord.com/invite/t4eYQRUcXB


▶ Subscribe: www.youtube.com/@engineerprompt?sub_confirmation=1

What chart software are you using?

Finally the video everyone needed

Your videos are amazing and informative and you are providing so many tools and bits of knowledge to the community. Thank you for being dedicated to open source

Thank you very much for this video. Eagerly waiting for the next one.

Amazing video. Thank you so much for being clear, detailed, and holistic.

This is amazing! A completely free open source knowledge engine would be a fantastic goal!

Really happy with this! I keep asking myself "What is the best alternative to OpenAI?"

You are amazing, this is beyond helpful. ty

Looking forward to your next video (I want to use this with WizardLM-13B or MPT-7B locally, but I'm not able to do that myself :)). Thank you for your guides.

Thanks for your effort.

Waiting for the next video.

Good explanation

You are awesome, like Batman.

Good one, man! When's the next video coming out? Eager to know which model you have chosen to use with this open embedding.

Awesome video :)

You and your channel are awesome. I want to make just one comment about the videos: please check why the volume is so low. Maybe the microphone is too far from your face? Everything else is just next level.

Excellent video! Try MPT-7B in your next video for open sourced LLMs!

Can it be used with LangChain?

I'm building a website, and having an alternative is a game changer.

@Prompt Engineering great video, thanks! Can these embeddings be upserted to Pinecone? What dimension needs to be used? Thanks!

Thank you for the video.

Gentle nudge, ask an AI "How to set up a compressor to get good even spoken audio at a stable level without having background noise rise during silence" .-)

Hi, thanks for this! I want to know how to return the output response together with the source file names when using OpenAI with LangChain's conversational retrieval Q&A.
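A minimal sketch of one common approach, assuming LangChain's conventions: when a chain is built with `return_source_documents=True`, its result includes a `source_documents` list, and file loaders such as `PyPDFLoader` record the file path under `metadata["source"]`. The `Doc` class is a hypothetical stand-in for LangChain's `Document` (which has `page_content` and `metadata`), and the result dict is made up. `RetrievalQA` puts the answer under `result`, while `ConversationalRetrievalChain` uses `answer`, so the key is a parameter here (worth double-checking against your LangChain version).

```python
from dataclasses import dataclass, field


@dataclass
class Doc:
    """Hypothetical stand-in for LangChain's Document: text plus a metadata dict."""
    page_content: str
    metadata: dict = field(default_factory=dict)


def answer_with_sources(result: dict, answer_key: str = "result") -> str:
    """Append the unique source file names (in first-seen order) to the answer text."""
    sources = []
    for doc in result["source_documents"]:
        name = doc.metadata.get("source", "unknown")
        if name not in sources:
            sources.append(name)
    return f"{result[answer_key]}\n\nSources: {', '.join(sources)}"


# Made-up output, shaped like a chain called with return_source_documents=True
result = {
    "result": "The warranty lasts two years.",
    "source_documents": [
        Doc("...", {"source": "docs/warranty.pdf", "page": 3}),
        Doc("...", {"source": "docs/warranty.pdf", "page": 4}),
        Doc("...", {"source": "docs/faq.pdf", "page": 0}),
    ],
}
print(answer_with_sources(result))
```

Deduplicating keeps the list short when several chunks come from different pages of the same PDF.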

Hi, thank you for your tutorials. I have been following them for quite some time now. However, I can't figure out the most economical approach for my use case.

I want to create a Q&A chatbot on Streamlit that answers questions only from a single custom document of about 500 pages. The document is final and won't change. From my understanding so far, I should choose either LangChain or LlamaIndex. But I will have to use the OpenAI API to get the best answers, and that API is quite costly for me. So far, I have thought of using Chroma for the embeddings and somehow storing the vectors as pkl or JSON on Streamlit itself for reuse, so I don't have to pay again for vectors/indexing. I don't have enough credits to test different methods myself.

Kindly guide me. Thank you.

Good stuff. Hey, I just wonder what diagram software you used? Thanks!

The video content is amazing!👍 I have some feedback on the video display quality, though. It seems the screen/video recording software records at a lower quality, so the text in the video is not very clear. It would be great if you could fix this for future videos. Many thanks for such up-to-date and informative content. 🙏

audio as well... but def subbing!

Did you make a video on using an open-source LLM instead of OpenAI? BTW, great content!

Hey there, thanks for the video! Does this embedding model support different languages (the paper says it's only trained on English data)? Also, where can I find the video where you explain how to use an open-source LLM instead of davinci?

great vid. What is the link to your Excalidraw diagram?

Embeddings are just the beginning. We'll need to start integrating LlamaIndex (could you do a video on that?), which is also "open." It takes vector DB + LangChain and supercharges things.

Yes, looking into LlamaIndex.

What's the difference from embeddings? Quality? Speed? Cost?

@@massibob2004 Embeddings create vector databases that the model uses to quickly find the information you're asking about in a given dataset; the relevant data is then chunked and put into the prompt for the AI. LlamaIndex uses/integrates this, but it also uses other data structures (like added metadata) to help parse and find the information. Essentially, it's an old-school index/catalog mixed with a vector database. This lets you build a "tree" pipeline: working on certain chunks only, breaking those chunks up further, etc. Right now, people generally vectorize and chunk, say, 1,000 tokens' worth of text when only 100 tokens of relevant information are in there, which isn't an efficient use of compute and can also cause missing data.
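To make the retrieval step described above concrete, here is a minimal plain-Python sketch of finding the chunks whose vectors are closest to the query vector and keeping only those as context. The 3-dimensional vectors are made-up stand-ins for real embeddings (real models produce hundreds of dimensions), and `cosine` and `top_k_chunks` are hypothetical helpers, not LangChain or LlamaIndex APIs.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k_chunks(query_vec, chunk_vecs, chunks, k=2):
    """Return the k chunks whose vectors are most similar to the query vector."""
    scored = sorted(zip(chunks, chunk_vecs),
                    key=lambda pair: cosine(query_vec, pair[1]),
                    reverse=True)
    return [chunk for chunk, _ in scored[:k]]


# Toy data: in practice these vectors would come from an embedding model.
chunks = ["refund policy", "shipping times", "office address"]
chunk_vecs = [[0.9, 0.1, 0.0], [0.1, 0.9, 0.1], [0.0, 0.2, 0.9]]
query_vec = [0.8, 0.3, 0.0]  # hypothetical embedding of "How do I get my money back?"

context = top_k_chunks(query_vec, chunk_vecs, chunks, k=2)
print(context)
```

Only the text of the selected chunks then goes into the prompt. A vector store such as FAISS or Chroma performs the same nearest-neighbour search, just far faster on large collections.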

Can you tell us which alternative to OpenAI we can use for information retrieval?

Can the embeddings be used for other languages, like Portuguese?

Thanks for your tutorial. In my case (2 large PDF files, 32 MB and 54 MB), one thing I wanted to highlight is the significant difference in embedding size between Instructor embeddings and OpenAI embeddings (4.6 GB vs 13 MB). Can you please share your thoughts on this?
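A back-of-the-envelope check on those sizes, as a sketch: raw vector storage is roughly (number of chunks) × (dimensions) × (4 bytes per float32 value). The chunk count below is hypothetical; 1536 is the dimension of OpenAI's `text-embedding-ada-002`, and 768 for the Instructor models is an assumption you can verify with `len(embeddings.embed_query("test"))`.

```python
def raw_vector_bytes(num_chunks: int, dim: int, bytes_per_value: int = 4) -> int:
    """Bytes needed to store num_chunks vectors of length dim (float32 by default)."""
    return num_chunks * dim * bytes_per_value


num_chunks = 10_000  # hypothetical chunk count for two large PDFs
for name, dim in [("text-embedding-ada-002", 1536), ("instructor (768 assumed)", 768)]:
    size_mb = raw_vector_bytes(num_chunks, dim) / 1_000_000
    print(f"{name}: {size_mb:.1f} MB")
```

Under these assumptions the raw vectors come to tens of megabytes either way, and the higher-dimensional OpenAI vectors would be the larger ones. A file in the gigabyte range therefore probably holds more than the vectors themselves; what extra gets serialized depends on how the index was saved, which this sketch does not check.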

@Prompt Engineering, for some reason, when I use google/flan-t5-xll as the LLM in this notebook, it does not print the result. It only prints the sources for the query. Isn't it supposed to print results, as it does for other repos? I am not sure whether you indexed the vectors and also printed the results in the notebook.

Interesting, it could be that the model is not able to generate the response? Will need to look into it further.

What about speed? Are open-source self-hosted models faster, considering I don't have a GPU?

Is this the best open-source embedding available, or are there others that are better? Have you tested any others against this one?

Can you do a Vector DB comparison, FAISS, Redis, Chroma, Pinecone......etc?

luv the channel.

Thank you, will look into it.

What is a good way to deal with token limits on large files?

In place of OpenAI's davinci, which open-source model can we use?

Great video, thank you. What about documents that contain math expressions and definitions? I haven't seen any video about a mathematical corpus. Also, could you tell us whether we can use the embeddings for documents written in Greek?

Thanks. I am not sure if it will be able to process math expressions. Maybe if they are provided in LaTeX or Markdown, you could train a model, but I'm not sure. As for documents in Greek, I am not sure either; I haven't experimented with any other language, and there is nothing in the documentation that I could find.

@@engineerprompt Thanks for your response. Unfortunately, although I have searched a lot on the web, I can't find a test case where someone uses a multilingual model for both the embeddings and the language model. I have noticed, though, that ChatGPT understands prompts in Greek and also generates replies in the same language. Do you perhaps know how it does that? Does it use a translation model on the backend?

I want to use Jina embeddings locally, which support an 8k sequence length. How can I do that? Thanks.

Brother, I think you left your API key in the Colab notebook.

Can you share the wireframe, please?

Is there a way to prompt-engineer it so that it does not hallucinate answers or make up numbers like that $100?

Wow! One question: can we use this code to make a chatbot for Android using Kivy?

@engineerprompt Great video. Does the retriever "rank" the relevant documents, or do we need to add a ranking model?

This one doesn't. You will need to add that as a secondary layer. Look into Cohere reranker or ColBERT.
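The "secondary layer" suggested above can be sketched as a two-stage pipeline: a cheap first pass keeps the top candidates by vector similarity, then a second scorer reorders only that shortlist. Here a simple word-overlap score stands in for a real reranker such as Cohere's or ColBERT; the similarity numbers, `rerank_score`, `retrieve_then_rerank`, `fetch_k` and `final_k` are all hypothetical illustrations.

```python
def rerank_score(query: str, doc: str) -> float:
    """Stand-in reranker: fraction of query words that also appear in the document."""
    q_words = set(query.lower().split())
    d_words = set(doc.lower().split())
    return len(q_words & d_words) / len(q_words)


def retrieve_then_rerank(query, candidates, fetch_k=3, final_k=2):
    """candidates: list of (doc, vector_similarity) pairs from the first-stage retriever."""
    # Stage 1: keep the fetch_k candidates with the highest vector similarity.
    shortlist = sorted(candidates, key=lambda c: c[1], reverse=True)[:fetch_k]
    # Stage 2: reorder the shortlist with the (normally more expensive) reranker.
    reranked = sorted(shortlist, key=lambda c: rerank_score(query, c[0]), reverse=True)
    return [doc for doc, _ in reranked[:final_k]]


candidates = [
    ("the warranty covers parts for two years", 0.81),
    ("warranty claims need the original receipt", 0.78),
    ("our store opens at nine", 0.80),
    ("shipping is free over fifty dollars", 0.40),
]
print(retrieve_then_rerank("how do warranty claims work", candidates))
```

The document ranked third by vector similarity ends up first after reranking, which is the point of the second layer: the expensive scorer only runs on a handful of candidates.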

How can I reference the file name while querying in LangChain?

Is that good for languages other than English?

Anyone know the difference between a semantic index and a vector store?

One quick question: can we use Hugging Face Instructor embeddings with the gpt-3.5-turbo model? My doubt is that the model was trained with OpenAI embeddings, so can we use Hugging Face Instructor embeddings with it? Please clarify.

Please help me out: I have seen some videos that use local embeddings with the gpt-3.5-turbo model. How is that possible? If gpt-3.5-turbo is linked to OpenAI embeddings, how can we use Hugging Face Instructor embeddings with it?

Hello.

I'm getting a directory error: it mentions "faiss_instructEmbeddings.pkl". I don't have this file. Could you please help?

@Prompt Engineering, qa_chain_instructEmbed and qa_chain_openai both use the same llm=OpenAI. You are just comparing the 2 different embeddings with the same LLM (OpenAI).

Yeah, I have the same doubt.

Yes, the goal was to compare the information retrieval part of the pipeline. Wanted to keep the language model the same but based on the type of embeddings, it might get different information (context) to work with. Hope this helps.

ERROR: Could not find a version that satisfies the requirement InstrcutorEmbedding (from versions: none)

Referring to your previous video about URL information retrieval using LangChain: I tried text, CSV, and PDF sources, and I can tell you that the answers are not close to the real ones. More importantly, for PDFs, LangChain only allowed 3 PDFs to be uploaded; it does not accept more than 3. I checked Ghost, and it could not read more than 3 PDFs either. Is there any way to read an unlimited number of long PDF documents with an LLM? Would you mind making a video about Longformer using checkpoints?

I wasn't aware that there is a limit on the number of PDF files you can read in LangChain. Thanks for pointing it out. I will experiment with it and hopefully find a solution.

@@engineerprompt PyPDFLoader did the trick.

Great! Can I use the ChatOpenAI LLM? I'm working on a personal assistant with tools like Zapier and Search. Do you have any advice? Could I also use my LangChain agent with embeddings? I'd like to build just one app/chatbot.

Yes, you can do that.

Can you also do a comparison of embedding generators: OpenAI, OSS, SOTA, etc.?

Good idea, will look into that.

Good morning.

What presentation tool did you use?

I am using excalidraw.com/ for flowcharts.

Please share the link to the video where you mentioned using an open-source LLM.

Check out my localGPT project. It combines everything into a single project.

@Prompt Engineering, you have left your OpenAI API key visible in your Colab notebook. You may want to censor it....

Thanks for pointing that out; somehow I forgot it again.

Can someone link me to the video where he shows how to use an open source LLM instead of openai?

Check out the localGPT project videos on the channel.

@engineerprompt Where is the tutorial on the open-source alternative to OpenAI that you used in this video?

Check out the videos on the localGPT project, where I put everything together.

Is the PDF file restricted to being text-based, or can it read text from image PDFs?

In this case, only text in PDF.

Can we prepare the embeddings on a CUDA machine and then do the inference/QnA on a CPU machine?

That is possible to do
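This works because the stored vectors are just numbers: once computed on the GPU machine, they can be saved and loaded anywhere. A minimal stdlib sketch of that split, assuming a JSON file is an acceptable store; the vectors are made-up placeholders, and `save_index`/`load_index` are hypothetical helpers. With LangChain's FAISS wrapper, `save_local` and `FAISS.load_local` play this role. One caveat: queries still have to be embedded on the CPU machine with the same embedding model (for example loaded with `model_kwargs={"device": "cpu"}`), otherwise query vectors will not be comparable to the stored ones.

```python
import json
import tempfile
from pathlib import Path


def save_index(path: Path, texts: list[str], vectors: list[list[float]]) -> None:
    """Write chunk texts and their precomputed vectors to a JSON file."""
    path.write_text(json.dumps({"texts": texts, "vectors": vectors}))


def load_index(path: Path) -> tuple[list[str], list[list[float]]]:
    """Read texts and vectors back; this step needs no GPU and no embedding model."""
    data = json.loads(path.read_text())
    return data["texts"], data["vectors"]


# On the GPU machine (placeholder vectors stand in for real model output).
texts = ["chunk one", "chunk two"]
vectors = [[0.1, 0.2, 0.3], [0.4, 0.5, 0.6]]
index_file = Path(tempfile.mkdtemp()) / "index.json"
save_index(index_file, texts, vectors)

# On the CPU machine: load the stored vectors and reuse them for search.
loaded_texts, loaded_vectors = load_index(index_file)
print(loaded_texts, len(loaded_vectors[0]))
```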

Do you have a similar concept explained using RAG?

Yes, check out the localGPT videos

I wonder how big of a text can be turned into embeddings without "losing the sense" of what's written in it.

There are some techniques to compensate for that. You can split the text into overlapping chunks, embed them, and index them. You can then record that a given indexed range of embeddings belongs to one whole document, for example, and use all the overlapping chunks to make sense of the whole text as well as of its individual pieces.
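A minimal sketch of the overlapping-chunk idea, splitting on words rather than tokens for simplicity (an assumption; real pipelines usually count characters or tokens, e.g. LangChain's text splitters with a `chunk_overlap` setting). Each chunk records its document id and position so neighbouring pieces can be traced back to the whole document. The function name and the metadata keys are hypothetical.

```python
def chunk_with_overlap(text: str, doc_id: str, size: int = 8, overlap: int = 3) -> list[dict]:
    """Split text into windows of `size` words, each sharing `overlap` words with the previous one."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be at least 0 and smaller than size")
    words = text.split()
    step = size - overlap
    chunks = []
    for i, start in enumerate(range(0, len(words), step)):
        window = words[start:start + size]
        chunks.append({
            "doc_id": doc_id,       # which whole document this piece belongs to
            "chunk_index": i,       # position, so neighbours can be stitched back together
            "text": " ".join(window),
        })
        if start + size >= len(words):
            break  # this window already reaches the end of the text
    return chunks


text = " ".join(f"w{i}" for i in range(20))
chunks = chunk_with_overlap(text, doc_id="manual.pdf")
for c in chunks:
    print(c["chunk_index"], c["text"])
```

With 20 words, a size of 8 and an overlap of 3, each window starts 5 words after the previous one, so a sentence cut at one chunk boundary still appears intact in the neighbouring chunk.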

How can we restrict the model to answering only from the data we have stored in the database, so that if someone asks a question not covered by our PDF files, it simply answers "I don't know" or something similar?

Can you please guide me here?

You can do that with a PromptTemplate, where you provide a system prompt that instructs the model not to answer from its own knowledge. I would recommend watching this video on how to write the prompt:

ruclips.net/video/j_WIl9lZtJU/видео.html

and for promptTemplates, watch this video: ruclips.net/video/5-fc4Tlgmro/видео.html

@@engineerprompt Thanks for your response,

I watched both videos and understood them.

But I'm unsure how to use prompts in RetrievalQA to stop the model from giving answers outside the ChromaDB data.

Here is a sample of my code:

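```
# Imports assume the LangChain 0.0.x-era API and auto-gptq.
# `texts` (the split documents), `tokenizer` and `generation_config` are defined
# elsewhere in the full script and are not shown in this sample.
from auto_gptq import AutoGPTQForCausalLM
from langchain.chains import RetrievalQA
from langchain.embeddings import HuggingFaceInstructEmbeddings
from langchain.llms import HuggingFacePipeline
from langchain.vectorstores import Chroma
from transformers import pipeline

# Instructor embeddings, computed on the GPU
embeddings = HuggingFaceInstructEmbeddings(model_name="hkunlp/instructor-large",
                                           model_kwargs={"device": "cuda"})

# 4-bit GPTQ build of WizardLM-7B
model = AutoGPTQForCausalLM.from_quantized(
    "TheBloke/WizardLM-7B-uncensored-GPTQ",
    model_basename="WizardLM-7B-uncensored-GPTQ-4bit-128g.compat.no-act-order",
    use_safetensors=True,
    trust_remote_code=True,
    device="cuda:0",
    use_triton=False,
    quantize_config=None,
)

# Wrap the model in a transformers text-generation pipeline, then in LangChain
pipe = pipeline(
    "text-generation",
    model=model,
    tokenizer=tokenizer,
    max_length=2048,
    temperature=0,
    top_p=0.95,
    repetition_penalty=1.15,
    generation_config=generation_config,
)
local_llm = HuggingFacePipeline(pipeline=pipe)

# Build a Chroma vector store from the chunks and persist it to ./db
persist_directory = "db"
vectordb = Chroma.from_documents(documents=texts,
                                 embedding=embeddings,
                                 persist_directory=persist_directory)
retriever = vectordb.as_retriever()

# "stuff" chain: all retrieved chunks go into a single prompt
qa = RetrievalQA.from_chain_type(llm=local_llm, chain_type="stuff",
                                 retriever=retriever, return_source_documents=True)

query = "query"
llm_response = qa(query)
```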

Can you please guide me on how to use the prompt and how to stop the model from giving answers outside my ChromaDB database?
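One way to apply the PromptTemplate suggestion, sketched in plain Python so it runs on its own: the template tells the model to answer only from the retrieved context and to say "I don't know" otherwise. The wording of `TEMPLATE` and the `build_prompt` helper are hypothetical; the `{context}` and `{question}` placeholders follow the input variables that LangChain's "stuff" QA chain fills in.

```python
# Hypothetical template text; {context} and {question} are filled in per query.
TEMPLATE = """Use only the following context to answer the question.
If the answer is not in the context, reply exactly: "I don't know."
Do not use any outside knowledge.

Context:
{context}

Question: {question}
Answer:"""


def build_prompt(retrieved_chunks: list[str], question: str) -> str:
    """Fill the template the way a "stuff" chain does: all retrieved chunks in one context block."""
    return TEMPLATE.format(context="\n\n".join(retrieved_chunks), question=question)


prompt = build_prompt(["Refunds are accepted within 30 days."], "What is the refund window?")
print(prompt)
```

In LangChain itself, the same string can be wrapped as `PromptTemplate(template=TEMPLATE, input_variables=["context", "question"])` and passed to `RetrievalQA.from_chain_type(..., chain_type="stuff", chain_type_kwargs={"prompt": prompt})`. An instruction like this reduces answers from outside the documents but does not guarantee it; smaller local models in particular may still ignore it.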

Thanks for the tutorial, man. Can we make the PDF search app without an OpenAI API key?

@Prompt Engineering

Yes, check out the videos on my localGPT project.

@@engineerprompt will do thanks!

@@engineerprompt In your localGPT project you are not comparing embedding data, just comparing text to text. Is there any open-source model we can use in `RetrievalQA.from_chain_type(llm=OpenAI)` instead of OpenAI?

What tool do you use for your presentation?

It's called excalidraw.com

Could you share the flow diagram too? 😊

😊

@@engineerprompt hi :) could you please share the diagram? :)

Hi

Can I set the folder path where the embedding model is downloaded? Where does this code download it?

```
from langchain.embeddings import HuggingFaceInstructEmbeddings

embeddings = HuggingFaceInstructEmbeddings(model_name="hkunlp/instructor-xl",
                                           model_kwargs={"device": "cpu"})
```

I haven't tested this with the embedding model but the approach proposed here might work:

huggingface.co/docs/hub/models-downloading

First download the model to a custom path, then provide that path as the model_name.

Very instructive video, thank you!

When you define `qa_chain_instrucEmbed`, how can we avoid using OpenAI? (To be more specific: how can we avoid paying?)

Is there a way to use a local model with acceptable answers?

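I tried this in Google Colab:

```
from langchain.chains import RetrievalQA
from langchain.llms import LlamaCpp

# root_dir and retriever are defined earlier in the notebook
llm = LlamaCpp(model_path=f"{root_dir}/llama-7b.ggmlv3.q2_K.bin", verbose=True, n_ctx=1024)

qa_chain_instrucEmbed = RetrievalQA.from_chain_type(llm=llm,
                                                    chain_type="stuff",
                                                    retriever=retriever,
                                                    return_source_documents=True)
```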

but the answer takes minutes to appear and is not at all relevant.

I would recommend watching my localGPT video. It shows how to use other LLMs.

You want to look for a better model (Vicuna-7B is a good option).

@@engineerprompt Thank you very much. 🙏

I am getting a Hugging Face "URL not found" error. Any idea why?

Can you provide more details?

InstructEmbedding is only 5 GB, nice to know.

```
from langchain.chains import RetrievalQA
from langchain.llms import OpenAI

qa_chain_instrucEmbed = RetrievalQA.from_chain_type(llm=OpenAI(temperature=0.2),
                                                    chain_type="stuff",
                                                    retriever=retriever,
                                                    return_source_documents=True)
```

What other open-source model can be used instead of OpenAI in this code? Any ideas?

Check this out: ruclips.net/video/Xxxuw4_iCzw/видео.html

@@engineerprompt I have gone through that, but that video is about the HuggingFace pipeline. My only doubt is that in the code you wrote for qa_chain_instructEmbed (HuggingFace embeddings), you're again using OpenAI as the LLM. Can we run the code without OpenAI?

@@engineerprompt Hello, can you just tell us what code to use instead of OpenAI in the code above?

Did you get your answer?

@@nithinreddy5760

Damn, man... these results are still very humbling. We need to find better ways to create the chunks (i.e., keep sentences and even paragraphs together) before creating embeddings from them.
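One way to keep paragraphs together, sketched under the assumption that paragraphs are separated by blank lines: pack whole paragraphs into chunks up to a size limit instead of cutting at a fixed character count. `pack_paragraphs` and the 200-character limit are hypothetical; LangChain's `RecursiveCharacterTextSplitter` follows a related idea by trying paragraph breaks before smaller separators.

```python
def pack_paragraphs(text: str, max_chars: int = 200) -> list[str]:
    """Group whole paragraphs into chunks of at most max_chars characters.

    Paragraphs are never split; a single paragraph longer than max_chars
    becomes its own (oversized) chunk.
    """
    paragraphs = [p.strip() for p in text.split("\n\n") if p.strip()]
    chunks, current = [], ""
    for para in paragraphs:
        candidate = f"{current}\n\n{para}" if current else para
        if len(candidate) <= max_chars:
            current = candidate          # paragraph still fits in the current chunk
        else:
            if current:
                chunks.append(current)   # close the chunk before it overflows
            current = para               # start a new chunk with this paragraph
    if current:
        chunks.append(current)
    return chunks


doc = "A" * 50 + "\n\n" + "B" * 50 + "\n\n" + "C" * 150
for chunk in pack_paragraphs(doc):
    print(len(chunk))
```

The trade-off is uneven chunk sizes, which is usually acceptable when the goal is to avoid cutting a sentence or paragraph in half before embedding it.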

But OpenAI embeddings are now so cheap: just $1 for thousands of documents that previously would have cost $100, and once generated, the embeddings can be persisted locally. So in this case, is it still worthwhile to use Instructor etc.?

Cost is just one factor to consider. Others would be data privacy, security, and the freedom of not being tied to a vendor.

@@engineerprompt Yes, I also tried the instructor-xl embedding and it's better than OpenAI's, so now I see why they made theirs so cheap. It's still not worthwhile when I can run a better model locally for free.

@@engineerprompt On data privacy, OpenAI now has a zero data retention policy, at least for embeddings; as a corporate user, that would need to be applied. But yeah, freedom is the best part. Similarly, which open-source model can I use for the LLM?

Seems like we all think alike.

What does "open embeddings" mean? If I understood correctly, an embedding is just a vector.

You misread it: it's not "open embeddings", it's OpenAI's embeddings.

Embeddings are basically the conversion of the text to a vector representation. These vectors encode semantic (meaning) and syntactic relationships between the words. This is what the model directly uses to create responses using them as context.

@@dimitriosvlamis8696 Thanks, that's what I understood, but what is meant by an embedding technology (open embedding or OpenAI embedding)? What are they used for?

@@brucewayne2480 I am not sure what you refer to as open embedding, but the topic of the video is to show you an alternative way to make embeddings. Usually, if you want to use the GPT-3 model, you make the embeddings with an OpenAI model: you basically convert the PDF to text, send all of it to OpenAI, and they send you back the vector representation of that text. This can be an issue if you are dealing with sensitive data that you do not want OpenAI to collect. For that reason, there are ways to make embeddings locally, or with HuggingFace embeddings, and still use them with GPT-3/4 etc. OpenAI won't have access to the whole document, only to the parts relevant to the answers it gives, since you do a semantic search and provide the model with very specific context related to your question. Keep in mind that you cannot generate embeddings with just any model and use them with OpenAI models.

He means open-source embedding. He's comparing free versions to OpenAI's API version and finding it to actually work well.

But can OpenAI understand alternative embeddings?

It's not being fed the embeddings, but the associated documents.

Please, get a cheap lavalier microphone. Your video quality will shoot up instantly and a lot of us will hear you better :)

Discord Pleaseeeee 🙏🙏🙏🙏🙏🙏

Hey Mr., if you did not use an alternative to OpenAI, why did you write that in the title? Totally misleading!

Stop moving your mouse all the time!

`WARNING:langchain.embeddings.openai:Retrying langchain.embeddings.openai.embed_with_retry.<locals>._embed_with_retry in 4.0 seconds as it raised RateLimitError: You exceeded your current quota, please check your plan and billing details..`

I'm getting this error.

Did you find any solution to this?