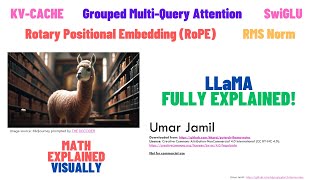

Coding LLaMA 2 from scratch in PyTorch - KV Cache, Grouped Query Attention, Rotary PE, RMSNorm

- Published: Jul 31, 2024

- Full coding of LLaMA 2 from scratch, with full explanation, including Rotary Positional Embedding, RMS Normalization, Multi-Query Attention, KV Cache, Grouped Query Attention (GQA), the SwiGLU Activation function and more!

I explain the most used inference methods: Greedy, Beam Search, Temperature Scaling, Random Sampling, Top K, Top P
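As a rough illustration of how these strategies relate, here is a minimal pure-Python sketch of greedy selection, temperature scaling, Top K and Top P over a list of logits. It is not the code from the video or the repository; the function names (`softmax`, `greedy`, `top_k_filter`, `top_p_filter`, `sample`) are hypothetical, and Beam Search is left out for brevity.

```python
import math
import random


def softmax(logits, temperature=1.0):
    """Turn logits into probabilities; temperature < 1 sharpens, > 1 flattens."""
    scaled = [value / temperature for value in logits]
    largest = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(value - largest) for value in scaled]
    total = sum(exps)
    return [value / total for value in exps]


def greedy(logits):
    """Greedy strategy: always pick the most likely token id."""
    return max(range(len(logits)), key=lambda i: logits[i])


def top_k_filter(probs, k):
    """Top K: keep the k most likely tokens and renormalize."""
    keep = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    total = sum(probs[i] for i in keep)
    return {i: probs[i] / total for i in keep}


def top_p_filter(probs, p):
    """Top P: keep the smallest set of tokens whose cumulative mass reaches p."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    keep, cumulative = [], 0.0
    for i in order:
        keep.append(i)
        cumulative += probs[i]
        if cumulative >= p:
            break
    total = sum(probs[i] for i in keep)
    return {i: probs[i] / total for i in keep}


def sample(distribution, rng=random):
    """Random sampling from a {token_id: probability} mapping."""
    token_ids = list(distribution)
    weights = [distribution[i] for i in token_ids]
    return rng.choices(token_ids, weights=weights, k=1)[0]
```

A typical use would be `sample(top_p_filter(softmax(logits, temperature=0.7), p=0.9))`: temperature reshapes the distribution first, the filter then restricts the candidate set, and sampling picks one of the survivors.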

I also explain the math behind the Rotary Positional Embedding, with step by step proofs.

Repository with PDF slides: github.com/hkproj/pytorch-llama

Download the weights from: github.com/facebookresearch/l...

Prerequisites:

1) Transformer explained: • Attention is all you n...

2) LLaMA explained: • LLaMA explained: KV-Ca...

Chapters

00:00:00 - Introduction

00:01:20 - LLaMA Architecture

00:03:14 - Embeddings

00:05:22 - Coding the Transformer

00:19:55 - Rotary Positional Embedding

01:03:50 - RMS Normalization

01:11:13 - Encoder Layer

01:16:50 - Self Attention with KV Cache

01:29:12 - Grouped Query Attention

01:34:14 - Coding the Self Attention

02:01:40 - Feed Forward Layer with SwiGLU

02:08:50 - Model weights loading

02:21:26 - Inference strategies

02:25:15 - Greedy Strategy

02:27:28 - Beam Search

02:31:13 - Temperature

02:32:52 - Random Sampling

02:34:27 - Top K

02:37:03 - Top P

02:38:59 - Coding the Inference

Category: Science

would love to see lighterweight llms trained on custom datasets, thanks for the video! this channel is a gold mine.

Very excited for this!!! Weekend is going to be fun!

Thanks for explaining all of these concepts. Keep up the good work 😎

Marked for my next watch. Thanks for producing high quality video for the series. Hope you have fun in China.

Thank you for such a detailed analysis of the architecture and implementation features of the model! You are very good at presenting information!

Very good video. You have a knack for conveying complex content in understandable format. Thank you and keep up the great work

Haven't watched the full video yet but thanks for the promising content. please keep it going.

Would like to see more of the environment set up and the debugging process.

You are a hidden gem, great explanation with theoretical and technical concepts.

Highly recommended for anyone who wants to understand open source LLM inside and out.

No comments.... Need to learn many things... Thank you very much for creating such interesting and helpful content...

I am fortunate - that I found your channel.

Thank you so much for sharing this, it was really well done!

Great content as usual! Thanks

Amazing work Umar.

Incredible explanation!

Thank you so much for sharing!

Great video very educational

Thanks! I learned a lot from your excellent video.

Thank you very much for your efforts

EXCELLENT! I would like to see the same series with LLaVA.

this is hardcore machine learning engineering!

great content !

Thanks for the amazing tutorial! As a student I found it so clear to follow. Just a minor issue to point out, during the illustration of the RoPE, the efficient computing equation for complex frequencies (Eq. 34 in the original paper) should have the third matrix's last two terms $-x_{d}$ and then $x_{d-1}$. The video shows $-x_{d-1}$ and then $x_{d}$, probably just a typo reversing the order of suffixes.
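For reference, the computationally efficient form that this comment refers to can be written as follows. This is reconstructed from the RoFormer paper as I recall it rather than taken from the video's slides, with $m$ the token position and $\theta_i$ the per-pair rotation frequencies:

$$
R^{d}_{\Theta,m}\,x =
\begin{pmatrix} x_1 \\ x_2 \\ x_3 \\ x_4 \\ \vdots \\ x_{d-1} \\ x_d \end{pmatrix}
\otimes
\begin{pmatrix} \cos m\theta_1 \\ \cos m\theta_1 \\ \cos m\theta_2 \\ \cos m\theta_2 \\ \vdots \\ \cos m\theta_{d/2} \\ \cos m\theta_{d/2} \end{pmatrix}
+
\begin{pmatrix} -x_2 \\ x_1 \\ -x_4 \\ x_3 \\ \vdots \\ -x_d \\ x_{d-1} \end{pmatrix}
\otimes
\begin{pmatrix} \sin m\theta_1 \\ \sin m\theta_1 \\ \sin m\theta_2 \\ \sin m\theta_2 \\ \vdots \\ \sin m\theta_{d/2} \\ \sin m\theta_{d/2} \end{pmatrix}
$$

The third vector follows the pattern $(-x_{2i}, x_{2i-1})$ for every pair, so its last two entries are $-x_d$ and $x_{d-1}$, which agrees with the correction suggested in the comment.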

Might you consider creating a Discord guild? I'd love to hang with the people that are watching these videos!

Hi! I am considering it, will let you know with a public post when it's online 🤖🦾

Yep, such great people

Great idea man!!

This is the way!

awesome work boss

Thanks! 谢谢你!

Great Content

Umar bhai, your tutorials on transformer architectures and open-source LLMs are truly remarkable. As a Pakistani, seeing your expertise in deep learning is incredibly inspiring. Have you ever considered creating Urdu versions of your content? It could make your valuable knowledge more accessible to a wider audience. Your contributions are invaluable to the global tech community. Keep up the fantastic work! Huge fan of your work. May ALLAH bless you with health and success!

Wow. Now I got this trick

55:44 "I could have also written the code and not tell you anything but I like to give proof to what I do." Wow, thank you for going that extra mile. We really appreciate it.

oh boy this amazing video

Thank you very much, Umar, for the effort here. One question: will there be any PPO and fine-tuning on top of this in upcoming videos?

great video❤

It's an honor for me to be among the 23,500 viewers who watched this video. Thank you so much, Umar Jamil, for your content.

As always the PDF slides and the source code are available on GitHub: github.com/hkproj/pytorch-llama/

Prerequisites:

1) Transformer explained: ruclips.net/video/bCz4OMemCcA/видео.html

2) LLaMA explained: ruclips.net/video/Mn_9W1nCFLo/видео.html

amazing

Thanks for your lecture, and I have a question. What happens if the start_pos is longer than the query cache size? If this code is not dealing with such a situation, which kind of additional modification do we need?

Hi, I want to fine tune the model. In that case, will it be required to get rid of the k-v caching?

why is the context window size limited? Is it because these models are based on transformers and for a given transformer architecture, long distance semantic relationship detection will be bounded by the number of words/context length ?

Does anyone know how to run the code with CUDA on a 4090 GPU? I get an out-of-memory error.

Can somebody help to explain why when calculating theta, we are not including the -2, e.g., theta = theta ** (-2 * theta_numerator / head_dim)
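One possible explanation, sketched below under an assumption about the video's code: if `theta_numerator` is built from the even numbers 0, 2, 4, ..., `head_dim - 2` and the result is inverted with `1.0 / (...)`, then the factor 2 is already inside the numerator and the minus sign is supplied by the reciprocal. The sketch uses plain Python instead of PyTorch, and both function names are hypothetical.

```python
import math


def thetas_from_formula(head_dim, base=10000.0):
    """Paper-style definition: theta_i = base^(-2(i-1)/d) for i = 1..d/2."""
    return [base ** (-2 * (i - 1) / head_dim) for i in range(1, head_dim // 2 + 1)]


def thetas_from_even_numerators(head_dim, base=10000.0):
    """Code-style definition (assumed): even numerators plus a reciprocal.

    range(0, head_dim, 2) yields 0, 2, 4, ..., which already equals 2(i-1),
    and 1 / base^x is the same as base^(-x).
    """
    numerators = range(0, head_dim, 2)
    return [1.0 / (base ** (n / head_dim)) for n in numerators]
```

Under that assumption, both functions return the same `head_dim / 2` frequencies (up to floating-point rounding), so no explicit `-2` is needed in the exponent.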

Great video

So what about the dataset used in this video?

thank you

I tried loading the model from M1 mac 8GB RAM but it seems that it requires more memory (I am guessing 28GB RAM)
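The 28 GB guess is consistent with a back-of-the-envelope estimate, assuming the 7B variant and 4-byte (float32) weights. The sketch below only counts the weights themselves; the parameter count is the nominal 7 billion, the helper name `weight_memory_gb` is hypothetical, and the KV cache, activations and framework overhead are ignored.

```python
def weight_memory_gb(num_params, bytes_per_param):
    """Approximate memory for the weights alone, in decimal gigabytes."""
    return num_params * bytes_per_param / 1e9


NOMINAL_7B_PARAMS = 7_000_000_000  # assumption: nominal count, not the exact one

fp32_gb = weight_memory_gb(NOMINAL_7B_PARAMS, 4)  # 4 bytes per float32 weight
fp16_gb = weight_memory_gb(NOMINAL_7B_PARAMS, 2)  # 2 bytes per float16/bfloat16 weight
print(f"float32: {fp32_gb:.0f} GB, float16: {fp16_gb:.0f} GB")
```

By this estimate, halving the precision halves the footprint, which still leaves the weights well above 8 GB of RAM.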

Can I use the open-source LLaMA 2 model indefinitely, or can I code along with you and use the model?

Let's say an LLM application has a context window of 4000 words. It also supports chat history, so a user can effectively send more than the allowed number of words in a given prompt and still get answers related to the earlier conversation. How does this work?

Thanks!

Thank you Diego for your support!

Please can we get the training code too?

Thanks

Thank you for your support!

🎉🎉

What are the system requirements to run inference for this model? By the way, it's a great video.

Wouldn't it be 'cur_pos - 1' for start_pos argument (line 81 in inference.py, 2:45:58)?

Agreed.

Do you have a Discord channel?

watch again

where do you apply the causal mask?

and the sliding window attention. Thank you

causal mask is not needed since kv cache is used
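A small sketch of why this holds when each forward pass feeds exactly one new token: the single query can only see keys that are already in the cache, so there is nothing in the future to mask. This is a toy single-head example in plain Python, not the video's code, and the function names are hypothetical. If several prompt tokens were processed in one pass, a causal mask would still be needed among them.

```python
import math


def _softmax(scores):
    largest = max(scores)
    exps = [math.exp(s - largest) for s in scores]  # exp(-inf) evaluates to 0.0
    total = sum(exps)
    return [e / total for e in exps]


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def _weighted_sum(weights, values):
    width = len(values[0])
    return [sum(w * v[c] for w, v in zip(weights, values)) for c in range(width)]


def causal_attention(q, k, v):
    """Full-sequence attention with an explicit causal mask (row t sees keys 0..t)."""
    scale = 1.0 / math.sqrt(len(q[0]))
    outputs = []
    for t, q_t in enumerate(q):
        scores = [
            _dot(q_t, k[j]) * scale if j <= t else -math.inf
            for j in range(len(k))
        ]
        outputs.append(_weighted_sum(_softmax(scores), v))
    return outputs


def cached_step(q_t, k_cache, v_cache):
    """One decoding step: a single query against the cached keys, with no mask."""
    scale = 1.0 / math.sqrt(len(q_t))
    scores = [_dot(q_t, k_j) * scale for k_j in k_cache]
    return _weighted_sum(_softmax(scores), v_cache)
```

Calling `cached_step(q[t], k[:t + 1], v[:t + 1])` for each position `t` reproduces row `t` of `causal_attention(q, k, v)`, which is the equivalence the comment relies on.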

A suggestion for all your videos is to increase the font size or the zoom level. They are kind of unreadable.

Thanks for your feedback! I'll keep that in mind 🤗

Is LLaMA 2 an encoder-only or a decoder-only model?

People call it "decoder-only" because it resembles the Decoder of the Transformer, but it lacks the Cross Attention. Technically it's the Encoder of the Transformer plus a Linear Layer and Softmax. But commonly, people call LLaMA a "decoder-only" model and BERT an "encoder-only" model.

@umarjamilai Thanks a lot for your prompt reply. And amazing video!

please do mistral

We need one more video explaining how to download the weights and run inference, because it is not clear.

Hi! To download the LLaMA weights, you need to request access to it by using the following link: ai.meta.com/resources/models-and-libraries/llama-downloads/

Meta will send you an email with the details on how to download the model.

Great video! One question, though: at ruclips.net/video/oM4VmoabDAI/видео.htmlsi=TBFoV5Kj0lnbNaee&t=4272, why do we need the "gamma" of all ones? I compared the code with and without self.weight, and the outputs are the same.

Oh, forgive my dumb question. For anyone else wondering about it: self.weight is learnable.
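To make that concrete, here is a minimal RMSNorm sketch in plain Python (not the video's PyTorch module). The function name `rms_norm` and the `eps` default are assumptions for illustration. With gamma initialized to all ones the scaling is a no-op, which is why the outputs matched; once gamma holds trained values, they would differ.

```python
import math


def rms_norm(x, weight=None, eps=1e-6):
    """Divide x by its root mean square, then optionally scale by gamma (weight)."""
    rms = math.sqrt(sum(value * value for value in x) / len(x) + eps)
    normalized = [value / rms for value in x]
    if weight is None:
        return normalized
    return [gamma * value for gamma, value in zip(weight, normalized)]


x = [1.0, 2.0, 3.0, 4.0]
without_gamma = rms_norm(x)
with_ones = rms_norm(x, weight=[1.0] * len(x))            # freshly initialized gamma
with_trained = rms_norm(x, weight=[0.5, 1.0, 2.0, 1.0])   # made-up "trained" gamma
```

Here `with_ones` matches `without_gamma`, while `with_trained` rescales each feature by its own gamma.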

Thank you for the wonderful lecture. I am wondering why you use torch.matmul and transpose operations in the video, but torch.einsum in the slides. They are mathematically equivalent, but what about their efficiency? Which one runs faster?

Thanks!

谢谢你!