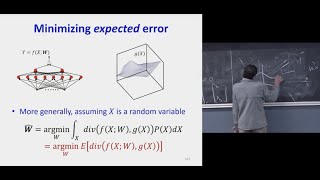

Lecture 2 | The Universal Approximation Theorem


- Published: Aug 29, 2019

- Carnegie Mellon University

Course: 11-785, Intro to Deep Learning

Offering: Fall 2019

For more information, please visit: deeplearning.cs.cmu.edu/

Contents:

• Neural Networks as Universal Approximators

Best lecture on Deep Learning.

Professor, you are so awesome 🙏

Outstanding lecture, thank you!

a great explanation...Thank you so much

Very nice lecture. I feel I understand better why neural networks work.

Couldn't help but think of the 3B1B videos on Hamming codes while watching this.

Agreed! Good Lecture!

very delightful lecture

Thanks

Great Lecture, thank you..

Amazing

really nice systematic lecture!

Such a cool explanation. Can anyone (in particular any student from this course) provide a link to a mathematical explanation behind the content from 35:00 to 45:00? Usually lecturers do provide references to such material. Please do not share the reference papers already listed in this video.

Thank You 😊

A great and very clear lecture. Thank you.

At 15:25, isn't the total input L - N if the first L inputs are 0 and the last N - L inputs are 1?

agree

Think about it this way. Let a = L - N. The threshold to be crossed is then a + 1. Only if you add a positive term to a (by setting any one of the first L inputs to 1) or remove a negative term from a (by setting any one of the last N - L inputs to 0) will the sum reach the decision boundary, and then the neuron fires.
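For anyone who wants to check this by brute force, here is a minimal sketch. It assumes the unit discussed above has weight +1 on the first L inputs, weight -1 on the last N - L inputs, and fires when the weighted sum is at least L - N + 1. The function names `fires` and `check` are made up for this illustration and are not from the lecture.

```python
from itertools import product


def fires(x, L):
    """Threshold unit: weight +1 on the first L inputs, -1 on the rest.

    Assumption: the unit fires when the weighted sum reaches L - N + 1.
    """
    N = len(x)
    total = sum(x[:L]) - sum(x[L:])
    return total >= L - N + 1


def check(N, L):
    """Compare the unit against the Boolean rule on all 2**N binary inputs.

    Rule: fire if any of the first L inputs is 1 or any of the last
    N - L inputs is 0.
    """
    for x in product((0, 1), repeat=N):
        expected = any(x[:L]) or not all(x[L:])
        if fires(x, L) != expected:
            return False
    return True


if __name__ == "__main__":
    print(check(5, 2))
    # The one input pattern that gives a total of exactly L - N:
    print(fires((0, 0, 1, 1, 1), 2))
```

The only input whose total equals L - N is the one with the first L inputs at 0 and the last N - L inputs at 1, which is exactly the case where the unit stays off. Changing any single input raises the total by 1 and reaches the threshold.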

Can anyone explain the inequality at 41:30

and the equation at 42:00?

thanks

ad infinitum ...

Is this an undergrad-level course or a grad-level course?

_"11-785 is a graduate course worth 12 units."_

grad level course

Why are students so unresponsive in a grad level course?

@sansin-dev their brains are frying

This guy is confusing. No good explanations. I have doubts about the two-circle, one-hidden-layer solution. He needs an OR operation as a third layer; otherwise other regions outside of the two circles will also be above the threshold.

This teacher's neck is crooked.