Expectation Maximization: how it works


- Published: 4 Oct 2024

- Full lecture: bit.ly/EM-alg

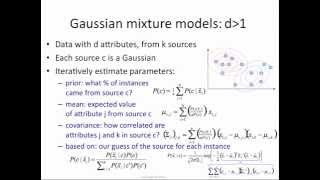

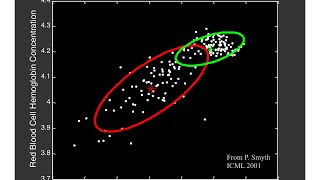

We run through a couple of iterations of the EM algorithm for a mixture model with two univariate Gaussians. We initialise the Gaussian means and variances with random values, then compute the posterior probabilities for each data point, and use the posteriors to re-estimate the means and variances.
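The loop described above can be sketched in a few lines of Python. This is a minimal illustration, not the lecture's own code: the data points, the starting guesses, the function names `gaussian_pdf` and `em_step`, the small variance floor, and the optional prior update are all assumptions added for the example.

```python
import math


def gaussian_pdf(x, mean, var):
    """Density of a univariate Gaussian with the given mean and variance."""
    return math.exp(-((x - mean) ** 2) / (2.0 * var)) / math.sqrt(2.0 * math.pi * var)


def em_step(xs, mean_a, var_a, mean_b, var_b, prior_b=0.5):
    """One EM iteration for a mixture of two univariate Gaussians, a and b."""
    prior_a = 1.0 - prior_b

    # E-step: posterior b_i = P(b | x_i) by Bayes' rule, and a_i = 1 - b_i.
    post_b = []
    for x in xs:
        joint_a = gaussian_pdf(x, mean_a, var_a) * prior_a
        joint_b = gaussian_pdf(x, mean_b, var_b) * prior_b
        post_b.append(joint_b / (joint_a + joint_b))
    post_a = [1.0 - b for b in post_b]

    # M-step: re-estimate each mean and variance as a posterior-weighted average.
    def weighted_mean_var(weights):
        total = sum(weights)
        mean = sum(w * x for w, x in zip(weights, xs)) / total
        var = sum(w * (x - mean) ** 2 for w, x in zip(weights, xs)) / total
        return mean, max(var, 1e-6)  # variance floor: a safeguard added for this sketch

    mean_a, var_a = weighted_mean_var(post_a)
    mean_b, var_b = weighted_mean_var(post_b)
    prior_b = sum(post_b) / len(xs)  # optional prior update (an assumption of this sketch)
    return mean_a, var_a, mean_b, var_b, prior_b


# Made-up data: two loose groups of points on the number line.
xs = [1.0, 1.5, 2.0, 2.5, 8.0, 8.5, 9.0, 9.5]

# Arbitrary starting guesses standing in for the random initialisation.
params = (0.0, 4.0, 5.0, 4.0, 0.5)
for _ in range(50):
    params = em_step(xs, *params)

mean_a, var_a, mean_b, var_b, prior_b = params
print(f"a: mean={mean_a:.2f} var={var_a:.2f}")
print(f"b: mean={mean_b:.2f} var={var_b:.2f} prior={prior_b:.2f}")
```

A fixed number of iterations is used here for simplicity; a practical version would more likely stop once the parameters or the log-likelihood change by less than some tolerance.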

Man, this guy is unbelievable! I wish I had professors like him! Great explanation, thanks!

God, this is so interesting and now makes sense. I wish you were my data-mining lecturer! You should look into getting with khan academy!

You have no idea how good this presentation was. I've searched the web for hours. Nobody could explain this, except your video! Thank you

What an excellent explanation! As soon as I pulled a face trying to figure out what the new mean estimation was doing, you stopped and explained it and realised how unusual it might look at first. So many teachers lack this ability to go beyond what they know and imagine how certain formulas/concepts might need an extra minute or two of explanation for people who haven't seen it before. Subscribed!

Thank you! Very happy you find my videos helpful.

This video has (by far) the highest knowledge/time of any video on this topic on YouTube. Clear explanation of the math and the iterative method, along with analogy to the simpler algorithm (k-means). Thanks Victor!

Very nice explanation. But need to see your pointing..

This explanation is fantastic! I have been studying machine learning courses in my master's but always found it difficult to understand. Now I finally understand EM. Thank you Prof. Lavrenko.

One lecture like this can uncomplicate things so much for so many people around the world. After understanding this in 1D it is so much easier to get a grasp of higher dimensions.

Dear Victor

Your machine learning tutorial videos are really great!

I want professors like you in my college. You're so great sir, thank you so much ❤️ for your great explanation ☺️. Your videos make machine learning easy

You just cleared every doubt on this topic. It's 10 days before my exam, and I'm watching your video and getting everything cleared up

So helpful. Really lucky to have found this goldmine!!

Such a simple explanation and at the same time so comprehensive. This video really helped me understand this algorithm

That was very well explained! It was nice that you often referred and compared to K-means. That made it easy to understand this algorithm! Thank you!

So easily explained with superb clarity.

Splendid. Example very well portrays the algorithm stepwise!

Lucid explanation of EM. Mr Lavrenko is a superb teacher, explaining concepts in understandable language and helping learners make sense of the equations.

Keep up the good work.

Great Explanation Sir. I don't know why it motivated me to appreciate and comment on the video.

Incredible, I read a lot of articles and papers about EM, but this video gave me everything I need to know!

You really mastered the technique of explaining big things to a beginner. It was very helpful. I am definitely going to follow your lectures in future. Thank you so much for the knowledge.

OMG this is such simple and intuitive explanation. THANKS !

Wow, you explain very well, thank you! I was having a hard time understanding my professor's explanation in our class.

You are the best teacher I ever had. Thank you so much.

best explanation I've ever seen

annotations really helped understand better.

Thanx man

I can't thank you enough! You explain it so well that I can now understand what the formulas in the script I have to learn actually mean.

Brilliantly done, hats off.

Awesome!! Obviously you are a teacher who knows the art of teaching! Thank you!

I have tried to find a good explanation of GMM for beginners (me) new to this topic, and your explanation was the best I found. Very clear and good explanation, I'm very thankful sir! Keep up the good work!

Amazing explanation! I was struggling to understand this concept. Thank you so much!

Best explanation i've seen about this subject. Thank you very much, your videos are really awesome !

Amazing! Was stuck with the Udacity Course! Now, it's all clear! :)

You explain this so well! Awesome!!

You are very good at teaching, you make it look easy.

This is a perfect practical example, helped clear my concept about EM algorithm!!

You explain really clearly. Thanks for saving me from struggling with the EM algorithm.

Beautifully explained. Keep it up professor

I wish you were my professor! I keep having to go to your channel after the expensive in-class lectures.

God bless you sir, and your excellent explanations. You are a life saver

Congratulations Sir, magistral EM lecture.

Excellent explanation. Thank you.

Thanks!

great explanation! the animations and the equations on the side, coming at the right time, really helped :)

Thanks a lot, Very well explained,

Thank you!

Best explanation I've seen on the topic!

Please make more!!

This is awesome explanation. Thanks !

This is gold standard

Students that have such a great teacher make me jealous.

what a brilliant explanation

Great explanation mate, thanks!

Amazing explanation!

Probability and likelihood are used interchangeably here. When looking at P(xi | b), given the notation I assume the calculation is indeed a probability. I enjoyed this. I would like to have seen an explanation of stopping at a threshold for convergence.

Excellent! Thanks for the effort to make it easy to understand!

Thanks! Glad to know these videos are useful.

Thank you very much. Excellent Example !!

Thanks!

incredible explanation!

Thanks from Germany! Very very helpful.

May I ask what the value of P(b) is in the E-step? If I have two clusters, does that mean I can use 0.5 as the initial P(b) in the E-step?

great work Mr. lavrenko!

Very good explanation. Thanks a lot!

Thank you for making this so clear and intuitive! Love your lectures

Great explanation and example! Many thanks!

so what are P(a) and P(b) initially?

Wow! the best explanation ever!

Thanks man. Really really great explanations.

Thank you for creating this video -- very helpful!

Thank you so much for posting this explanation!

Thank you very much. This video helps me a lot.

hello, thanks for this video

At the first iteration, what value of P(b) do you use?

Thank you very much for the presentation. I have one stupid question though. During the EM algorithm, when we apply the bayes rule to calculate the posterior p(b|x), how do we know the prior probabilities p(b) and p(a) (which are supposed to be equal)?

I had the same question. Fishing through the comments it seems some have used 0.5 and 0.5 in this two-class example. So I guess our null assumptions would just be that they are equally likely for all classes. Not sure what happens after we go past the initial step. Did you figure out this? Hope this helps!
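A small sketch of the point raised in this exchange, assuming equal priors of 0.5 at the start as the comments suggest. The function name `posterior_b`, the data points, and the Gaussian parameters are made up for illustration; updating the prior as the average posterior is a common choice for later iterations, not something this thread confirms.

```python
import math


def gaussian_pdf(x, mean, var):
    """Density of a univariate Gaussian with the given mean and variance."""
    return math.exp(-((x - mean) ** 2) / (2.0 * var)) / math.sqrt(2.0 * math.pi * var)


def posterior_b(x, mean_a, var_a, mean_b, var_b, prior_b=0.5):
    """P(b | x) by Bayes' rule, with P(a) = 1 - P(b)."""
    joint_a = gaussian_pdf(x, mean_a, var_a) * (1.0 - prior_b)
    joint_b = gaussian_pdf(x, mean_b, var_b) * prior_b
    return joint_b / (joint_a + joint_b)


xs = [1.0, 2.5, 9.0]  # made-up data points

# First iteration: both clusters are assumed equally likely a priori.
posteriors = [posterior_b(x, 0.0, 4.0, 5.0, 4.0) for x in xs]

# Later iterations: one common choice is to set P(b) to the average posterior.
updated_prior_b = sum(posteriors) / len(posteriors)
print(posteriors, updated_prior_b)
```

With equal priors and equal variances, a point halfway between the two means gets a posterior of 0.5; raising `prior_b` pulls the same point towards cluster b.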

Thank you for this, very valuable.

Thank you!

I have a question: at 4:16, the formula for calculating μ_b divides by (b_1 + b_2 + ... + b_n), but my first idea was to divide by the number of data points, i.e. n. So why not divide by n?
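One way to see why the denominator is the sum of the weights is to try the extreme case where every weight b_i is exactly 0 or 1. The numbers below are made up for illustration: three points belong fully to cluster b and one does not.

```python
xs = [1.0, 2.0, 3.0, 10.0]  # made-up data points
b = [1.0, 1.0, 1.0, 0.0]    # hard memberships: only the first three belong to b

weighted_sum = sum(bi * xi for bi, xi in zip(b, xs))

mean_over_weights = weighted_sum / sum(b)  # divides by the (soft) count of b's points
mean_over_n = weighted_sum / len(xs)       # divides by all points, including non-members

print(mean_over_weights, mean_over_n)
```

Dividing by the sum of the weights gives 2.0, the ordinary average of the three points that belong to b. Dividing by n gives 1.5, which is pulled towards zero because the point with weight 0 still counts in the denominator. With fractional weights the same logic applies: the sum of the b_i plays the role of the number of points in the cluster.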

Got a question for anyone who can answer! I follow most of the calculations but I'm not sure how we get p(b) in the second equation (application of Bayes' Theorem). Or, for that matter how we calculate p(a) for the denominator. We can't estimate them from prior class membership samples since we haven't labeled anything. Any idea? Am I misunderstanding it?

Thanks! it is great to finally understand

+1. Thanks a lot .. I finally understood EM. And now it makes complete sense :)

Would you mind citing a resource which proves that the initial choice of Gaussians doesn't make any difference? And a proof that these Gaussians, as they keep shifting from iteration to iteration, always converge? I guess it would be the same as K-means, wouldn't it? Thanks.

Excellent , the only one that i understood :-)

Question: Why does it converge? Does it have something to do with the central limit theorem?

Excellent! Clean notation, very clear explanation.

One question, are the priors the mean of the Gaussians?

Great Explanation ! Thank you !

Amazing, thank you!

Thank you very much. Well explained !!

Thanks for such a good explanation!

Thank you!

I'm trying to fully connect the theoretical pieces that justify the weighted average of mu_a, mu_b, sigma_a, and sigma_b estimates. I.e., where is the E step and the M step and how do they connect to what's written? *Great* explanation. Thank you for uploading.

wonderful explanation ! thanks a lot .

Great explanation, thank you!

Thank you for the amazing video.

When you refer to a point saying 'that' point there I have no visual indication on the screen.

Added the annotations, hope this helps.

Great explanation!!

Great explanation. I have a question though, about the values of xi. Why do we need to multiply those values by the posterior probability? What do the values of x really represent? If they are just points on the line, why do we need to multiply them in the equation for recalculating the means?

Wonderful lecture. But how do we determine the number of Gaussians (clusters) behind the data?

Thanks a lot for this

Thank you so much...This helped me a lot!!! Thanks a lot, again!

at [7:34] instead of mu_1 to mu_n it should be mu_a and mu_b for variance calculation if I am not wrong?

yes. and he mentioned the mistake in the video.

Very clear video. Thank you.

Thanks!

Such a nice video! Thanks a lot!

Thank you for the kind words. Good to know you find it useful.

At 2:04, why is the posterior calculated? And what is the difference between p(x | a), p(x | b) and p(a | x), p(b | x)? Which of these corresponds to the probability that the data point belongs to cluster a / b?

excellent, thank you

Hi Victor, thanks a lot for these interesting videos. Do you post your slides anywhere? I'd love to keep a copy of everything I watch here.

Awesome video !!

The video states that we know the data points come from 2 Gaussians. GMM being an unsupervised model, would we have that kind of information (# of Gaussians) beforehand?

It sounded as if you said: "we will discover the Gaussians automagically" :)

Very comprehensible explanation of EM. But what I don't get is what all these probabilities look like if k = 3.

thank you so much

Well done!