Lesson 2: Byte Pair Encoding in AI Explained with a Spreadsheet


- Published: 10 Feb 2025

- In this tutorial, we delve into the concept of Byte Pair Encoding (BPE) used in AI language processing, employing a practical and accessible tool: the spreadsheet.

This video is part of our series that aims to simplify complex AI concepts using spreadsheets. If you can read a spreadsheet, you can understand the inner workings of modern artificial intelligence.

🧠 Who Should Watch:

Individuals interested in AI and natural language processing.

Students and educators in computer science.

Anyone seeking to understand how AI processes language.

🤖 What You'll Learn:

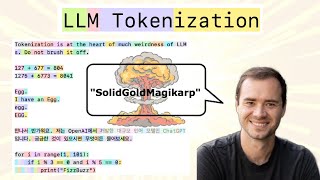

Tokenization Basics: An introduction to how tokenization works in language models like ChatGPT.

Byte Pair Encoding (BPE): A detailed walkthrough of the BPE algorithm, covering both its learning phase and its application to tokenizing language data.
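The two BPE phases can be sketched in a few lines of Python. This is a minimal toy version under stated assumptions, not the spreadsheet's exact procedure or GPT-2's implementation: words are split on whitespace and broken into characters, there is no end-of-word marker, a pair's score is simply its frequency (weighted by how often each word occurs), and ties go to the pair seen first. The function names `count_pairs`, `merge_pair`, `learn_bpe`, and `encode` are hypothetical.

```python
from collections import Counter


def count_pairs(words: dict[tuple[str, ...], int]) -> Counter:
    """Count adjacent symbol pairs, weighted by how often each word occurs."""
    pairs: Counter = Counter()
    for symbols, freq in words.items():
        for a, b in zip(symbols, symbols[1:]):
            pairs[(a, b)] += freq
    return pairs


def merge_pair(symbols: tuple[str, ...], pair: tuple[str, str]) -> tuple[str, ...]:
    """Replace non-overlapping occurrences of `pair` (left to right) with one symbol."""
    out = []
    i = 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            out.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            out.append(symbols[i])
            i += 1
    return tuple(out)


def learn_bpe(corpus: str, num_merges: int) -> list[tuple[str, str]]:
    """Learning phase: repeatedly merge the most frequent adjacent pair."""
    # Assumption: whitespace word split, each word starts as single characters.
    words = {tuple(w): c for w, c in Counter(corpus.split()).items()}
    merges = []
    for _ in range(num_merges):
        pairs = count_pairs(words)
        if not pairs:
            break  # every word is already a single symbol
        best = max(pairs, key=pairs.get)  # ties: first pair encountered wins
        merges.append(best)
        words = {merge_pair(w, best): c for w, c in words.items()}
    return merges


def encode(word: str, merges: list[tuple[str, str]]) -> list[str]:
    """Application phase: replay the learned merges, in order, on a word."""
    symbols = tuple(word)
    for pair in merges:
        symbols = merge_pair(symbols, pair)
    return list(symbols)
```

On the corpus `"low low low lower lowest"`, three merges yield `('l', 'o')`, `('lo', 'w')`, and `('low', 'e')`. With those, `encode("lowest", merges)` returns `['lowe', 's', 't']`, and the unseen word `"slow"` still splits into known pieces: `['s', 'low']`.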

Spreadsheet Simulation: A hands-on demonstration of GPT-2's tokenization process using a spreadsheet model.
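GPT-2 runs BPE over UTF-8 bytes rather than characters, so any text can be tokenized without an "unknown" token. OpenAI's released GPT-2 encoder first maps each of the 256 byte values to a printable Unicode character, which is why a leading space shows up as `Ġ` in GPT-2 token strings. The sketch below reproduces that byte-to-character table using only the standard library. It deliberately leaves out GPT-2's regex pre-tokenization step and its learned merge table, and the helper names `to_byte_symbols` and `from_byte_symbols` are hypothetical.

```python
def bytes_to_unicode() -> dict[int, str]:
    """Map each of the 256 byte values to a printable Unicode character.

    Printable bytes keep their own character; the rest (control characters,
    space, DEL, etc.) are reassigned to code points starting at 256.
    """
    printable = (
        list(range(ord("!"), ord("~") + 1))
        + list(range(ord("¡"), ord("¬") + 1))
        + list(range(ord("®"), ord("ÿ") + 1))
    )
    bs = printable[:]
    cs = printable[:]
    n = 0
    for b in range(256):
        if b not in bs:
            bs.append(b)
            cs.append(256 + n)  # shift non-printable bytes past the Latin-1 range
            n += 1
    return {b: chr(c) for b, c in zip(bs, cs)}


BYTE_ENCODER = bytes_to_unicode()
BYTE_DECODER = {ch: b for b, ch in BYTE_ENCODER.items()}


def to_byte_symbols(text: str) -> str:
    """UTF-8 encode `text`, then spell each byte with its mapped character."""
    return "".join(BYTE_ENCODER[b] for b in text.encode("utf-8"))


def from_byte_symbols(symbols: str) -> str:
    """Invert `to_byte_symbols`: characters -> bytes -> UTF-8 text."""
    return bytes(BYTE_DECODER[ch] for ch in symbols).decode("utf-8")
```

For example, `to_byte_symbols(" world")` gives `"Ġworld"`, and a two-byte character such as `"é"` becomes two symbols. BPE merges are then learned over these symbols, so the base vocabulary is exactly 256 entries.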

Limitations and Alternatives: A discussion of the challenges of BPE and a look at other tokenization methods.

🔗 Resources:

Learn more and download the Excel sheet at spreadsheets-a...

Wow! What an explanation. It can't get better than this. Thank you so much.

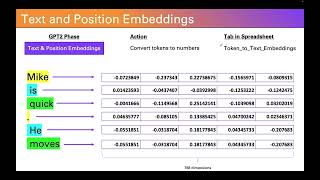

This is amazing! Thank you for making this. Really looking forward to your next video about text and position embeddings.

The quality of this video has blown my mind! Really great stuff and looking forward to your next video. Thank you!

Seeing this video made me realize I'm not even close to being able to say I know Excel. Your understanding of the concepts is really, really deep, since implementing something like GPT-2 in Excel requires a thorough understanding of all of them. Hats off to you.

This is an incredible video. The way you describe these advanced AI concepts is awesome. I'd love to see more, maybe on the GAN technology that Sora uses

So clear! Great instruction!

Incredibly brilliant. Words fail me. Thank you for sharing this, it helps me enormously in understanding AI.

On a side note: how can you handle Excel so ingeniously? Unbelievable!

Really cool stuff; it really helped me understand how BPE works. Looking forward to your follow-up videos!

Very good explanation. Are you going to cover positional embeddings?

Thank you! Yes, I just haven't had time to get around to it yet. Embeddings will be the next video. I'm not sure whether I'll cover token and positional embeddings in the same video or break them up into two parts.

Large language models, and AI in general, seem to do a good job of compressing their input and then reconstructing an approximation of it. Is this a byproduct of neural networks in general or only of specific subsets? Could you build a large language model, or many purpose-built AIs suited to various compression situations, that more often than not perform better than current compression algorithms?

I learned a ton from your video

Very cool! Thanks

great explanations

Amazing work. Please keep going :D

Great video. How exactly are the scores calculated from the possible pairs? You said a standard filter? Could you explain more?

Why does having a large embedding table matter? Can't it just be treated as a lookup into the table (which should be pretty manageable regardless of size)? Do we really have to perform the actual matrix multiply?

Why did they include Reddit usernames in the training data? Considering the early days, when people would pick extreme or offensive names as a meme, that is just asking for trouble.
