Spiking Neural Networks for More Efficient AI Algorithms


- Published: Oct 6, 2024

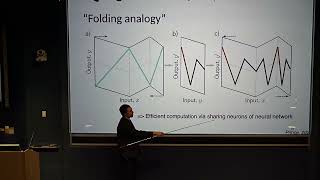

- Spiking neural networks (SNNs) have received little attention from the AI community, although they compute in a fundamentally different -- and more biologically inspired -- manner than standard artificial neural networks (ANNs). This can be partially explained by the lack of hardware that natively supports SNNs. However, several groups have recently released neuromorphic hardware that supports SNNs. I will describe example SNN applications that my group has built that demonstrate superior performance on neuromorphic hardware, compared to ANNs on ANN accelerators. I will also discuss new algorithms that outperform standard RNNs (including GRUs, LSTMs, etc.) in both spiking and non-spiking applications.
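
To make the contrast with standard ANN units concrete, here is a minimal sketch of a leaky integrate-and-fire (LIF) neuron, a common textbook spiking model. It is not taken from the talk: the function name `simulate_lif`, the parameter values, and the simple Euler integration are all assumptions chosen for illustration.

```python
def simulate_lif(input_current, t_sim=1.0, dt=0.001, tau=0.02, v_threshold=1.0):
    """Euler-integrate tau * dv/dt = input_current - v; return spike times in seconds.

    All parameter values are illustrative assumptions, not values from the talk.
    """
    v = 0.0
    spike_times = []
    n_steps = int(round(t_sim / dt))
    for step in range(n_steps):
        # Leaky integration: the voltage decays toward the input current.
        v += dt / tau * (input_current - v)
        if v >= v_threshold:
            # Emit a discrete spike event and reset the membrane voltage.
            spike_times.append(step * dt)
            v = 0.0
    return spike_times


if __name__ == "__main__":
    for current in (0.5, 2.0, 4.0):
        print(current, len(simulate_lif(current)))
```

Unlike an ANN unit, which outputs a real number on every step, this neuron communicates only through discrete spike events. Below threshold it stays silent, and stronger input produces more spikes per second.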

Speaker Bio:

Professor Chris Eliasmith is currently Director of the Centre for Theoretical Neuroscience at the University of Waterloo and holds a Canada Research Chair in Theoretical Neuroscience. He has authored or co-authored two books and over 90 publications in philosophy, psychology, neuroscience, computer science, and engineering. His book, 'How to build a brain' (Oxford, 2013), describes the Semantic Pointer Architecture for constructing large-scale brain models. His team built what is currently the world's largest functional brain model, 'Spaun,' for which he received the coveted NSERC Polanyi Prize. In addition, he is an expert on neuromorphic computation, writing algorithms for, and designing, brain-like hardware. His team has shown state-of-the-art efficiency on neuromorphic platforms for deep learning, adaptive control, and a variety of other applications.


This is the single most important problem in ML right now. Data uncertainty and a lack of generalizing power make traditional ML brittle. Online learning (OL) is done at the data-pipeline level rather than intrinsically in the model, which won't scale or get us closer to AGI. In the future we'll look back at OL pipelines and see them as primitive. A sound basis for AI must incorporate time/OL, which is something traditional ANNs ignore, as they are stationary solutions. ANNs need to be re-evaluated from first principles, with time/OL baked in. Time-dependent networks like spike/phase oscillators are a promising way forward if time/OL is intrinsic, but the ML community has been seduced by traditional ANNs.

Interestingly, I just came across this clip where Hopfield talks about the limitations of offline-first networks, and what I perceive as a flaw in simple feed-forward ANN design. The ideas behind Hopfield networks are extremely fascinating. Hopfield also touched on emergent large-scale oscillatory behavior in this talk. There are differential equations that can be used to study this (Kuramoto).

ruclips.net/video/DKyzcbNr8WE/видео.html
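
For readers unfamiliar with the Kuramoto model mentioned above, here is a rough sketch of it: each oscillator follows dθ_i/dt = ω_i + (K/N) Σ_j sin(θ_j − θ_i), and the order parameter r measures how synchronized the population is. The function names, parameter values, and Euler integration are assumptions for illustration, and nothing here comes from the linked clip.

```python
import cmath
import math
import random


def order_parameter(phases):
    """Return (r, psi): coherence r in [0, 1] and the mean phase psi."""
    mean = sum(cmath.exp(1j * theta) for theta in phases) / len(phases)
    return abs(mean), cmath.phase(mean)


def simulate_kuramoto(n=50, coupling=2.0, freq_spread=0.1,
                      t_sim=20.0, dt=0.01, seed=0):
    """Euler-integrate the Kuramoto model; return coherence before and after."""
    rng = random.Random(seed)
    phases = [rng.uniform(0.0, 2.0 * math.pi) for _ in range(n)]
    freqs = [rng.gauss(0.0, freq_spread) for _ in range(n)]
    r_start, _ = order_parameter(phases)
    for _ in range(int(round(t_sim / dt))):
        r, psi = order_parameter(phases)
        # Mean-field identity: (1/N) * sum_j sin(theta_j - theta_i)
        # equals r * sin(psi - theta_i), which avoids the O(N^2) double loop.
        phases = [
            theta + dt * (omega + coupling * r * math.sin(psi - theta))
            for theta, omega in zip(phases, freqs)
        ]
    r_end, _ = order_parameter(phases)
    return r_start, r_end


if __name__ == "__main__":
    print(simulate_kuramoto())
```

With a coupling strength well above the spread of natural frequencies, the oscillators start with random phases and end up nearly phase-locked, which is one simple picture of emergent large-scale oscillation.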


Very excited for the future of hardware!

I have always thought about new types of hardware that perform tasks the way the brain does. This exciting and wonderful talk gave me the impression that my thinking wasn't off base. Looking forward to hearing more about it.

You're doing absolutely amazing work here. Can you point me to a simple example or information about how to perform online learning in a spiking neural network?

I believe the Nengo package that Prof. Eliasmith mentioned is capable of online training. Training with spiking neurons is tricky, though, as backpropagation is not usually available (I guess you can still do it with backprop if you are working with a rate code). The only biologically feasible rule that I know of is PES.
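
As a rough illustration of the idea behind PES-style online learning, here is a simplified sketch in plain Python. It deliberately does not use the Nengo API. It assumes rate-based rectified-linear neurons rather than spiking ones, and every name and parameter value (`tuning_curves`, `train_online`, the learning rate, and so on) is hypothetical. The core step is that each decoder weight moves against the current error, in proportion to its neuron's activity, one sample at a time.

```python
import random


def tuning_curves(x, encoders, gains, biases):
    """Rectified-linear firing rates of each neuron for a scalar input x."""
    return [max(0.0, g * e * x + b) for e, g, b in zip(encoders, gains, biases)]


def rms_error(decoders, params, target_fn, n_points=101):
    """RMS decoding error on an evenly spaced grid over [-1, 1]."""
    total = 0.0
    for k in range(n_points):
        x = -1.0 + 2.0 * k / (n_points - 1)
        acts = tuning_curves(x, *params)
        estimate = sum(d * a for d, a in zip(decoders, acts))
        total += (estimate - target_fn(x)) ** 2
    return (total / n_points) ** 0.5


def train_online(target_fn, n_neurons=50, n_steps=20000,
                 learning_rate=0.1, seed=0):
    """Learn decoders one sample at a time; return (error_before, error_after)."""
    rng = random.Random(seed)
    params = (
        [rng.choice((-1.0, 1.0)) for _ in range(n_neurons)],   # encoders
        [rng.uniform(0.5, 2.0) for _ in range(n_neurons)],     # gains
        [rng.uniform(-1.0, 1.0) for _ in range(n_neurons)],    # biases
    )
    decoders = [0.0] * n_neurons
    error_before = rms_error(decoders, params, target_fn)
    for _ in range(n_steps):
        x = rng.uniform(-1.0, 1.0)
        acts = tuning_curves(x, *params)
        # Error signal: decoded estimate minus target, for this one sample.
        error = sum(d * a for d, a in zip(decoders, acts)) - target_fn(x)
        # Error-driven update: each decoder changes in proportion to the
        # error and to its own neuron's activity.
        scale = learning_rate / n_neurons * error
        decoders = [d - scale * a for d, a in zip(decoders, acts)]
    return error_before, rms_error(decoders, params, target_fn)


if __name__ == "__main__":
    print(train_online(lambda x: x))
```

No batches or stored dataset are involved: the weights are adjusted after every input, which is what makes the rule usable while the network is running.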

@@solaweng Do you think maybe they're using Hebbian learning? Thanks for responding, I was waiting 5 months for that. :-)

@@BrianMantel I just checked the new Nengo docs and it seems there are different learning rules now. The Oja learning rule has something to do with Hebbian coactivity, so I guess the answer is yes. You can check it out here: www.nengo.ai/nengo/examples/learning/learn-unsupervised.html
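
To show how Oja's rule relates to Hebbian coactivity, here is a small sketch of the textbook form of the rule, Δw = η·y·(x − y·w), for a single linear unit. It is independent of Nengo. The function names, the synthetic data, and the learning rate are assumptions made for illustration.

```python
import math
import random


def correlated_samples(n_samples=5000, noise=0.1, seed=1):
    """2-D inputs whose two components share a common source signal."""
    rng = random.Random(seed)
    samples = []
    for _ in range(n_samples):
        s = rng.gauss(0.0, 1.0)
        samples.append((s + noise * rng.gauss(0.0, 1.0),
                        s + noise * rng.gauss(0.0, 1.0)))
    return samples


def oja_train(samples, learning_rate=0.01, seed=0):
    """Apply Oja's rule once per sample and return the final weight vector."""
    rng = random.Random(seed)
    w = [rng.uniform(-0.5, 0.5) for _ in samples[0]]
    for x in samples:
        y = sum(wi * xi for wi, xi in zip(w, x))  # postsynaptic activity
        # Hebbian term y * x strengthens weights of coactive inputs;
        # the decay term -y^2 * w keeps the weight norm from blowing up.
        w = [wi + learning_rate * y * (xi - y * wi) for wi, xi in zip(w, x)]
    return w


if __name__ == "__main__":
    w = oja_train(correlated_samples())
    print(w, math.hypot(*w))
```

The plain Hebbian term alone would grow without bound. The extra decay term is what makes the weights settle near unit length, pointing along the direction in which the inputs vary together.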

Truly, a fascinating talk! I enjoyed it.

Great video intro for our research fellows starting hardware-based spiking neural network research at MYWAI Labs.

Very good lecture. Was looking for material for my master's thesis, and I found lots of interesting pointers.

What is your master's thesis about, if you don't mind?

I'm still discussing with my supervisors, so I cannot say yet. But I can come back to comment here once I know 😄

@@postnubilaphoebus96 good luck with it 😁

Thanks! The project also only starts around January, so there's still some time.

@@postnubilaphoebus96 Do let me know about it. 😁 Btw, I have a BCI mindmap; the link is available in the description of my RUclips channel. I recently started adding some material to its computational neuroscience section.

Every time someone says "compute" instead of computation or computing, I say to myself "life, liberty and the pursuit of happy".

great lecture!

great work...

I wonder how SNNs on Loihi compare to just an iteratively pruned ANN on something like EIE from Han et al. (2016). Is it mainly the fact that it’s a sparse network and hardware for it that give it good performance with less energy vs GPU? Or is the benefit to efficiency more from spikes and asynchrony?

SO helpful. Thank you so much! Other than the math part (which I fear I may possibly have fallen asleep for), everything made sense to me except for the part about your shirt. hmmmm. 🙃

I am wondering what training algorithm is used for these networks. Does backpropagation work with SNNs?

It's weird, it seems like there are parallels between LMU and HiPPO.

Amazing talk. Absolutely no way it's free.

If this is all true, why isn't everyone working on neuromorphic computing and SNNs? This is really confusing to me, because I would expect researchers to all turn towards this research area if they knew it to be accurate.

Because I don't think neuromorphic chips are easily debuggable, since the neurons would become physical. Also, any use of backpropagation (which is the industry's focus right now) defeats the purpose of spiking neural networks in the first place, IMO; how are you going to calculate a gradient for neurons whose synapses loop back? The only choice is probably to not have loops, which takes SNNs further and further away from how the brain actually works. It's worth noting that the brain doesn't use backpropagation.

Because to get traction, it must be viable in industry as well.

Is it possible to download the slides somewhere?

thanks for the video!

48:50 that aged well

This is fucking amazing and I really want to do research on it

I came here to see spiking networks, and then he finishes by presenting LMUs. Like, how is that even related?

LMUs (unlike many other RNNs esp. LSTMs etc) run well on spiking hardware.

Sir, I want to write a research paper on spiking neural networks. Would you please suggest some applications? That would make it easier to choose a field within this topic.

For a ML model to generalize like humans do, it has to efficiently update its weights during inference. This does seem like a very promising direction.

Klllllllllll