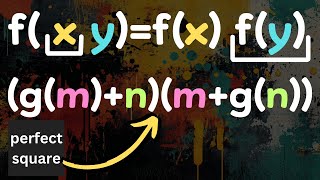

when three triangle is one square


- Published: 17 Nov 2024


this solution is really just "here is a function that constructs solutions, qed". i would be much more interested in how to derive this function.

Maybe being an affine function necessitates the form Mv+b, so the matrix M and vector b were found from initial conditions, by exploring along the triangular numbers.

@akirakato1293 "Maybe affine function necessitates the form Mv+b"

Yes, that's the very definition of an affine function. However, it's not clear at all why one even _should_ get a new solution by applying an affine function to another solution, that was not motivated at all.

@bjornfeuerbacher5514 I mean, there are some things in mathematics where the motivation doesn't come from the specific problem but from a different problem, theorem, or technique. In this case, for example, do you know of infinite descent? There you essentially apply a procedure to a known solution of the problem to get another solution, i.e. a function that takes you from one solution to another recursively. What you do here is try to find a function that takes a solution vector as input and returns another solution, and that is linear in the x and y coordinates of the solution (for the sake of simplicity; if no such function exists, you try polynomials in the x, y coordinates, and so on). Then you plug it back into the equation to find the constants that define such a function. After that, having found the smallest solutions, you conjecture that recursively applying that function to them produces chains of solutions, and the next steps flow naturally.

@bjornfeuerbacher5514 i'm not sure it's "should", so much as "it would be convenient if one existed", and then someone went looking and found one?

i'm not sure how to reverse-engineer the process though. *if* some affine function F(n, a) = (xn+ya+z, in+ja+k) in ℕ exists, then plugging those into the relation will reduce back to the relation, as shown in the video. so the obvious dumb thing is to plug those in and compare coefficients (which gives you five equations in six variables), but you get a ghastly mess along the way, and then you still have to find a solution in ℕ.
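For anyone who wants to poke at this numerically, here is a minimal sketch. The map `step(n, a) = (9n + 4a + 2, 2n + a + 1)` is an assumption: it is one affine map of the shape F(n, a) above that was worked out separately, not copied from the video, so the video's function may look different. The helper names are made up for illustration. The code only checks that the map sends solutions of T_{n-1} + T_n + T_{n+1} = (n+a)^2 to solutions, starting from the two small seeds (0, 1) and (1, 1).

```python
def triangular(n):
    """T_n = n(n+1)/2, also valid for n = -1 (gives 0)."""
    return n * (n + 1) // 2


def is_solution(n, a):
    """Check T_{n-1} + T_n + T_{n+1} == (n + a)^2."""
    return triangular(n - 1) + triangular(n) + triangular(n + 1) == (n + a) ** 2


def step(n, a):
    """Hypothetical affine map (an assumption, not taken from the video)."""
    return 9 * n + 4 * a + 2, 2 * n + a + 1


def chain(seed, length):
    """Apply step repeatedly, starting from seed, and collect the results."""
    out = [seed]
    while len(out) < length:
        out.append(step(*out[-1]))
    return out


for seed in [(0, 1), (1, 1)]:
    solutions = chain(seed, 4)
    print(solutions, all(is_solution(n, a) for n, a in solutions))
```

This confirms the map works on these chains, but it says nothing about how to find it, which is the actual question in this thread.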

maybe there's some clever way to skip the algebra and turn this into a matrix equation directly? but then you still want natural coefficients.

i swear i've seen something like this done before, but i can't remember where. seems like a fascinating topic but i don't even know where to begin looking into it

For T_{n-1} + T_n, there's a classic demonstration: imagine an n x n matrix. T_n counts the entries of the upper (or lower...) triangular part, diagonal included, and T_{n-1} counts the entries of the strictly lower triangular part. The sum of the two is the complete n x n matrix.
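The same identity can also be checked algebraically, using only the closed form T_k = k(k+1)/2:

```latex
T_{n-1} + T_n
  = \frac{(n-1)n}{2} + \frac{n(n+1)}{2}
  = \frac{n\bigl((n-1) + (n+1)\bigr)}{2}
  = \frac{n \cdot 2n}{2}
  = n^2 .
```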

Did I miss something? I must have done. If we replaced the set of all v with just, say, (0,1) and the list that follows (0,1) is the only thing we consider, then can't we equivalently prove that there's no smallest element not contained in the list spawned by (0,1)? I must have glossed over some kind of simple thing, I'm sure, but I can't see what.

I agree with you, I think that what is missing from this proof is to show that (n0,a0) are non negative (otherwise they are not solutions) this can be done by proving that a8, which also means I would have to check all solutions for n

We also need n>1 because otherwise you get a negative for n after applying the inverse.

You need to make a few more assumptions to prove this, like showing a > 0 (which he previously mentioned) and a < n/4 (excluding small cases, which can be checked manually) so that n0 and n1 are both still positive.

This is why edge cases and conditions are important to consider. Glossing over the details like in this video leads to issues like this, which are easily resolved by making sure we know the domains of all things involved, and being careful about when certain things hold. For example, stating that the n should be non-negative is a fair thing to do (but not required, and if you don't require it, then you're indeed right, and the infinite descent truly is infinite, I think), and in this case, you can, after some effort I cannot be bothered to put in, show that inverting some solution not part of the 2 chains will yield a smaller one also not on the chains (this bit's easy), so if you pick the solution of smallest n not on the chains (this n must exist by the well-ordering principle), then invert it, you get a smaller one. But... can we be sure it's also a valid solution? We need to be sure that our n stays non-negative...

Etc. There seems to be a lot of housekeeping being swept under the rug, in short. I can hardly blame him, I don't particularly want to do it either.

Especially so, given that I'd be far more interested in an argument that there are no solutions lying outside either of the 2 infinite descent/ascent chains.

Ah, I missed something rather obvious. You can indeed safely discard negative n, since solutions with negative n can be turned into those with positive n anyways (I'll leave why as a trivial exercise), and there's a correspondence between the two such that we can account for all solutions by only considering non-negative n. But now the argument for minimality is probably more sound. I also missed something else obvious. Each n can only have 1 solution associated with it. This makes the descent argument much easier.
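The descent can be sketched in a few lines. This assumes the forward map (n, a) -> (9n + 4a + 2, 2n + a + 1), which was worked out separately and is not necessarily the function used in the video; `step_back` is its inverse, and both names are hypothetical. The loop stops as soon as the next step back would give a negative n, which is exactly the edge case discussed above.

```python
def step_back(n, a):
    """Inverse of the assumed map (n, a) -> (9n + 4a + 2, 2n + a + 1)."""
    return n - 4 * a + 2, -2 * n + 9 * a - 5


def descend(n, a):
    """Walk a solution down until the next step would leave n >= 0."""
    path = [(n, a)]
    while True:
        prev_n, prev_a = step_back(n, a)
        # Stop on a negative n, or if n fails to shrink (guards bad input).
        if prev_n < 0 or prev_n >= n:
            return path
        n, a = prev_n, prev_a
        path.append((n, a))


print(descend(153, 35))  # [(153, 35), (15, 4), (1, 1)]
print(descend(64, 15))   # [(64, 15), (6, 2), (0, 1)]
print(step_back(1, 1))   # (-1, 2): still satisfies the equation, but n < 0
```

Since step_back gives n - 4a + 2, n drops strictly whenever a >= 1, which is why the positivity of a matters for the argument.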

I'm like a cat watching tennis. I don't understand it but it's entertaining.

Regarding the "smallest solution not on the list", shouldn't you account for the possibility that you could find - theoretically - an infinite list of solutions also with negative n? If so, there is no smallest solution anyway.

I don't claim there are more solutions, just that that is a loose end in the proof; or what did I miss?

Note that T(-n) = T(n - 1). So there are no new solutions from negatives and we might as well consider only nonnegative n.
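Spelled out, assuming the closed form T(k) = k(k+1)/2 is extended to negative k:

```latex
T(-n) = \frac{(-n)(-n+1)}{2} = \frac{n(n-1)}{2} = T(n-1) .
```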

@TheEternalVortex42 Oh good point! No idea how that slipped by me. Should have been mentioned, though.

I'd love to see a video about Pell's equation

19:18

Another way of saying 3*Tn+1 is a perfect square, only when n = 0, 1, 6, 15, etc.
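A quick brute-force check of that list, as a sketch (the function names are made up for illustration). It relies on T_{n-1} + T_n + T_{n+1} = 3*T_n + 1, so it just tests whether 3*T_n + 1 is a perfect square:

```python
from math import isqrt


def triangular(n):
    """T_n = n(n+1)/2."""
    return n * (n + 1) // 2


def square_hits(limit):
    """Return (n, root) for every 0 <= n < limit with 3*T_n + 1 == root^2."""
    hits = []
    for n in range(limit):
        value = 3 * triangular(n) + 1
        root = isqrt(value)
        if root * root == value:
            hits.append((n, root))
    return hits


print(square_hits(200))
# [(0, 1), (1, 2), (6, 8), (15, 19), (64, 79), (153, 188)]
```

So the "etc." continues with n = 64 and n = 153.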

a is positive otherwise n0 is not forced to be less than n

a is positive because

(n+a)²=T_{n-1}+T_{n}+T_{n+1}

=n²+T_{n+1}>n²→a>0

The affine function seems to come out of nowhere, or am I missing something?

6:55 You totally lost me. Where did this function come from? How would one know that particular function was useful? What motivated turning to that?

T_(n-1)+T_n+T_(n+1) = 1+3+5+...+(2(n+a)-1) = (n+a)^2

A fun point on an ellipse is x = (a×sqrt(3))/2

While this is a very interesting method of proof, and a nice application of a descent-like argument, without any motivation for the choice of affine function, it's not too useful to know...

3 x 😮 = 😄 ?

I consider the icon and the triangle clickbait. Plus, lately these videos haven't been very entertaining. Way too much like going to school.

Never been this early 😂😂