Support Vector Machines (SVM) - Part 1 - Linear Support Vector Machines


- Published: 1 Oct 2024

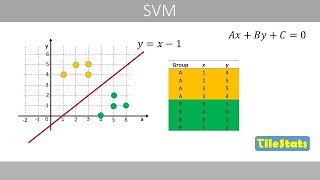

- In this lesson we look at Support Vector Machine (SVM) algorithms, which are used for classification.

Support Vector Machine (SVM) Part 2: Non Linear SVM • Non Linear Support Vec...

Videos on Neural Networks

Part 1: • Artificial Neural Netw... (Single Layer Perceptrons)

Part 2: • Artificial Neural Netw... (Multi Layer Perceptrons)

Part 3: • Artificial Neural Netw... (Backpropagation)

More Free Video Books: scholastic-vide...

The fundamental question is still unanswered by every tutor I have found so far: how do you assume/specify/determine the support vectors? It is of course unbelievable to assume the support vectors just by visualizing the data points. How can that work in a multi-dimensional space?

Please guide me. I need to program SVMs from scratch without using any library in Matlab or .Net.

As I understand it, the support vectors are the points that lie on the hyperplane: one first creates a plane between the two datasets, and then whichever points satisfy that plane's equation are called support vectors.

The main problem is to find the support vectors.

You must give the general algorithm to find the support vectors!!!

In an algorithm we don't have a visual in front of us.

Please explain how you calculate, or determine, the support vectors from the data set.
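One way to see how support vectors can be found without looking at a plot is a brute-force search over candidate sets on a tiny data set: guess which points lie on the margin, solve the standard hard-margin KKT equations for that guess, and keep the guess only if every multiplier is non-negative and every point is on the correct side of its margin. The support vectors are then the points with a positive multiplier. This is a sketch under stated assumptions: it uses NumPy, assumes the data are linearly separable, uses a hypothetical helper name `find_support_vectors`, and follows the textbook formulation w = sum_i alpha_i y_i x_i rather than the augmented-vector method from the video. Its cost grows exponentially with the number of points, so practical solvers use quadratic programming (for example SMO-type methods) instead.

```python
import itertools

import numpy as np


def find_support_vectors(X, y, tol=1e-9):
    """Hard-margin linear SVM by exhaustive search over candidate support-vector sets.

    Teaching sketch only: the number of candidate sets grows as 2**len(X).
    For a candidate set A we assume y_i (w.x_i + b) = 1 for every i in A and
    solve the KKT equations
        w = sum_{j in A} alpha_j y_j x_j,   sum_{j in A} alpha_j y_j = 0
    for alpha_A and b. A candidate is accepted only if all alphas are >= 0
    and every training point satisfies y_i (w.x_i + b) >= 1.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    n = len(X)
    gram = X @ X.T  # plain dot products x_i . x_j (no augmentation)
    for size in range(2, n + 1):
        for subset in itertools.combinations(range(n), size):
            A = list(subset)
            m = len(A)
            M = np.zeros((m + 1, m + 1))
            rhs = np.zeros(m + 1)
            # Rows 0..m-1: sum_j alpha_j y_j (x_j . x_i) + b = y_i for i in A.
            M[:m, :m] = gram[np.ix_(A, A)] * y[A][np.newaxis, :]
            M[:m, m] = 1.0
            rhs[:m] = y[A]
            # Last row: sum_j alpha_j y_j = 0.
            M[m, :m] = y[A]
            try:
                solution = np.linalg.solve(M, rhs)
            except np.linalg.LinAlgError:
                continue  # singular system: skip this candidate set
            alpha, b = solution[:m], solution[m]
            if np.any(alpha < -tol):
                continue  # a negative multiplier violates the KKT conditions
            w = (alpha * y[A]) @ X[A]
            if np.all(y * (X @ w + b) >= 1 - tol):
                support = [i for i, a in zip(A, alpha) if a > tol]
                return w, b, support
    raise ValueError("no separating hyperplane found; data may not be separable")


# Hypothetical toy data (not from the video): class -1 on the left, class +1 on the right.
X = [(2, 1), (2, -1), (0, 0), (1, 2), (4, 0), (5, 1), (6, -1)]
y = [-1, -1, -1, -1, 1, 1, 1]
w, b, support = find_support_vectors(X, y)
print("w =", w, "b =", b, "support vectors:", [X[i] for i in support])
```

On this made-up data the search should report (2, 1), (2, -1) and (4, 0) as support vectors, with w = (1, 0) and b = -3, i.e. the boundary x1 = 3. Nothing in the procedure depends on being able to see the points, so it applies unchanged in more dimensions (only slower).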

This was great. Please provide more solutions on Naive Bayes, Linear and Logistic Regression; very helpful.

Great simplified explanation, but at about 18 minutes, when describing the four-support-vector version, why is S4 = [3 1 0] and not [3 1 1]? Many thanks.

Thanks. It eases the terminology: kernel, Lagrange multiplier, vector algebra, etc. The procedure appears to work on trivial cases, but I've tried it with a few points and it doesn't seem to yield correct results.

At 13:46, if w = (1, 0), why is it a vertical line?

Can anybody tell me how we find the values of the alphas?

Amazing! :)))) Loved it. I didn't find any other content like this.

Why did you choose the bias to be 1?

You can choose any other positive constant too; it doesn't affect much, so we choose the simpler term.
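For the three-support-vector example, the constant appended to each vector does not change the final boundary: three linearly independent augmented vectors in 3-D fix w~ uniquely through the conditions w~ . s~_i = y_i, and those conditions say w . s_i + c * w~[3] = y_i, so w and the offset b = c * w~[3] come out the same for any c. The sketch below checks this numerically. It assumes S1 = (2, 1), S2 = (2, -1), S3 = (4, 0) with labels -1, -1, +1 (these reproduce the alpha values -3.25, -3.25, 3.5 quoted in the comments), assumes NumPy, and uses a hypothetical helper name `boundary_for_bias`. The argument relies on the augmented vectors being independent and as many as the augmented dimension; it is not a general proof for other configurations.

```python
import numpy as np


def boundary_for_bias(points, labels, c):
    """Solve the augmented system with constant c appended; return (w, b)."""
    S = np.array([[*p, c] for p in points], dtype=float)  # augmented vectors s~_i
    G = S @ S.T                                            # G[i, j] = s~_i . s~_j
    alpha = np.linalg.solve(G, np.asarray(labels, dtype=float))
    w_tilde = alpha @ S                                    # w~ = sum_j alpha_j s~_j
    # w~ . s~ = w . x + w~[-1] * c, so the ordinary offset is b = c * w~[-1].
    return w_tilde[:-1], c * w_tilde[-1]


points = [(2, 1), (2, -1), (4, 0)]   # assumed S1, S2, S3
labels = [-1, -1, 1]
for c in (1.0, 2.0, 5.0):
    w, b = boundary_for_bias(points, labels, c)
    print(f"c = {c}: w = {w}, b = {b:.6f}")
```

With c = 1 the offset is simply the last entry of w~, which is why the bias of 1 is the convenient choice.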

10000000000 thanks. It's very useful.

Can you please explain where the equation a1S1S1 + a2S2S2 etc. was derived from? I haven't found anyone who could explain that.
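The equations follow from two assumptions used in the method: the augmented weight vector w~ is a linear combination of the augmented support vectors s~_j (each support vector with the bias 1 appended), and every support vector lies exactly on its margin, w~ . s~_i = y_i. Substituting the first into the second gives one equation per support vector. The index i is fixed within each equation, so the first equation contains ᾱ2 (s~2 . s~1) rather than s~2 . s~2. The numeric version below assumes S1 = (2, 1), S2 = (2, -1), S3 = (4, 0) with y = (-1, -1, +1); these points are an assumption, chosen because they reproduce the values ᾱ1 = ᾱ2 = -3.25, ᾱ3 = 3.5 quoted in the comments.

```latex
\begin{aligned}
\tilde{w} &= \sum_{j=1}^{3} \bar\alpha_j\,\tilde{s}_j,
\qquad \tilde{w}\cdot\tilde{s}_i = y_i
\;\Longrightarrow\;
\sum_{j=1}^{3} \bar\alpha_j\,(\tilde{s}_j\cdot\tilde{s}_i) = y_i \quad (i = 1,2,3),\\[4pt]
6\bar\alpha_1 + 4\bar\alpha_2 + 9\bar\alpha_3 &= -1,\\
4\bar\alpha_1 + 6\bar\alpha_2 + 9\bar\alpha_3 &= -1,\\
9\bar\alpha_1 + 9\bar\alpha_2 + 17\bar\alpha_3 &= 1 .
\end{aligned}
```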

I have one confusion regarding the offset. Why are you changing the sign of the offset?

Only a few special cases are shown, where the solution is a line parallel to either the x or the y axis. I don't know whether this solution works for more general cases. Actually, I think the form of the solution is not correct: it is not the right formulation if you derive the optimization solution from the KKT conditions. The w vector is a linear combination of the support vectors, but the augmented w vector is not a linear combination of the augmented support vectors. At least that is what I think now; I can't prove that the two are equivalent, so I think the solution provided in this video is wrong and just happens to work for the special cases in the video. If someone can prove that the solution in the video is correct, please correct me.

The correct solution is from the MIT video here:

ruclips.net/video/_PwhiWxHK8o/видео.html

How did you determine that there will be only three support vectors? Why not 4 or 2? And what will you do when you don't know which vectors are the support vectors? How will you proceed?

How do you mathematically find the support vectors?

Thank you so much for this explanation. It cleared up almost 80% of the concept for me...

Part 1 is great. Could you please upload Part 2 as well? I would be very thankful to you.

Why did you add the bias of 1?

I see that classifying these new points must use the bias offset as follows:

W·X + b ... does this affect the final result?
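Once the augmented weight vector is known, its last component plays the role of b, and a new point is classified by the sign of w . x + b. The offset does matter: without it, every boundary w . x = 0 would have to pass through the origin. A minimal sketch, assuming the w = (1, 0) mentioned at 13:46 together with an offset b = -3 (the offset value is an assumption here, and `classify` is a hypothetical helper name):

```python
def classify(x, w=(1.0, 0.0), b=-3.0):
    """Return +1 or -1 from the sign of w . x + b (points on the boundary get +1)."""
    score = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1 if score >= 0 else -1


for point in [(5, 2), (1, -4), (3.5, 0)]:
    print(point, "->", classify(point))
```

Assigning boundary points to +1 is an arbitrary tie-breaking choice in this sketch.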

Please make a video on how we calculate the alpha value of each sample in the training set.

Sir, you took 1 and -1 because there are two classes. Suppose we have three classes; what values should we take then?

1. Why add a bias in the first place?

2. Why is the bias 1?

The equation I found for w is multiplied by the output yi. Why is that not considered here?
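In the standard (KKT) derivation of the hard-margin SVM the label does appear: the multipliers alpha_i are non-negative and the sign comes from y_i. The video's coefficients ᾱ may be negative, which absorbs that sign, but they multiply the augmented vectors, so in general they are not numerically equal to alpha_i y_i. The standard conditions, with a worked check that assumes the support vectors S1 = (2, 1), S2 = (2, -1) in class y = -1 and S3 = (4, 0) in class y = +1 (these points are an assumption, not taken from the video):

```latex
\begin{aligned}
& w = \sum_i \alpha_i y_i x_i, \qquad \sum_i \alpha_i y_i = 0, \qquad \alpha_i \ge 0,
  \qquad y_i\,(w\cdot x_i + b) = 1 \ \text{whenever } \alpha_i > 0,\\[4pt]
& \alpha_1 = \alpha_2 = \tfrac14,\ \alpha_3 = \tfrac12:\quad
  w = -\tfrac14\,(2,1) - \tfrac14\,(2,-1) + \tfrac12\,(4,0) = (1,0),\qquad
  b = y_3 - w\cdot s_3 = 1 - 4 = -3 .
\end{aligned}
```

For these points this is the same boundary, x1 = 3, that the augmented method yields, even though the multipliers (1/4, 1/4, 1/2) differ from the ᾱ values (-3.25, -3.25, 3.5).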

At 2:16, could you explain how to select the support vectors S1, S2, S3 in a computational approach? We can recognize them by observation, but to do it automatically I think we need a way to decide which points among many data points should be considered support vectors.

Did you find an answer to this elsewhere? I have the same doubt. Can you help, please?

I hope this will end my thesis revisions 😭😭😭

It's tilde, not tidle. Good video.

The vector should be horizontal, since w = (1, 0).

Why does w = {1, 0} mean a vertical line and {0, 1} a horizontal line? When defining the first support vector S1, it was represented as S1 = {2, 1} because it lies at (2, 1) on the coordinate axes. Shouldn't the same interpretation apply to w = {1, 0}, meaning that the straight line passes through (1, 0)?

Did you ever get an answer to this?

How do you draw the boundary from W? You mention that W(3) is the bias, but what do W(1) and W(2) represent?

Great video, but I do have a question: how did you get x2 = 1 for w = [0, 1, -1]? I understand the 1 but not the x2. Thank you.

Never mind, I get what you mean by x2.
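Reading the boundary off W: the first two components (W(1), W(2)) form the normal vector of the line and W(3) is the offset, so the boundary is W(1)·x1 + W(2)·x2 + W(3) = 0. A normal of (1, 0) points along the x1 axis, which makes the line itself vertical; W is a direction, not a point on the line the way S1 = (2, 1) is a data point. A small sketch (`describe_boundary` is a hypothetical helper; [0, 1, -1] is the vector quoted in the comment above, while [1, 0, -3] is an assumed example):

```python
from fractions import Fraction


def describe_boundary(w_aug):
    """Turn an augmented weight vector [w1, w2, w3] into the line w1*x1 + w2*x2 + w3 = 0."""
    w1, w2, w3 = (Fraction(v) for v in w_aug)
    if w1 == 0 and w2 == 0:
        raise ValueError("w1 and w2 cannot both be zero")
    if w2 == 0:
        return f"vertical line x1 = {-w3 / w1}"    # normal (w1, 0) points along x1
    if w1 == 0:
        return f"horizontal line x2 = {-w3 / w2}"  # normal (0, w2) points along x2
    return f"line x2 = {-w1 / w2} * x1 + {-w3 / w2}"


print(describe_boundary([0, 1, -1]))  # horizontal line x2 = 1
print(describe_boundary([1, 0, -3]))  # vertical line x1 = 3
```

Solving for x1 or x2 moves the offset to the other side of the equation, which is why its sign flips (W(3) = -3 gives x1 = 3).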

Best explanation, very useful. Can you please tell me how to plot support vectors in text classification?

Why bias has been taken as 1? Will not the result change if bias is changed?

I have the same question. Did you find the answer?

Permission to Learn Sir

When using four support vectors, the s4 point has a bias of zero; was that intentional or accidental?

Is this how you compute the canonical weights?

Excellent !

This video is really helpful to my teacher; she knows nothing but copying notes from YouTube... So if you are watching my comment: "Sudhar Jao" (mend your ways).

Thanks sir

Nice explanation... thanks, bro.

I know the support vectors are the ones closest to the class boundary, but that is because it is still observable in a 2-dimensional space. In a 4-, 5- or 6-dimensional space, how do you find the support vectors?

If there are more than 4 support vectors, how do we solve the equations manually?

You can use matrix index or linear programming, I think.
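For more than three support vectors, or for data with more dimensions, the same augmented system can be built and solved with a matrix routine instead of by hand: form the Gram matrix G_ij = s~_i . s~_j, solve G ᾱ = y, and set w~ = sum_j ᾱ_j s~_j. The sketch assumes NumPy, assumes the support vectors are already known, and requires the augmented vectors to be linearly independent (otherwise G is singular and `np.linalg.solve` raises). The helper name and the 3-D points are hypothetical. It only solves the equations from the video; it does not find the support vectors or prove that the margin is maximal.

```python
import numpy as np


def solve_augmented_system(support_vectors, labels, bias=1.0):
    """Solve sum_j a_j (s~_j . s~_i) = y_i and return (alphas, augmented w~)."""
    S = np.asarray(support_vectors, dtype=float)
    S_aug = np.hstack([S, np.full((len(S), 1), bias)])  # append the bias constant
    G = S_aug @ S_aug.T                                  # Gram matrix of augmented vectors
    alphas = np.linalg.solve(G, np.asarray(labels, dtype=float))
    w_aug = alphas @ S_aug                               # w~ = sum_j a_j s~_j
    return alphas, w_aug


# Hypothetical 3-D example with four chosen support vectors.
svs = [(2, 1, 0), (2, -1, 0), (2, 0, 1), (4, 0, 0)]
ys = [-1, -1, -1, 1]
alphas, w_aug = solve_augmented_system(svs, ys)
print("alphas =", alphas)
print("w~ =", w_aug)  # last entry is the offset when bias = 1
```

Here w~ should come out as (1, 0, 0, -3), i.e. the plane x1 = 3.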

Why is there a need to augment the vectors? Isn't augmentation usually done to project points to a higher dimension to make them linearly separable? The points are separable without augmentation.

Could you also explain how we get the 3 equations at 6:19?

+Shubham Rathi I think he just does it to simplify the expression w·x + b to just w̃·x̃, where x_0 = 1 and w_0 = b.

+TheGr0eg You're right :)
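Written out, the augmentation in that reply is a bookkeeping identity. Appending the constant 1 places every point in the affine plane x̃_0 = 1 of a space one dimension higher, so, unlike a kernel mapping, it does not make non-separable data separable; it only lets the offset be handled as one more weight:

```latex
\tilde{x} = (1, x_1, \dots, x_d), \quad \tilde{w} = (b, w_1, \dots, w_d)
\;\Longrightarrow\;
\tilde{w}\cdot\tilde{x} = b\cdot 1 + \sum_{k=1}^{d} w_k x_k = w\cdot x + b .
```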

How did he get the 3 equations at 6:16?

+Shubham Rathi He does not derive them. They are basically a result of the mathematical theory of SVMs, which he does not go into here. I don't really feel comfortable explaining that, though, because I have not completely understood it myself yet.

Thanks for the video. Is there a Part II?

thanks, the best lesson I found

It would be nice if you spoke a little louder.

Superb explanation !!!

Please explain how I can compute ᾱ1 = ᾱ2 = -3.25 and ᾱ3 = 3.5?

Your question refers to time stamp 9:30 in the video. These are 3 simultaneous equations. First remove alpha1: Eq 1 x 2 - Eq 2 x 3, and Eq 2 x 9 - Eq 3 x 4. Then use the resulting two equations to eliminate alpha2 and find alpha3. Then substitute alpha3 to find alpha2, and then alpha1. Or simply use Cramer's rule: www.purplemath.com/modules/cramers.htm. Hope you got it.
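The elimination described above can be cross-checked with Cramer's rule. The sketch assumes the three equations come from S1 = (2, 1), S2 = (2, -1), S3 = (4, 0), each augmented with a bias of 1, and labels (-1, -1, +1). Those points are an assumption here, chosen because they reproduce the quoted ᾱ values and are consistent with the multipliers used in the elimination (x2, x3, x9, x4). Exact fractions avoid rounding; `det3` and `cramer3` are hypothetical helper names.

```python
from fractions import Fraction


def det3(m):
    """Determinant of a 3x3 matrix by cofactor expansion along the first row."""
    (a, b, c), (d, e, f), (g, h, i) = m
    return a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)


def cramer3(A, rhs):
    """Solve A x = rhs for a 3x3 system with Cramer's rule."""
    D = det3(A)
    if D == 0:
        raise ValueError("singular system")
    solution = []
    for col in range(3):
        # Replace column `col` of A by the right-hand side.
        A_col = [[rhs[r] if c == col else A[r][c] for c in range(3)] for r in range(3)]
        solution.append(Fraction(det3(A_col), D))
    return solution


# Assumed augmented support vectors (x1, x2, bias) and labels.
S = [(2, 1, 1), (2, -1, 1), (4, 0, 1)]
y = [-1, -1, 1]
A = [[sum(p * q for p, q in zip(S[i], S[j])) for j in range(3)] for i in range(3)]
print(A)                # coefficient matrix: [[6, 4, 9], [4, 6, 9], [9, 9, 17]]
print(cramer3(A, y))    # -13/4, -13/4, 7/2, i.e. -3.25, -3.25, 3.5
```

Cramer's rule is fine for a 3 x 3 system worked by hand; for larger systems Gaussian elimination (what the step-by-step method above amounts to) is the usual choice.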

homevideotutor Thank you so much

homevideotutor Sir, can you please write a little about how to select the support vectors to begin with? For example, you selected three support vectors; how did you select them? Can we pick random vectors from each class and treat them as our support vectors?

They are the closest points to the class boundary.

how can you determine the class boundary?

I'd like to thank you.

www.scientific.net/AMM.534.137

Great video! I would suggest not speaking so softly; it's sometimes really difficult to make out what you said. :p

Thanks! :D