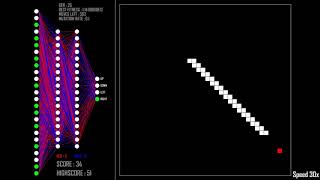

AI Learns GridWorld Using Pixels | Double DQN + Experience Replay


- Published: Oct 3, 2024

- This is an AI I created based off DeepMind's paper. It uses double DQN networks with experience replay to learn to play GridWorld from just the pixels on screen.

Code: github.com/Chr...

Twitter (soon): / chrispressoyt

I wanted to start using Unity to make games so this was a good intro to that for me. The AI portion is entirely in Python which made it so I could use the Unity ML API to interface with the Unity game I created.

Thank you, that is the best explanation of deep q learning that I have seen.

Thanks :) I often find that the algorithms behind AI aren't too complicated if you can get past the math syntax. The really nice thing is once you start understanding a few of them, the others will start to make more sense.

Awesome! I really like the further explanation

Glad you liked it! Thanks for the feedback!

There is so much good content in this video, and it's crazy to think what deep Q-learning can do. It's a pity it didn't do as well as your other AI videos.

Anyway, great content, hope you're planning to make some other video in the future :)

This is really cool, I am glad I found your channel!

Welcome aboard!

@Chrispresso Me too!

@Chrispresso, you're one of the best! Those who can program artificial intelligence, machine learning, and neural networks I consider to be geniuses! ;)

I demand more AI! (How about having 2 agents battle to the death?)

AI Power!

That is another option!

Thanks for the video. I'd love to see the AI start to learn some more advanced games. It's really interesting when novel techniques emerge such as the wall jumping in Mario Bros. I suppose a similar approach could be applied to newer Pokemon games, Minecraft, or Mario 64.

More to come! Don't you worry...

Wow! I love this video! I'm a bit scared to use custom RL algorithms in the ML-Agents Python API. Would you give me some advice? Or do you plan to show it in more depth? Thank you for your videos. I love your work! :)

Thanks :) What scares you about it? If there is enough demand I can definitely show in more depth how some of the code works. I just want to make sure that people in general can still understand what is going on, which is why I don't usually show code. If code is of interest, I can make it more modular and show specific sections that I think are important. I also plan to do some larger projects and use a lot more of the ML-Agents API that isn't covered here (channels specifically).

Radical

Thanks for making great videos!

Great video! Are you planning to go more in-depth into the Python training/testing code? Also, a quick question: for this specific case, did you pass all RGB layers into the convolutional network, or simply convert the source image to grayscale and use 1 layer? It seems that with RGB it would just learn that red = bad, white = good from the color data, but with grayscale it would actually have to learn the shapes (circle vs. cross).

I was thinking about showing some of the code and doing a quick explanation of how some things work, i.e. the experience replay or what the network looks like but wasn't sure if that was of interest to people. In terms of the layers, I did use RGB, but I also included a light source in Unity to cause shadows and used an X (cross) so that it would have gaps of red/gray to help prevent it from just learning colors. I tested it with using grayscale but it was a similar result.
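For readers curious about the experience replay mentioned above, here is a minimal sketch of the general idea, not the code from the video. The names `Transition` and `ReplayBuffer` and the stored fields are assumptions for illustration; the agent stores each step it takes and later trains on random samples of those steps.

```python
import random
from collections import deque, namedtuple

# One stored step of experience. Field names are illustrative assumptions.
Transition = namedtuple(
    "Transition", ["state", "action", "reward", "next_state", "done"]
)


class ReplayBuffer:
    """Fixed-size memory that drops the oldest transitions first."""

    def __init__(self, capacity):
        # deque with maxlen discards the oldest item when full.
        self.memory = deque(maxlen=capacity)

    def push(self, state, action, reward, next_state, done):
        self.memory.append(Transition(state, action, reward, next_state, done))

    def sample(self, batch_size):
        # Uniform random sampling (without replacement) breaks up the
        # correlation between consecutive frames of the same episode.
        return random.sample(list(self.memory), batch_size)

    def __len__(self):
        return len(self.memory)
```

In a pixel-based setup like this one, `state` and `next_state` would typically be the screen images (RGB or grayscale), and a batch drawn with `sample` is what gets fed to the network for each training update.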

@Chrispresso Interesting, thanks! Can't wait to see more videos like this.

Awesome videos Chris!

After learning Python basics, what would you recommend learning next for a beginner working towards understanding how to set up and train an AI to play games like in your videos?

Just practice, practice, practice. The more you do of anything, the better you become. If you're interested in the specifics of AI/ML, you can pick up books on the math behind it (if you care). Otherwise, if you care more about the application side, TensorFlow and PyTorch both have good tutorials you can check out (www.tensorflow.org/tutorials/quickstart/beginner and pytorch.org/tutorials/beginner/basics/quickstart_tutorial.html)

@Chrispresso Awesome! Thanks!

Really cool

I like this

Could you do a really dumbed-down explanation of deep Q-learning, for those who do not understand the mathematical notation? I'd like to implement it myself but I just don't get how it should work.

Ya I think I could do that. I was toying with the idea of doing another series explaining these algorithms at a very elementary level.

I'm your 1000th sub

Nice! Thank you for the support :D

@Chrispresso This guy should get something like a reward... You will remember this moment in the future... But I'm your first dislike though, just to be first in something.

This channel is love at first sight

great

Did this channel die? Please come back.

It did not die haha. I've been working on several AI projects but haven't gotten them to a state that I'd like them to be for a video.

Do you write the programs from scratch or do you use an AI gym?

I just don't understand how to calculate the potential reward for every step. You said that the reward gets lower when it takes a longer time to get to the goal, but how do you implement this in rewarding one action? And how do you implement the distance/amount of steps from the goal into potential rewards for single actions?

Each timestep it gets a small negative reward. When it hits a goal it gets +1, and if it hits the fire it gets -1. You can use the old network to calculate what it thinks it will get. The network acts as a function approximator and selects an action based on the result. Then, as one approximator updates and improves, the other will eventually update as well.
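The reward scheme and the two-network idea described above can be sketched roughly as follows. This is the standard Double DQN target, not necessarily the exact code behind the video. The step penalty of -0.01 and the discount factor of 0.99 are assumed values (the reply only says "small negative reward"), and the function names are hypothetical.

```python
STEP_PENALTY = -0.01  # assumed value; the source only says "small negative"
GAMMA = 0.99          # assumed discount factor


def step_reward(hit_goal, hit_fire):
    """Reward for a single timestep, following the scheme in the reply."""
    if hit_goal:
        return 1.0
    if hit_fire:
        return -1.0
    return STEP_PENALTY


def double_dqn_target(reward, done, next_q_online, next_q_target, gamma=GAMMA):
    """Training target for the action that was just taken.

    next_q_online: Q-values for the next state from the network being trained.
    next_q_target: Q-values for the next state from the older, frozen network.
    """
    if done:
        # The episode ended, so there is no future reward to estimate.
        return reward
    # The online network picks the action...
    best_action = max(range(len(next_q_online)), key=lambda a: next_q_online[a])
    # ...and the older network says how much that action is worth.
    return reward + gamma * next_q_target[best_action]
```

For example, `double_dqn_target(-0.01, False, [0.2, 0.9, 0.1], [0.5, 0.3, 0.8], gamma=0.9)` picks action 1 using the online values and scores it with the older network's 0.3, giving -0.01 + 0.9 * 0.3 = 0.26.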

@Chrispresso So if a step is part of a path that leads to the goal, it will get a small reward? Because not every step leads to a goal, but it can be part of reaching the goal. And, if so, how do you determine how much of a reward each step gets?
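One way to see how a step far from the goal still ends up with a value is to compute discounted returns over a short episode. This is a worked illustration of the general idea rather than anything taken from the video; the -0.01 step penalty and the discount of 0.9 are assumed numbers, and `discounted_returns` is a hypothetical helper.

```python
def discounted_returns(rewards, gamma=0.9):
    """Return the discounted sum of future rewards from each timestep."""
    returns = []
    running = 0.0
    # Walk backwards so each step can reuse the value of the step after it.
    for r in reversed(rewards):
        running = r + gamma * running
        returns.append(running)
    returns.reverse()
    return returns


# Two ordinary steps (small penalty each), then the goal (+1).
episode_rewards = [-0.01, -0.01, 1.0]
values = discounted_returns(episode_rewards, gamma=0.9)
```

Here `values` comes out to roughly `[0.791, 0.89, 1.0]`. No step is rewarded directly for being "on the path"; earlier steps simply inherit a discounted share of the +1 that follows, and longer paths inherit less. Q-learning estimates these values one step at a time instead of waiting for the episode to finish.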

i like it

Thanks!

Dead Channel??? Shame, it was good

To say it up front, I have no experience with AI.

If someone had a powerful enough computer, could this AI be effective at making the ultimate Super Smash Bros. Ultimate AI? Or would an AI that learns by watching gameplay be better for the job? Or would a completely different type of AI be better suited for the application?

I wouldn't use DQN for Smash, but you could do something similar. It could definitely learn from playing. If you placed the AI against a level 9 CPU, it would at least have a challenging opponent to start with. That would make it so it doesn't need to watch gameplay. Watching gameplay is definitely an option as well though. Things like the original AlphaGo "watched" games played by human experts in Go before improving through self-play. But lots of different techniques can be used for things like Smash, and I've been considering trying out Smash for N64 to start testing!

@Chrispresso awesome

Pls more

I got a crazy idea. (I know the issues with this, and I know for a fact that no one is crazy enough to do it xD, because it would ruin the FPS community, but it would be hilariously evil to see.)

An AI that can play 3D FPS shooters (yes, the ones with guns xD).

I'm a little bit greedy and ambitious xD, but I would love to see one that can play online FPS games like Counter-Strike, Valorant, Overwatch, Apex Legends, etc.

For starters, it could play a simple training ground like the one Valorant has, and once it passes that training course you could put it against bots in Counter-Strike (CS:GO).

If it manages to win 51% or more, you put it against humans in free play, and if it again gets 51% or more wins there, you put it in competitive. Just make sure the bot isn't using any exploits that humans haven't discovered; we don't want the OP AI's cover to be blown xD.

Yes, it's a joke, but not really a joke, because if you did it, it would be hilariously funny to see people not being able to beat a bot xD.

Or, simply enough, make an AI that sees in black and white and has values on those colors that say this is an enemy and this is an ally xD. Done xD.

Plz do "AI learns to play Baldi's Basics"

Dislike because we never saw it learn anything :(

It did though! That's why it sometimes runs into the red blocks and dies or why it goes back and forth forever. It's learning during that time period. The learning here is a lot faster than some other environments since it learns every few steps it moves + it's an easier environment except it uses raw pixels as input.
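The "learns every few steps" schedule mentioned above could look something like the sketch below. The interval values and function names are assumptions for illustration and are not taken from the video's code.

```python
def should_learn(step, buffer_size, learn_every=4, min_buffer=32):
    """True when it is time for a training update.

    Waits until the replay memory holds at least `min_buffer` transitions,
    then trains once every `learn_every` environment steps.
    """
    return buffer_size >= min_buffer and step % learn_every == 0


def should_sync_target(step, sync_every=1000):
    """True when the older (target) network should copy the online weights."""
    return step > 0 and step % sync_every == 0
```

With checks like these inside the main loop, the agent keeps acting in the environment while training happens in the background of the episode, which is why mistakes such as running into red blocks are still visible early on.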

@Chrispresso

As a viewer though, this AI was disappointing because you didn’t show it go through enough generations to show it “worked”