JAX: accelerated machine learning research via composable function transformations in Python

HTML-код

- Опубликовано: 18 ноя 2020

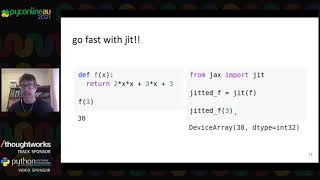

- JAX is a system for high-performance machine learning research and numerical computing. It offers the familiarity of Python+NumPy together with hardware acceleration, and it enables the definition and composition of user-wielded function transformations useful for machine learning programs. These transformations include automatic differentiation, automatic batching, end-to-end compilation (via XLA), parallelizing over multiple accelerators, and more. Composing these transformations is the key to JAX’s power and simplicity.

JAX had its initial open-source release in December 2018 (github.com/goo.... It’s used by researchers for a wide range of advanced applications, from studying training dynamics of neural networks, to probabilistic programming, to scientific applications in physics and biology.

Presented by Matthew Johnson